Hardware Update

Sorry it’s been a while since my last post, everyone – but fear not, I have been busy! We’re just waiting on Synapse to review the SteamVR Tracking license recap and community questions post once they return from their Thanksgiving holiday. Expect it this week! Also look forward to seeing my janky prototyping skills sometime soon :)

In the meantime, I suppose I’ll share the development process of a VR storytelling experience I’m working on. I’m callin’ this one “Somniat.”

Viveport Developer Awards

While I can talk about VR design for days, I haven’t had the chance (or made the time) to design a proper experience yet. However, with the Thanksgiving holiday I found myself with a week free of obligations, and HTC just so happens to be hosting an awards showcase called the Viveport Developer Awards (VDAs) that doesn’t close until 30 November. I’ve been working on a storyboard for an interactive narrative over the past month, so I figured I’d try to assemble it into a functional experience in the ~10 days left after really solidifying the storyboard. It’ll be like a game jam, but longer! …and lonelier.

Also, to be completely transparent, there’s a good chance this experience won’t be eligible for the VDAs. I believe it needs to be published by 30 November, and the Viveport Developer Guide states that developers should allow two weeks of overhead for the submission process; plus there are always unforeseen hiccups in the development workflow. Whether or not it’s eligible, I’m still excited to bring this experience to life and hear what users have to say about it.

But hey! I’m holding onto that good old-fashioned “what if” for some extra motivation.

Somniat

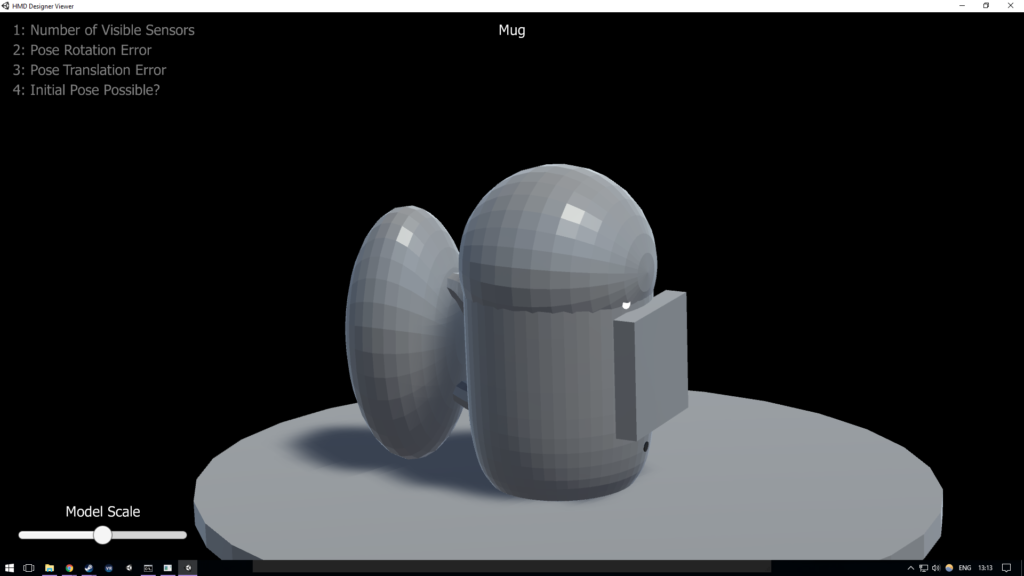

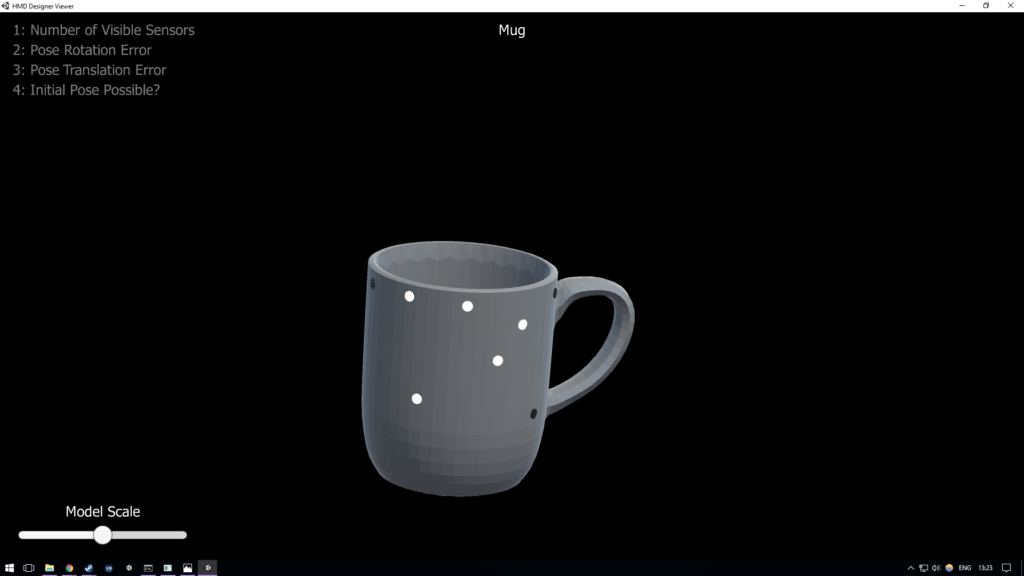

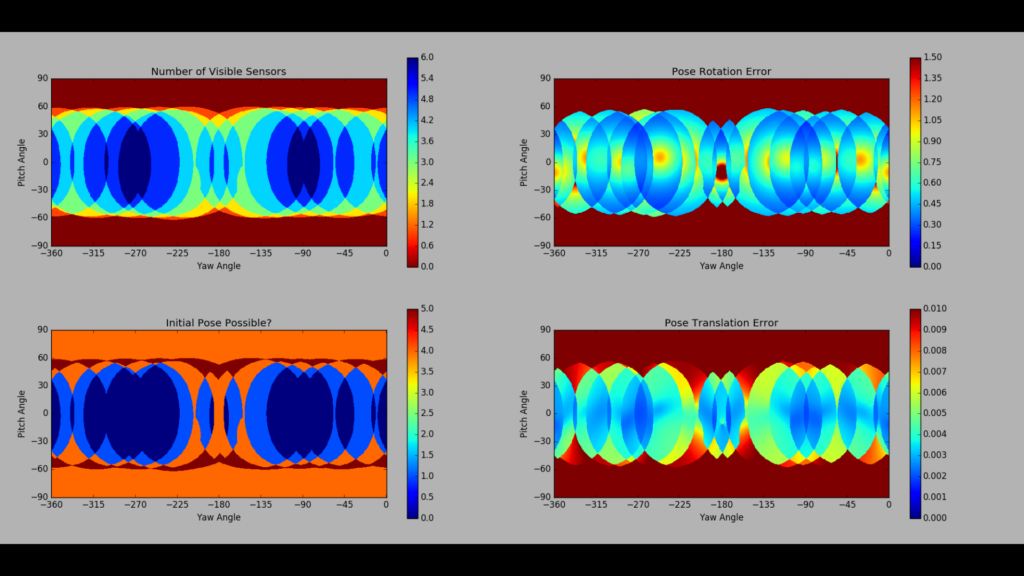

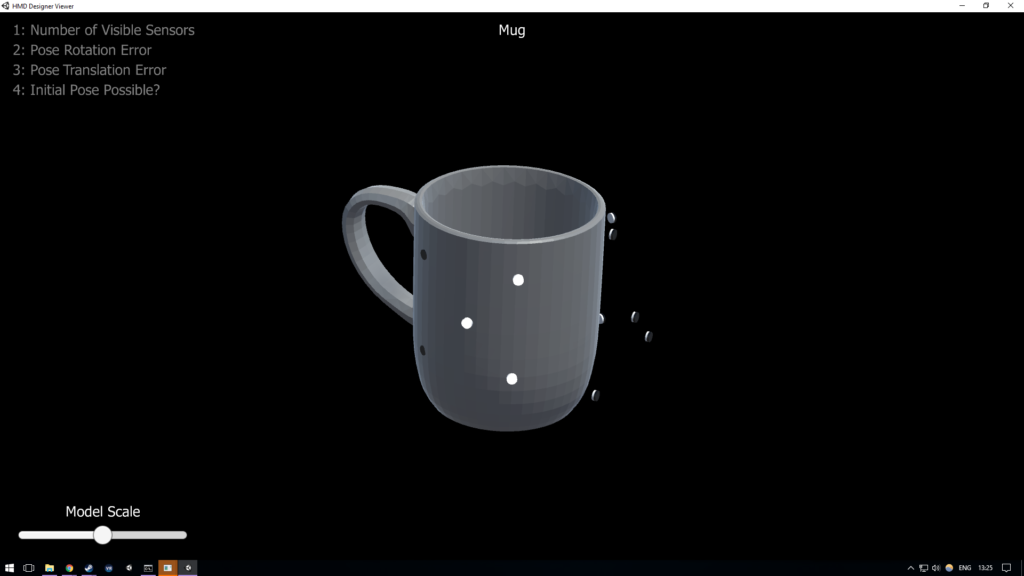

So as a brief preface, I’ve learned that when I create something, I really like to know every bit of how that thing works, inside and out. Because of that, I like to start from the ground up in a lot of situations (this is why the Talaria locomotion wearable prototyping environment is made entirely of primitives – I wasn’t familiar with any formal 3D modelling at the time, and still wanted to know everything that was going into the project). I suppose it’s tied to a love of learning, and also probably a bit of perfectionism. Either way, because of this mindset I plan on very minimally using outside assets in this project. I would love to do all the scripting, modelling, audio, interaction, story, and so on internally. This isn’t because I think I can do it “better” than others already have (I can assure you that anyone with a focus in any of those aforementioned areas could easily surpass what I create), but because I’m eager to experience the process of creating an experience from start to finish and putting it all together, performing all steps in between. Plus I get to create an experience for other human beings. How cool is that?! Again, this may not be a practical approach considering the given time frame, but nevertheless I am looking forward to what I will learn.

So Somniat is going to be an interactive storytelling experience. I’m really drawn to the possibilities VR has as a storytelling medium, and this project is a way for me to start exploring what is possible. Going in, I know there are a few things I would like to avoid or focus on: I don’t want this to be a passive experience. I want the user to be the main character of a plot, and not to watch the plot happen to somebody/something else. I also love suspension of disbelief and experiencing the surreal. I find surreal experiences in VR to be so profound; it’s like unlocking all the imagination we had when we were kids and telling our brains it’s real again. And that leads to my last hope – I want my users to have a return to innocence; to be kids again. I fear that by simply living our lives we easily lose sight of all the imagination, spontaneity, and joy that followed us daily through childhood. I want to try and reconnect with those feelings, even if it’s just a little bit.

Since this is due to be such a quick turnaround, if you’d rather experience the content firsthand without spoilers, feel free to come back to the rest of this post once the release is public.

Let’s get into the storyboard!

Storyboard

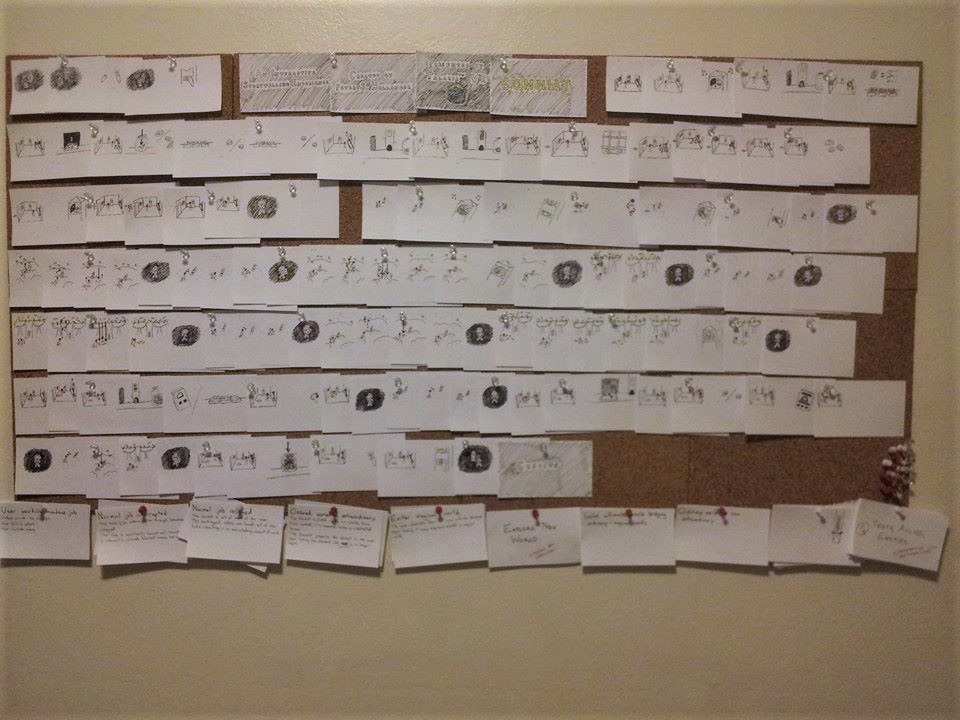

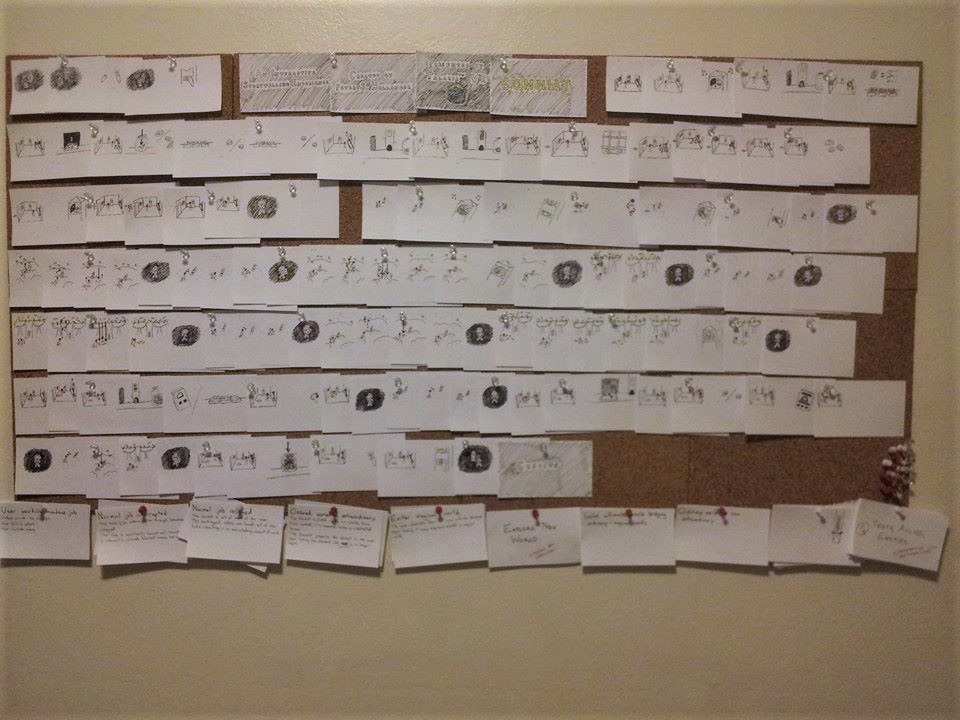

Behold! My storyboarding wall!

That’s 110 cards pinned up. It was a really fun adventure watching all those doodles come together into a coherent story.

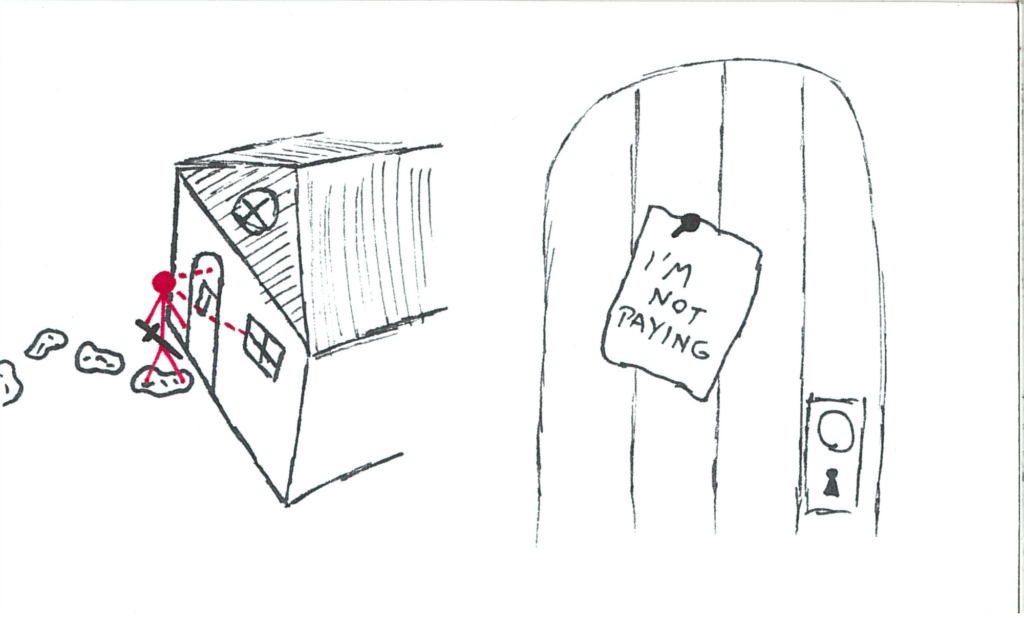

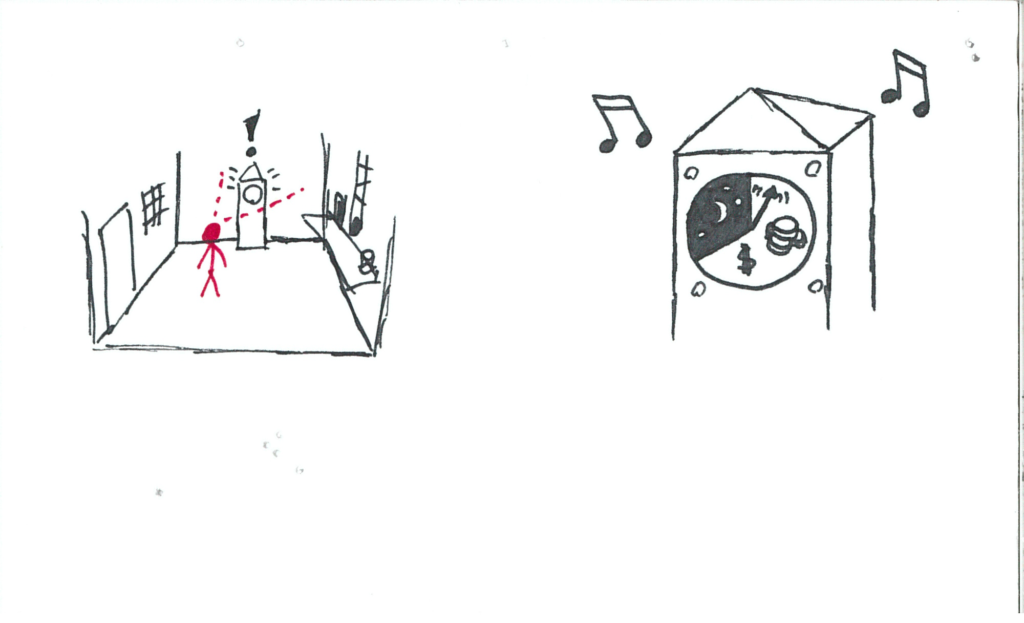

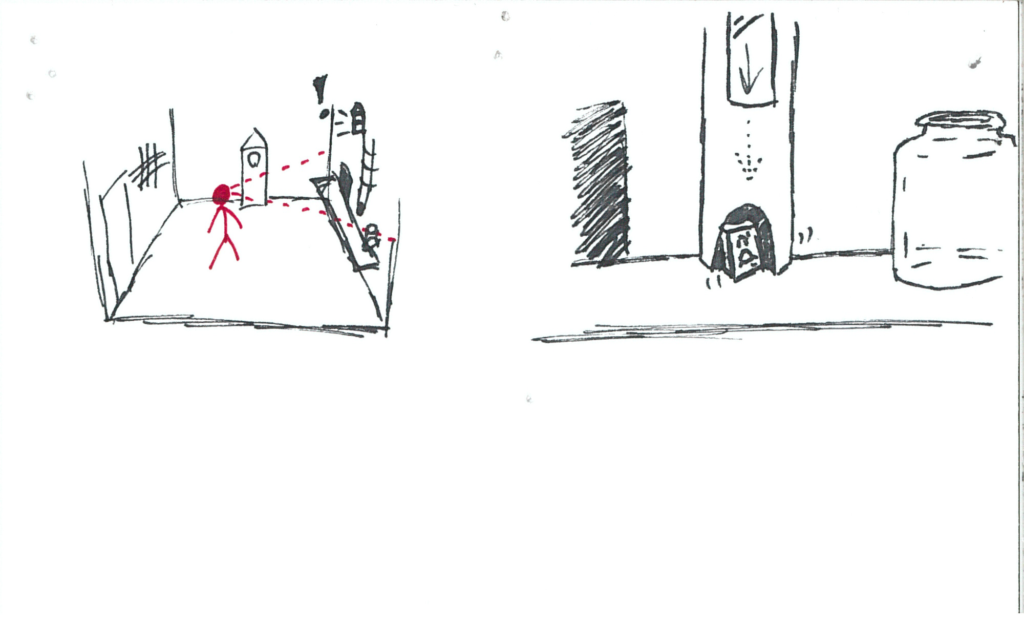

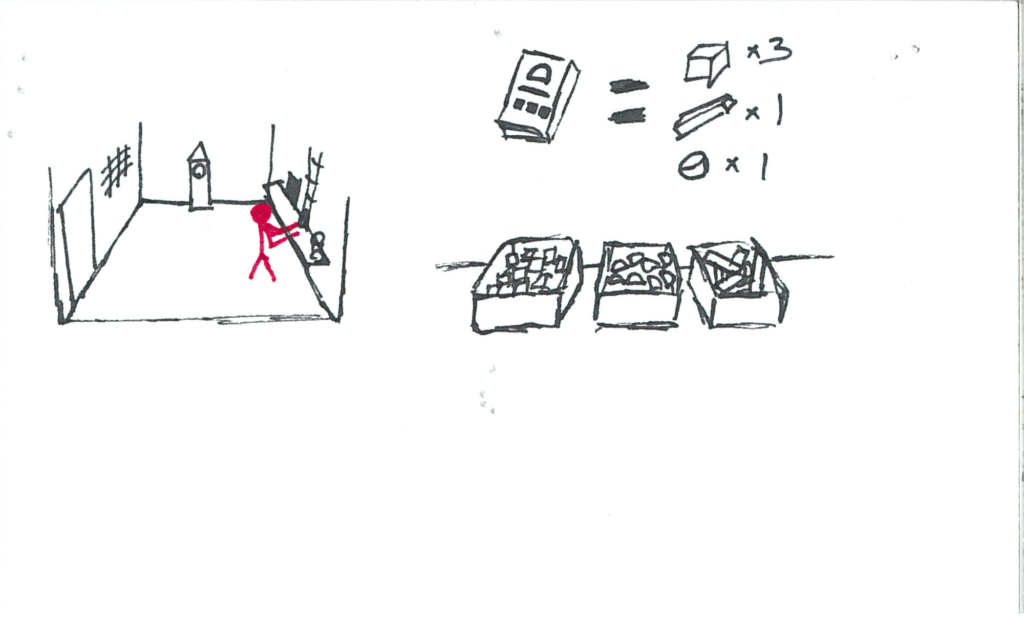

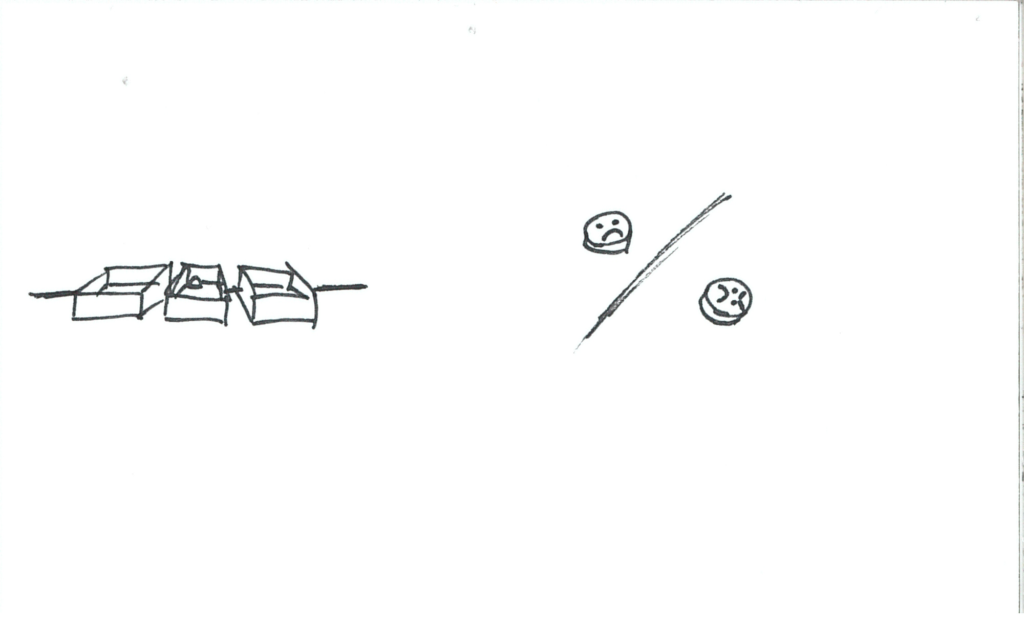

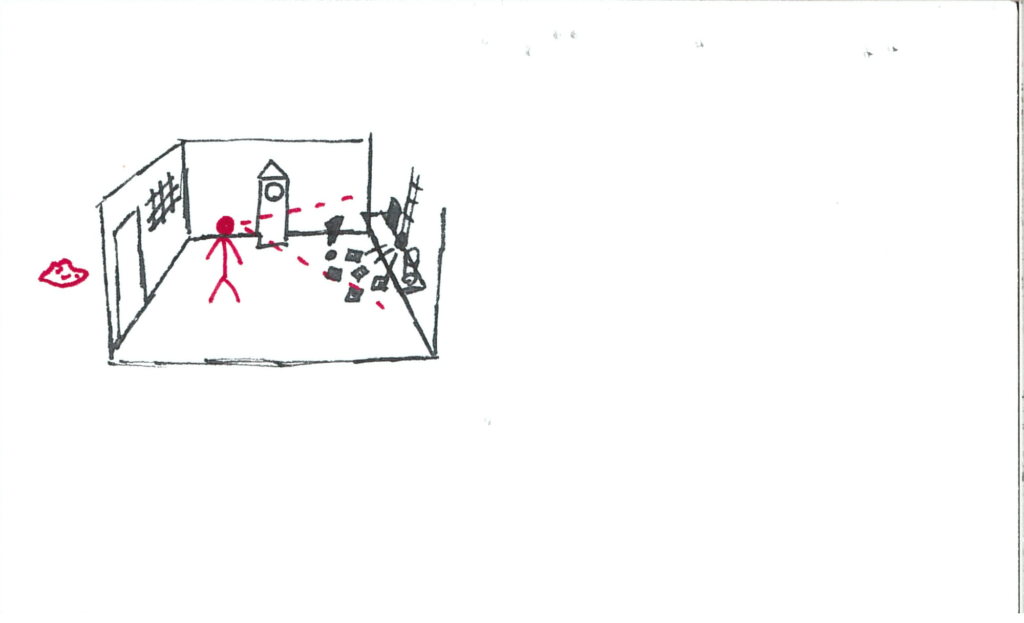

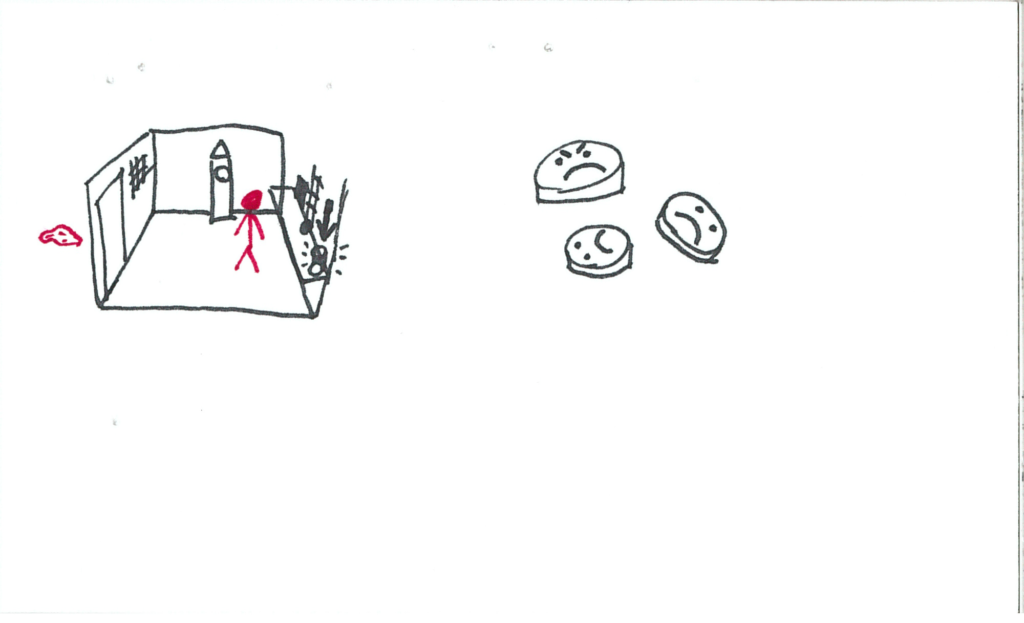

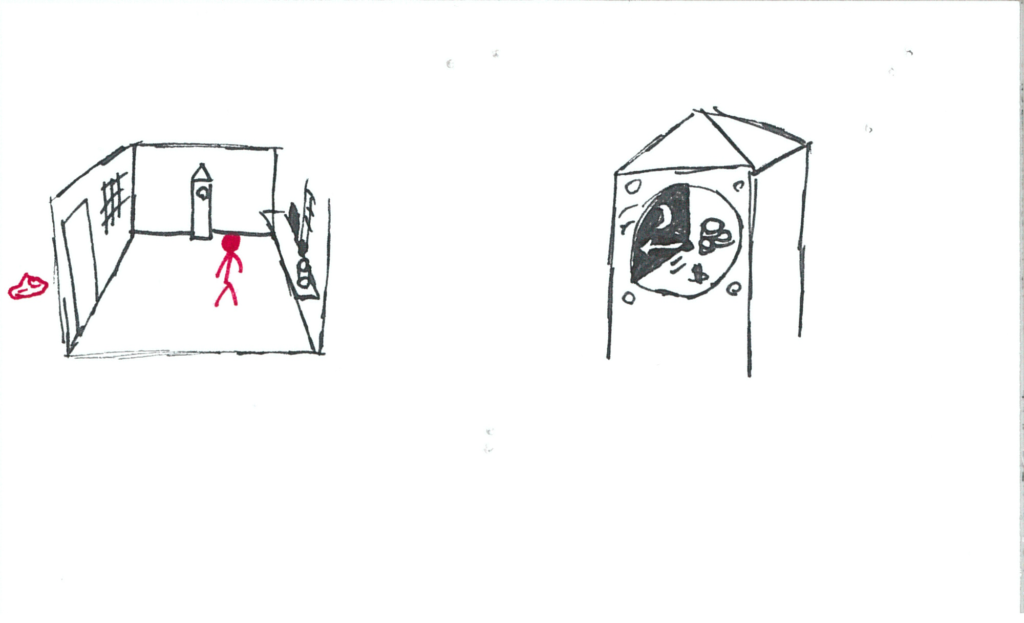

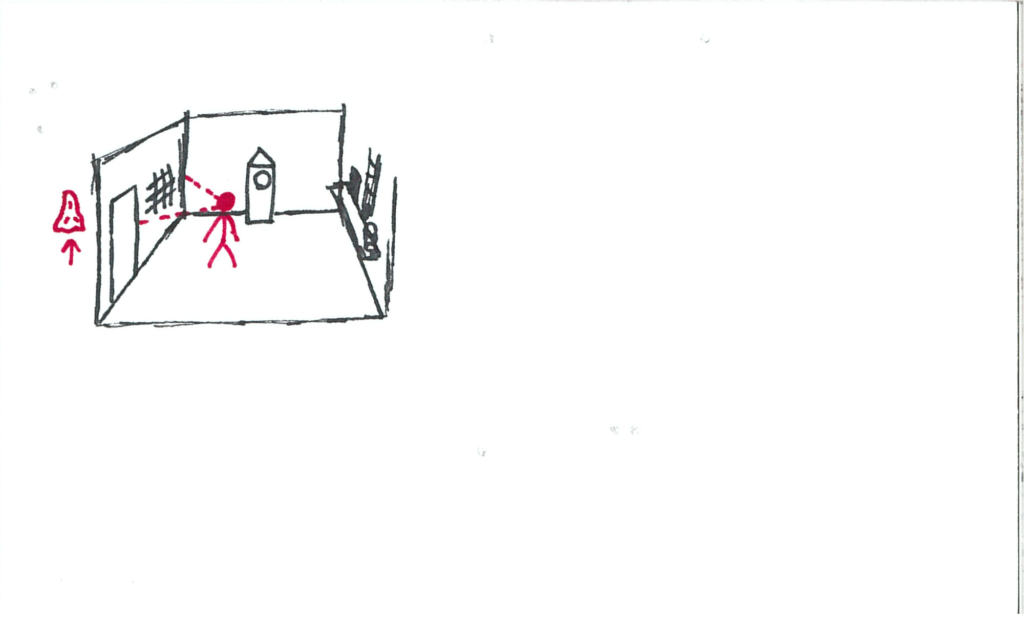

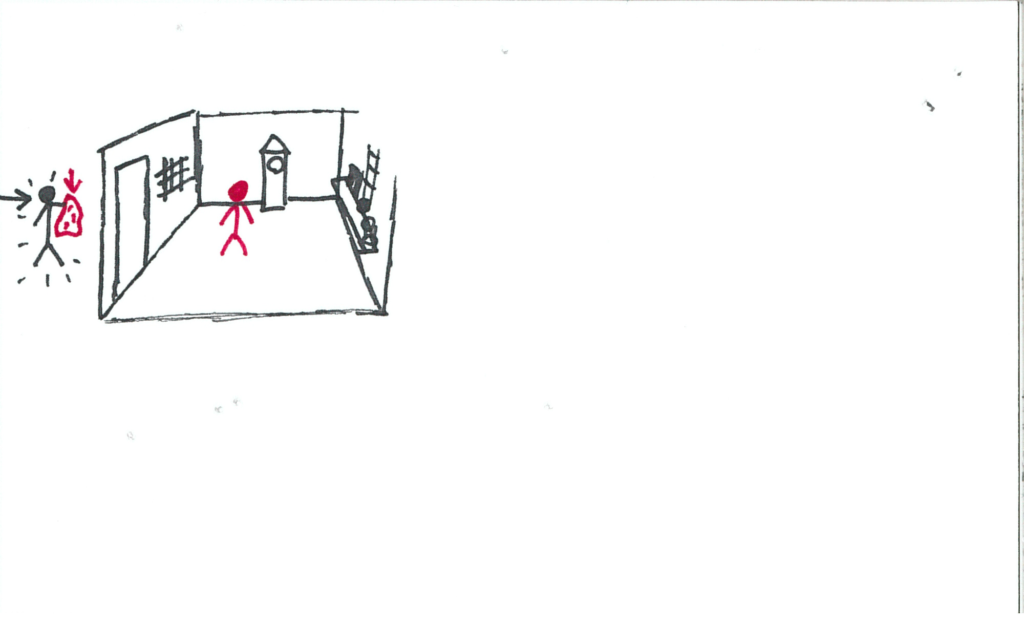

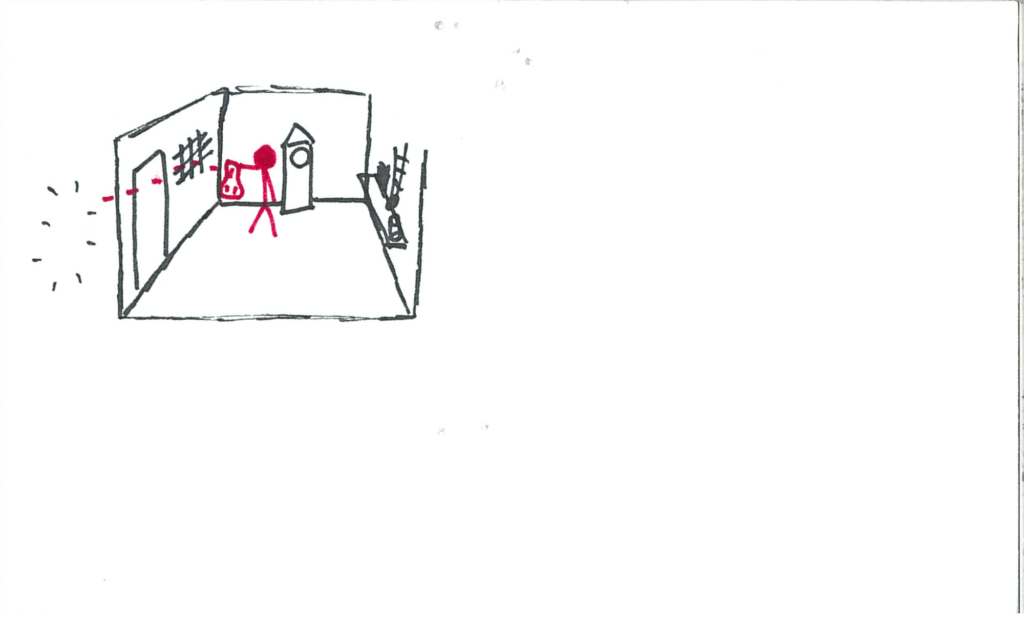

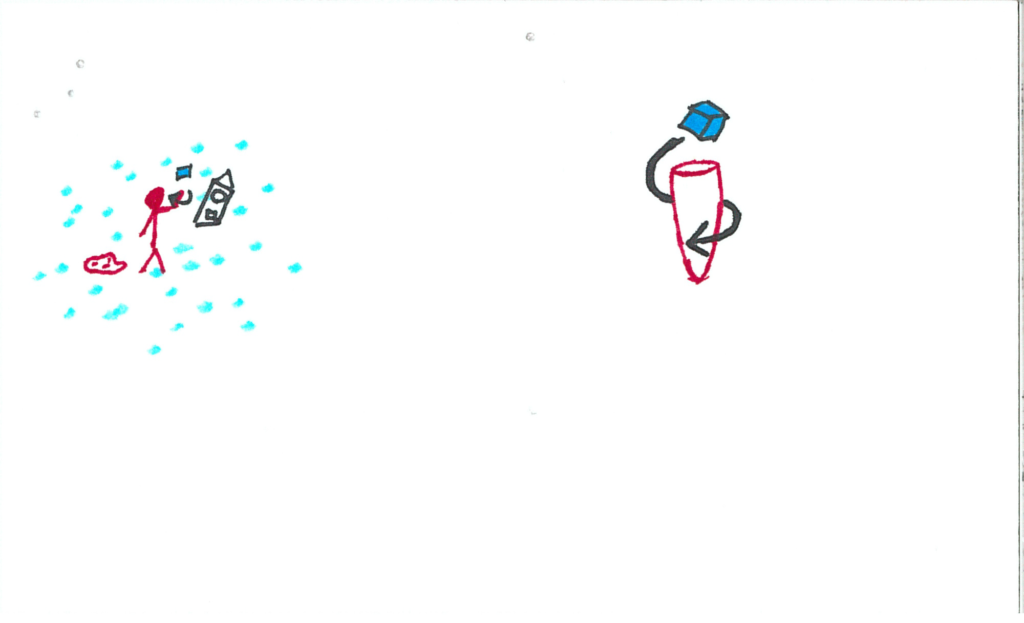

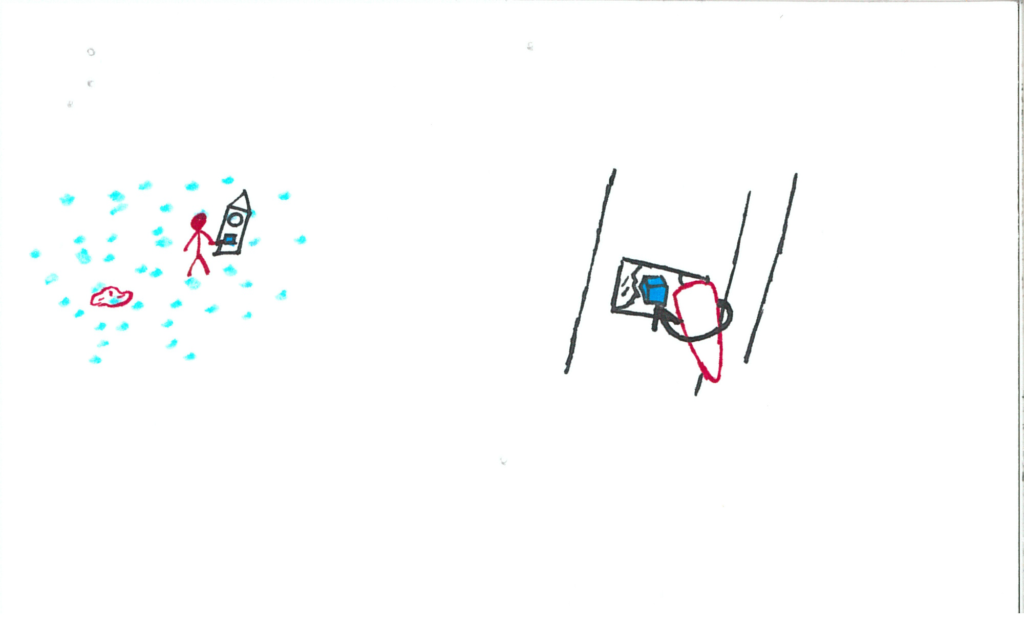

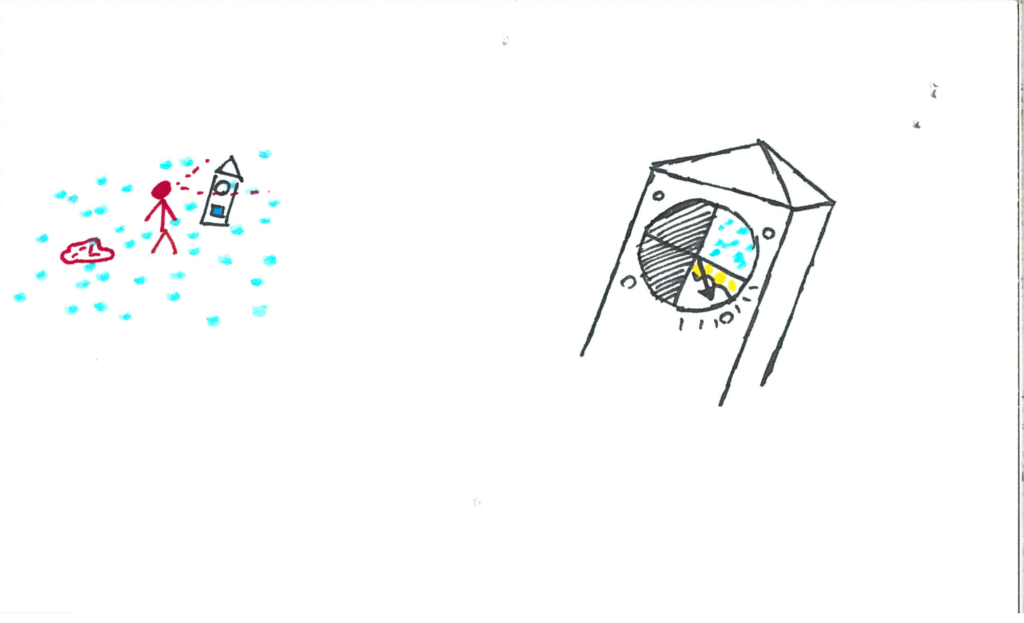

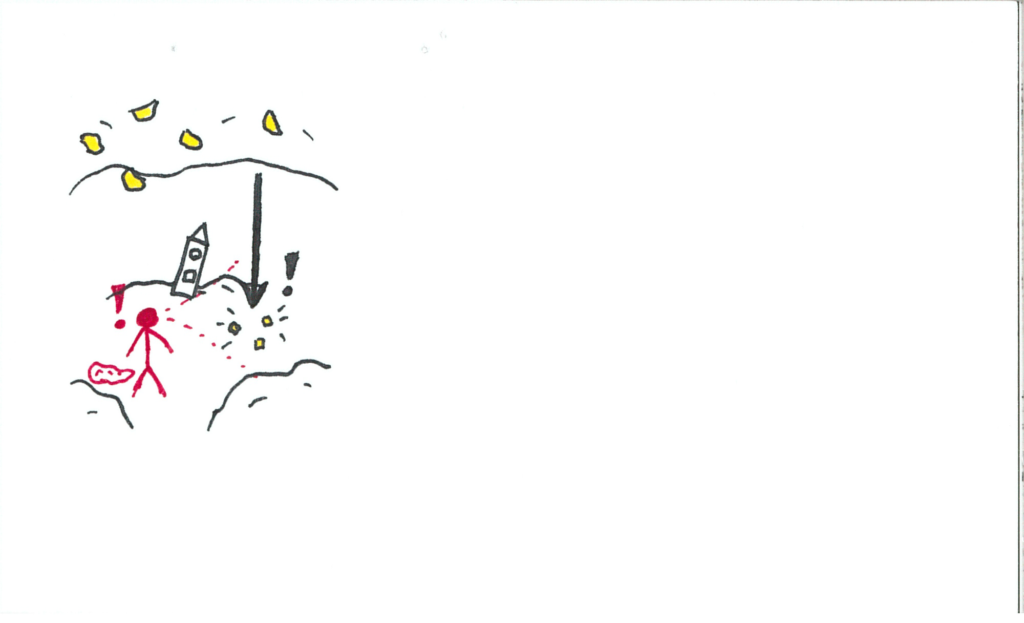

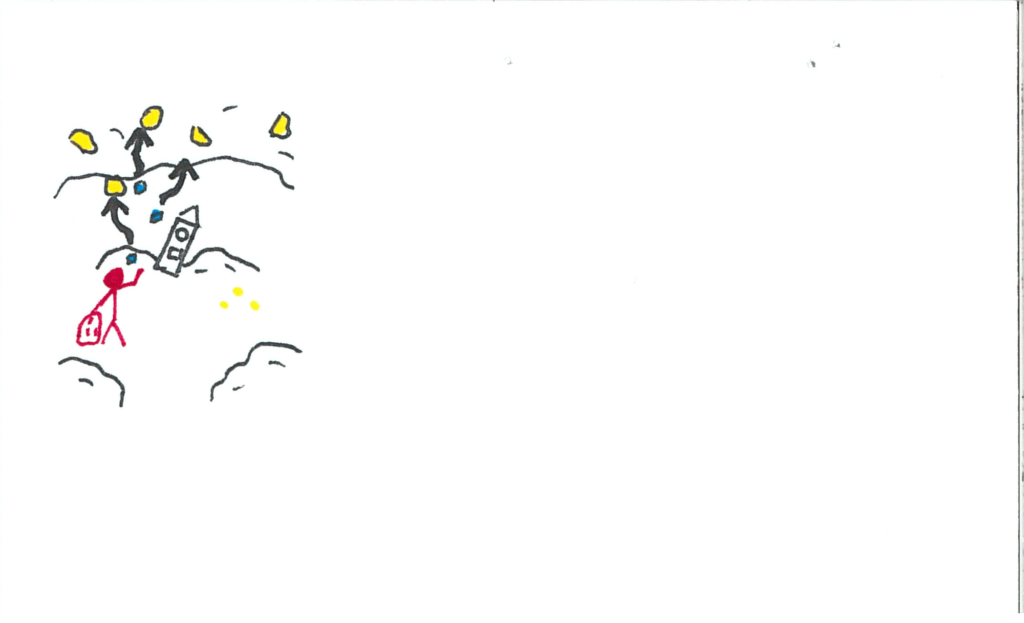

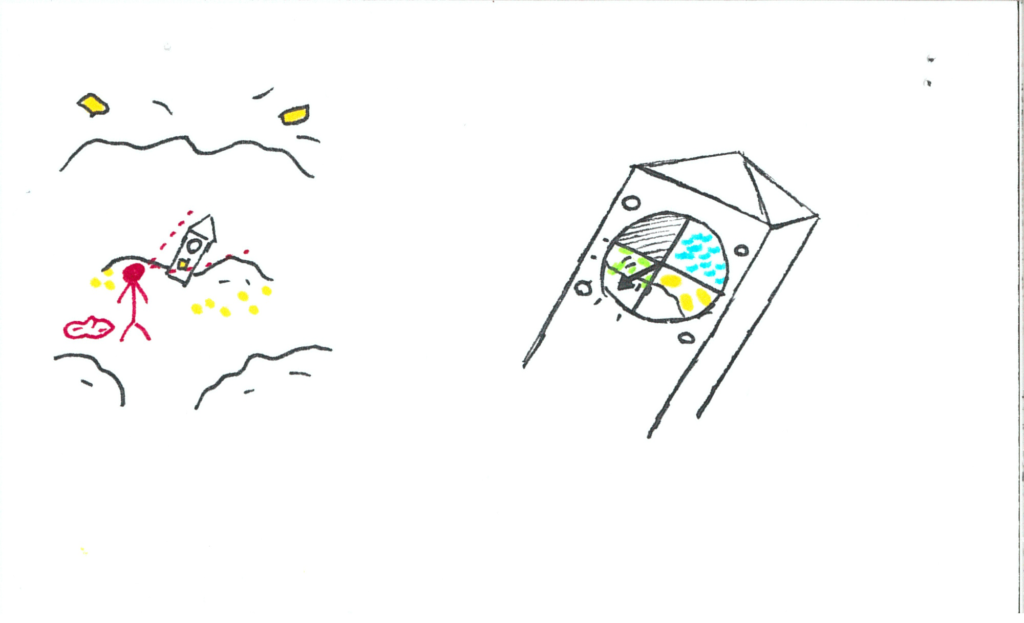

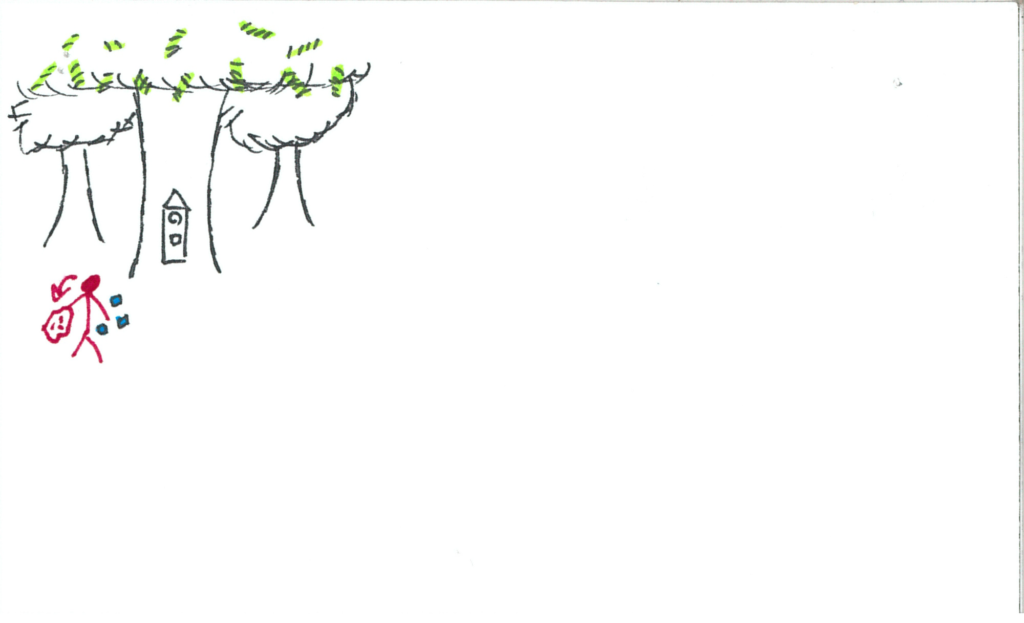

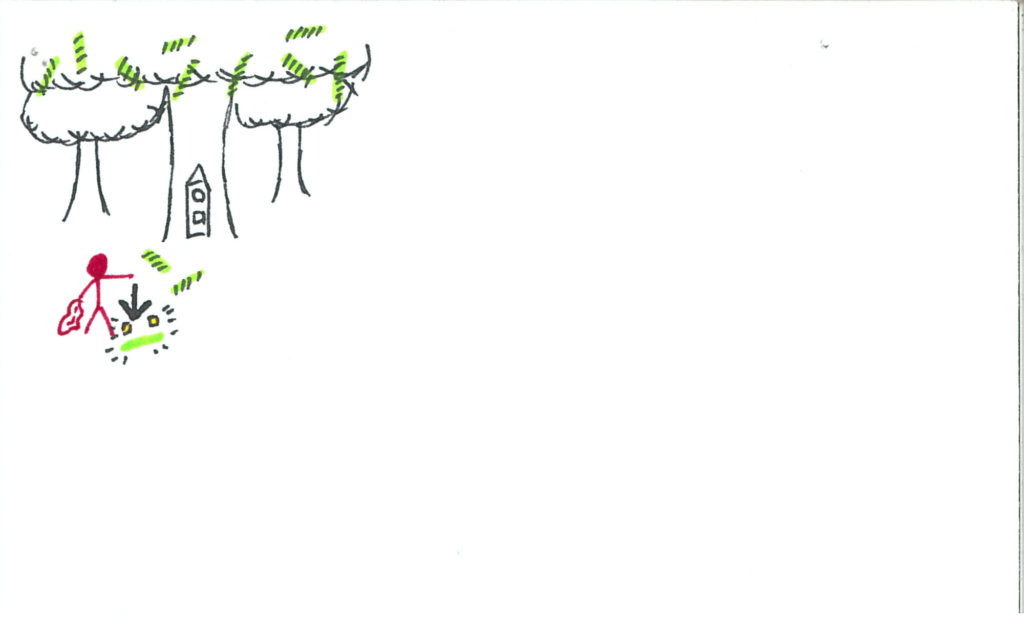

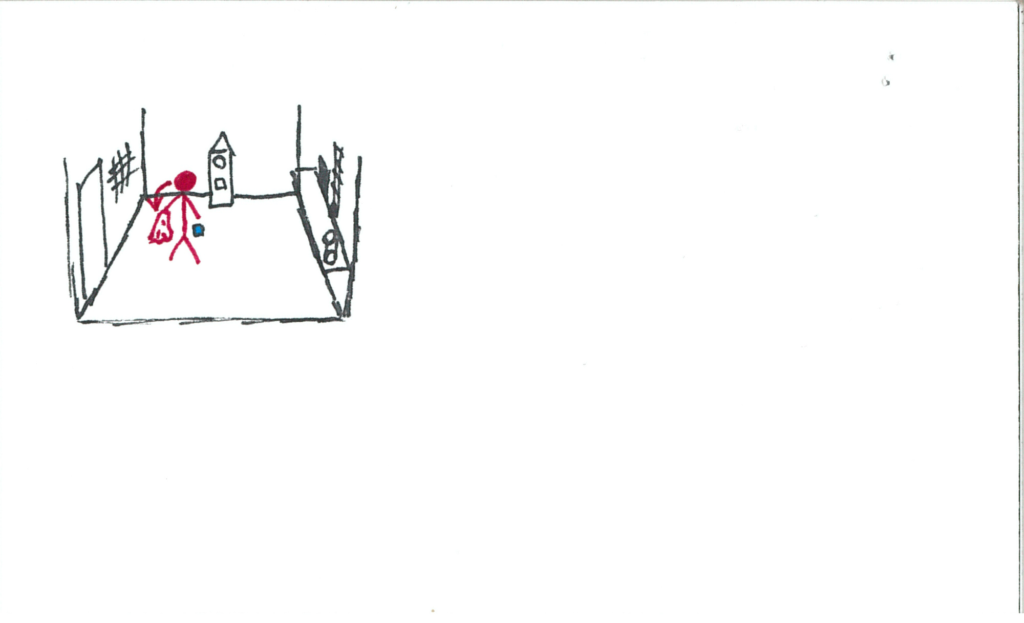

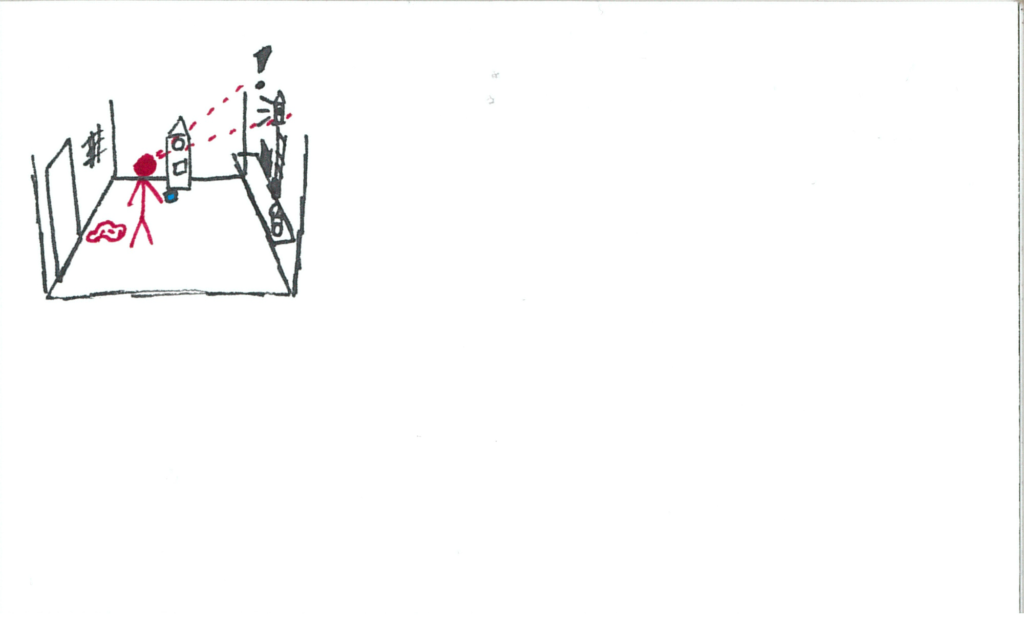

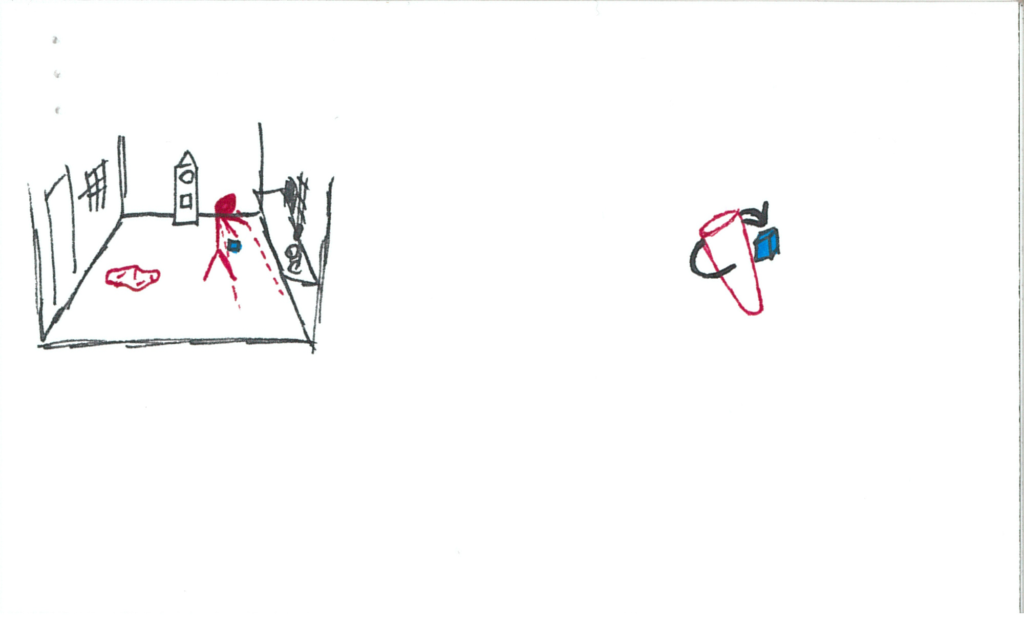

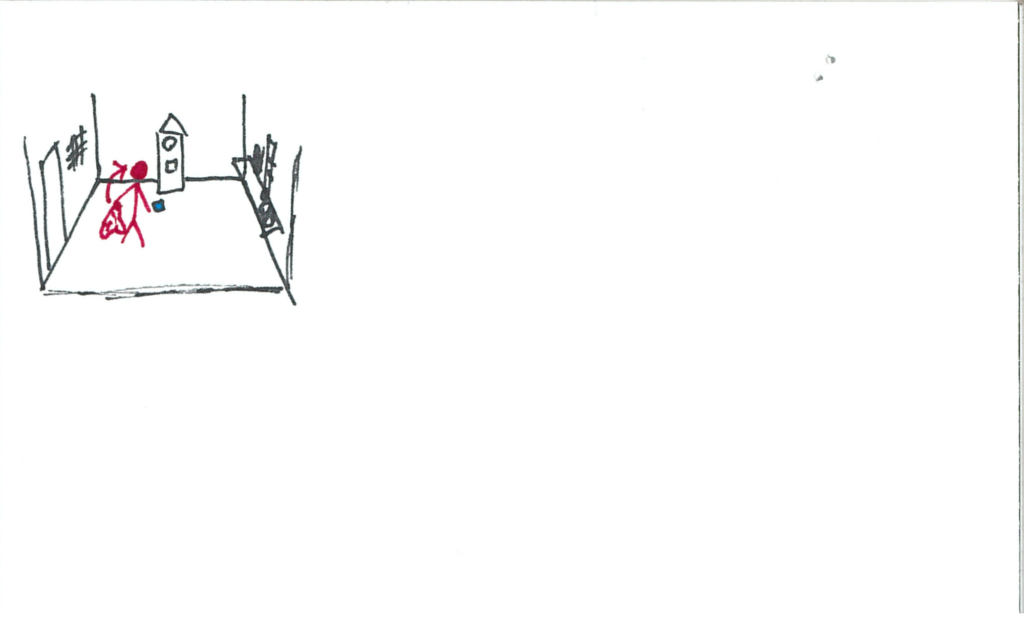

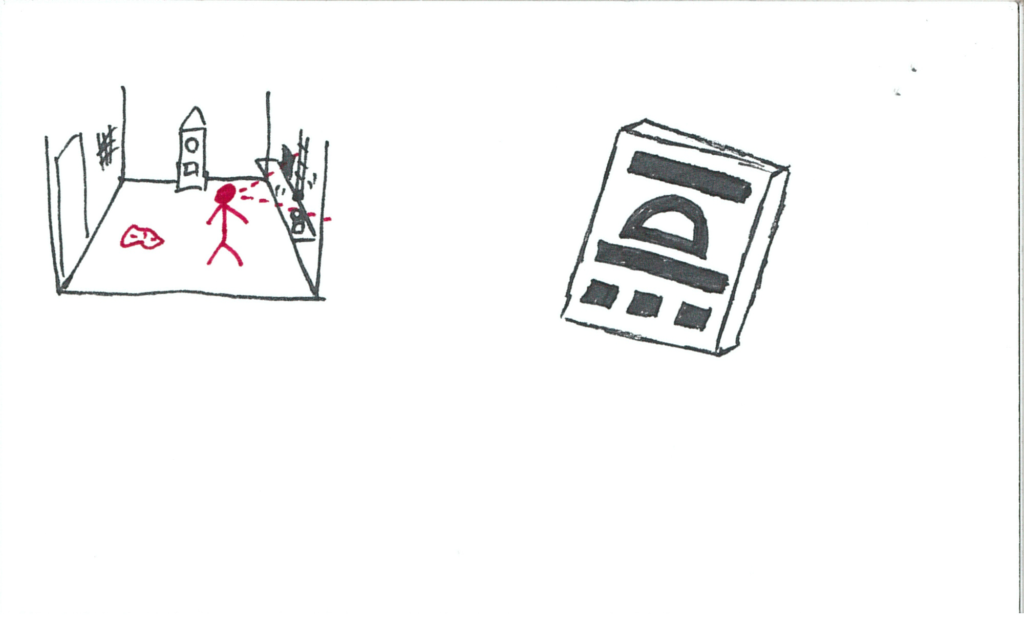

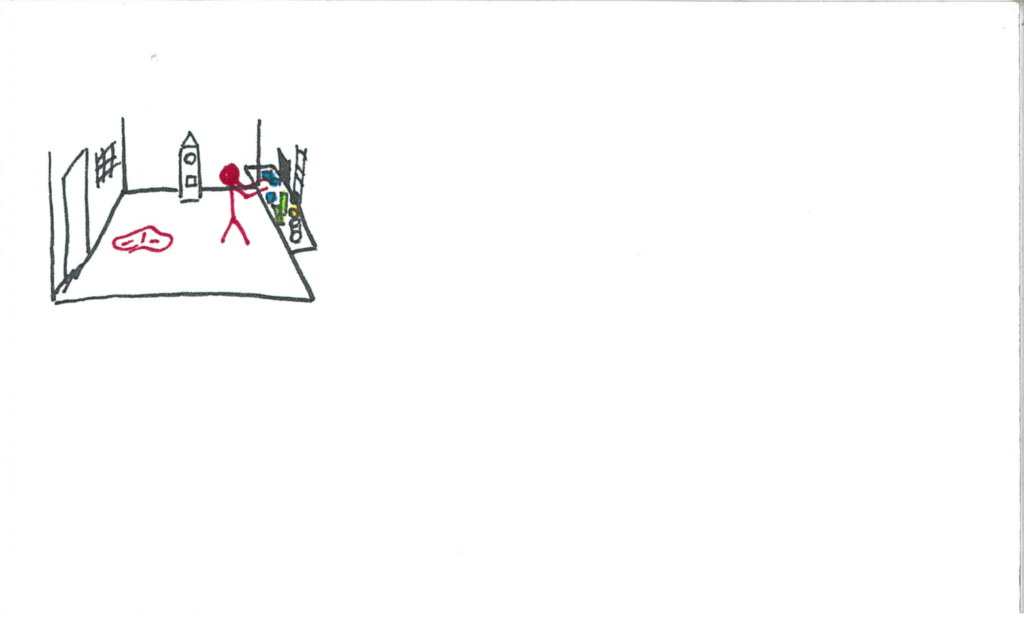

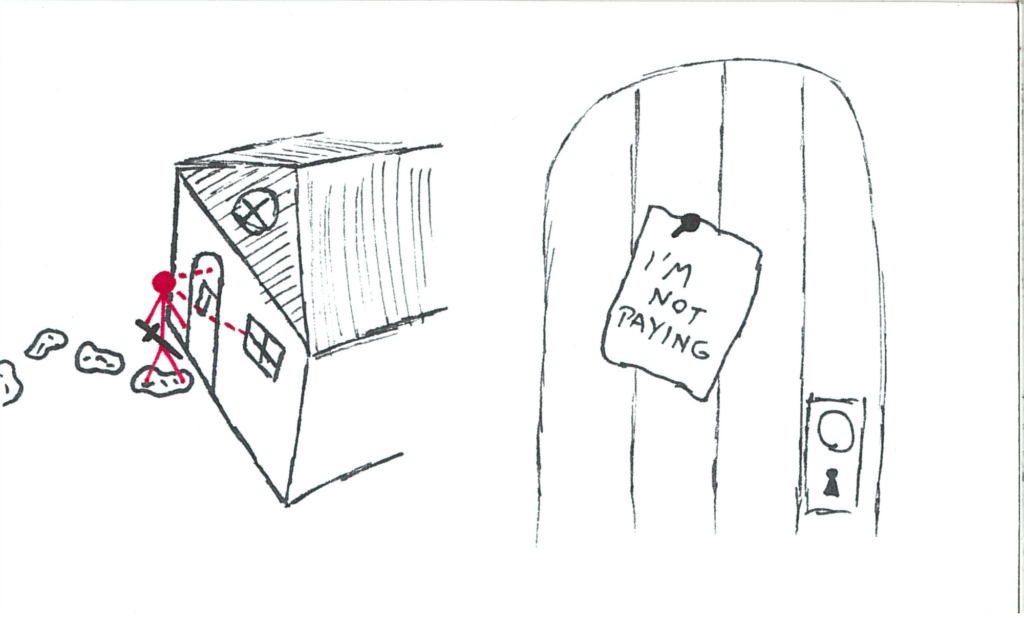

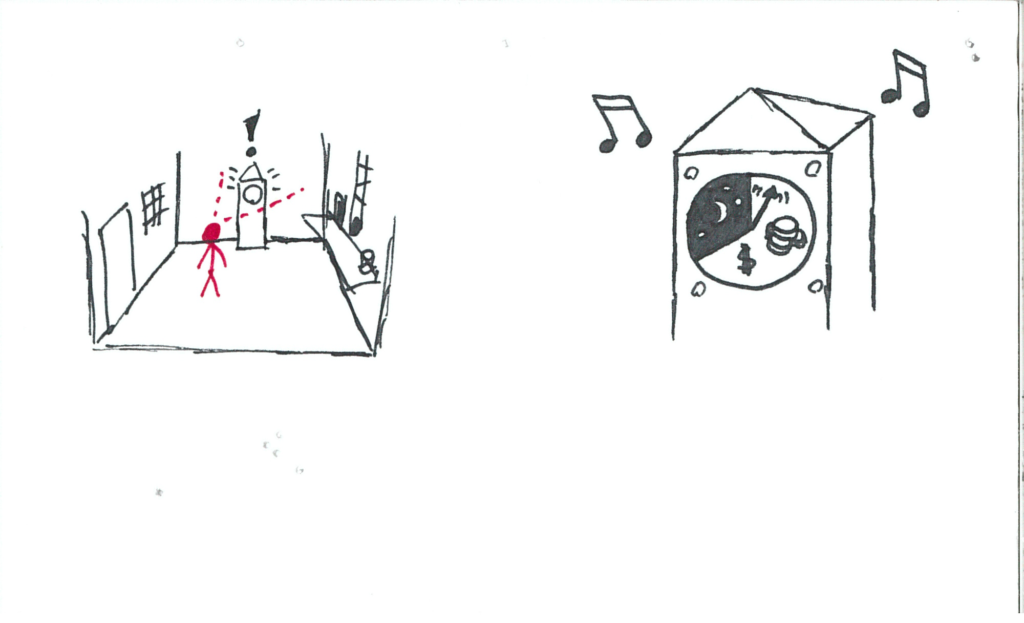

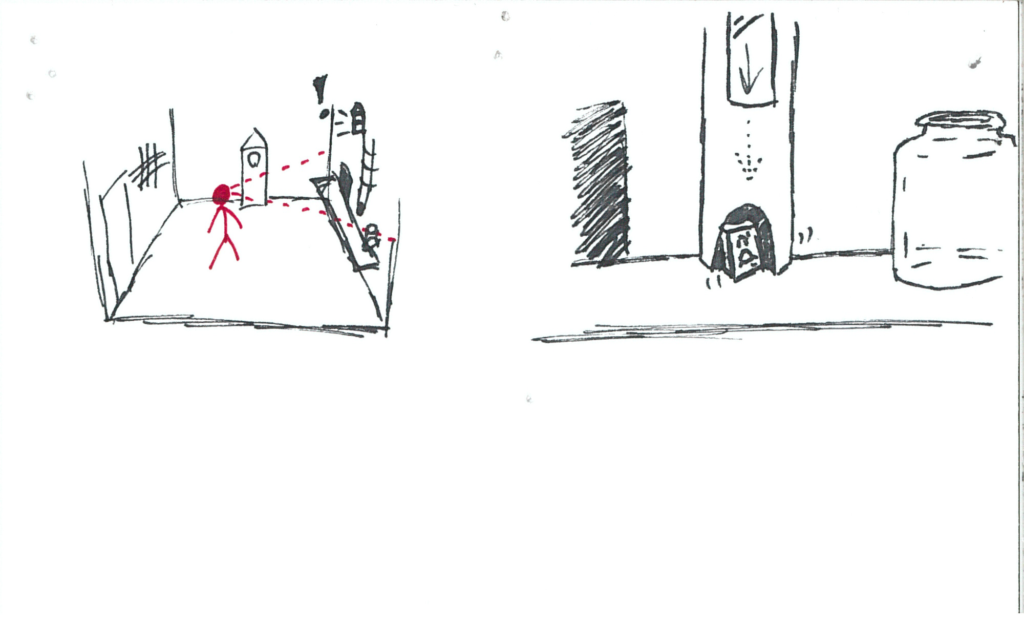

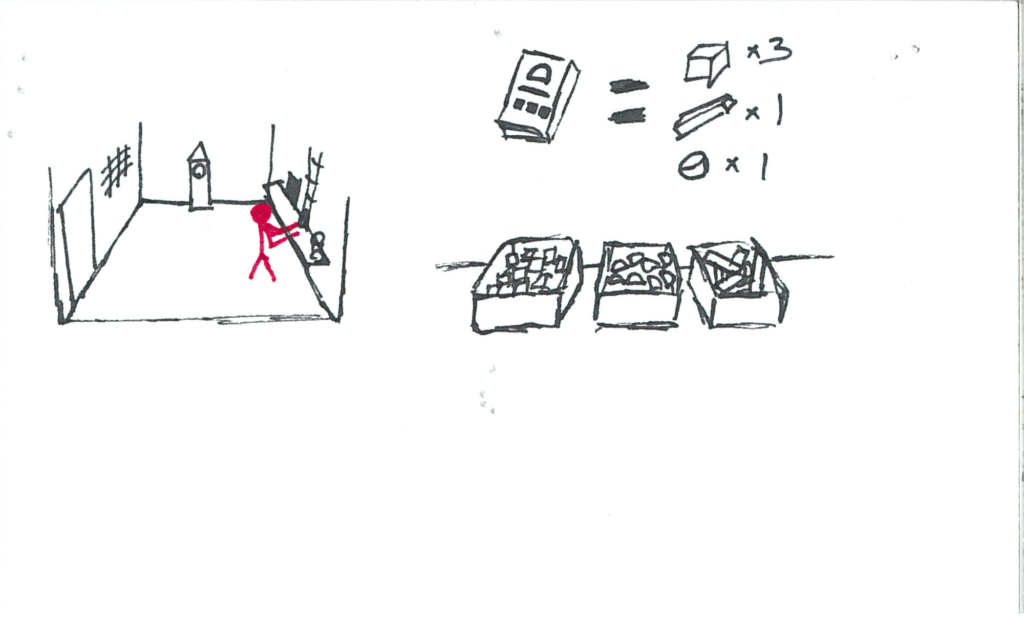

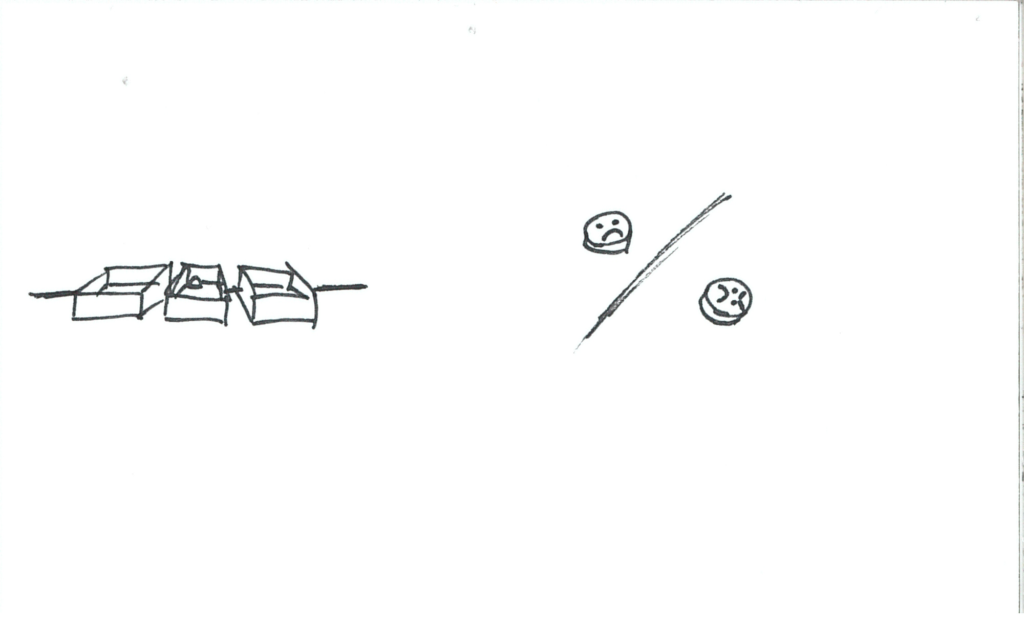

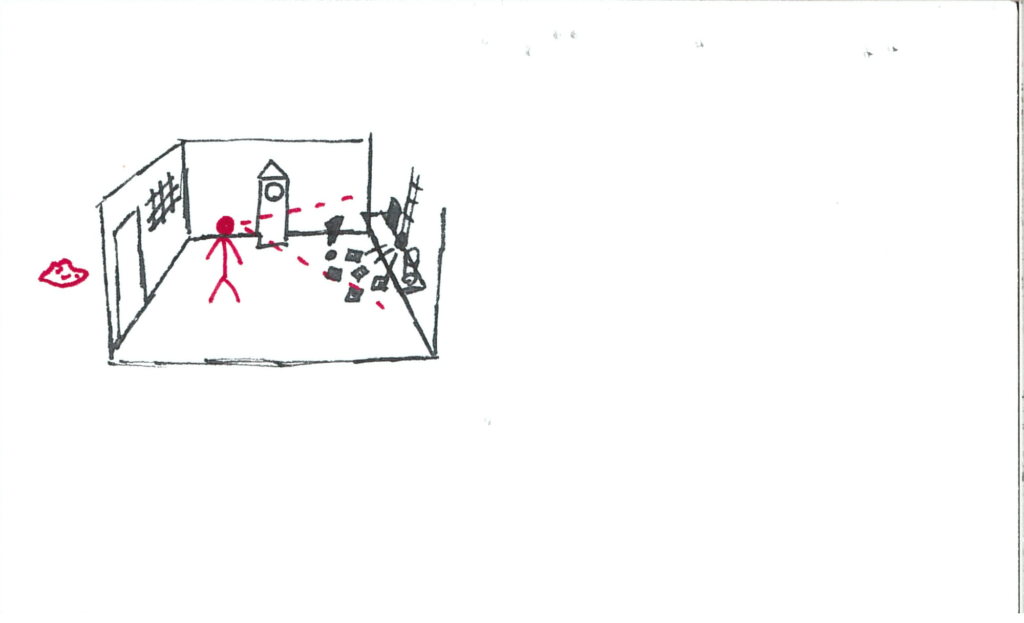

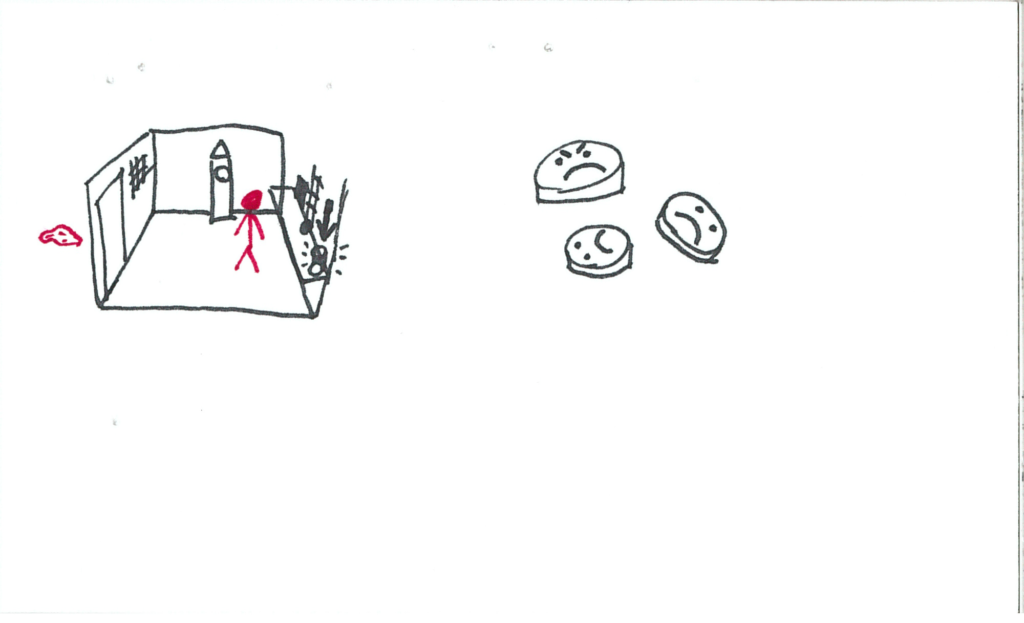

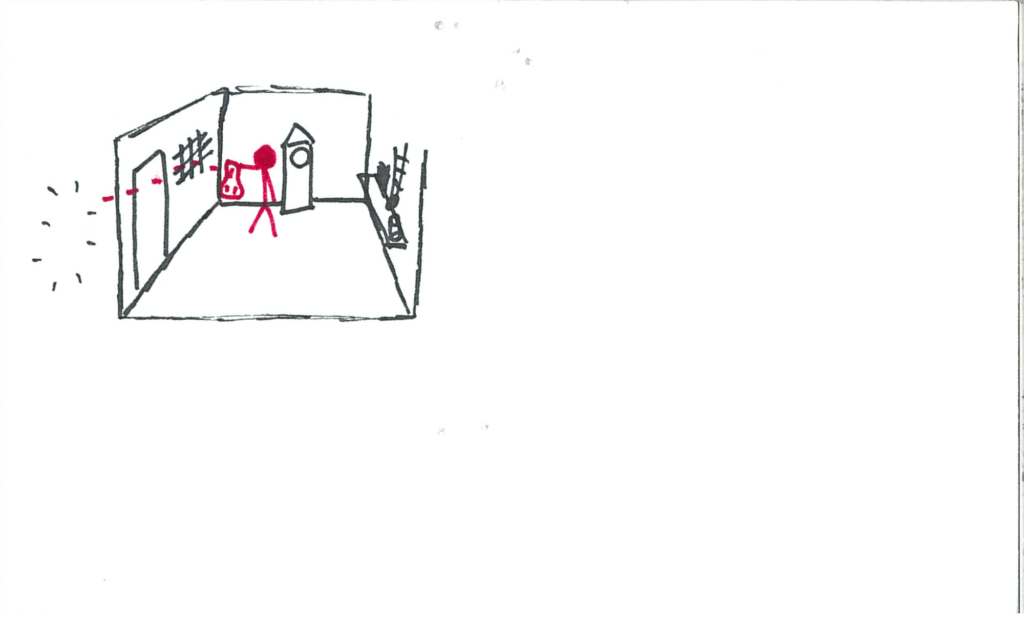

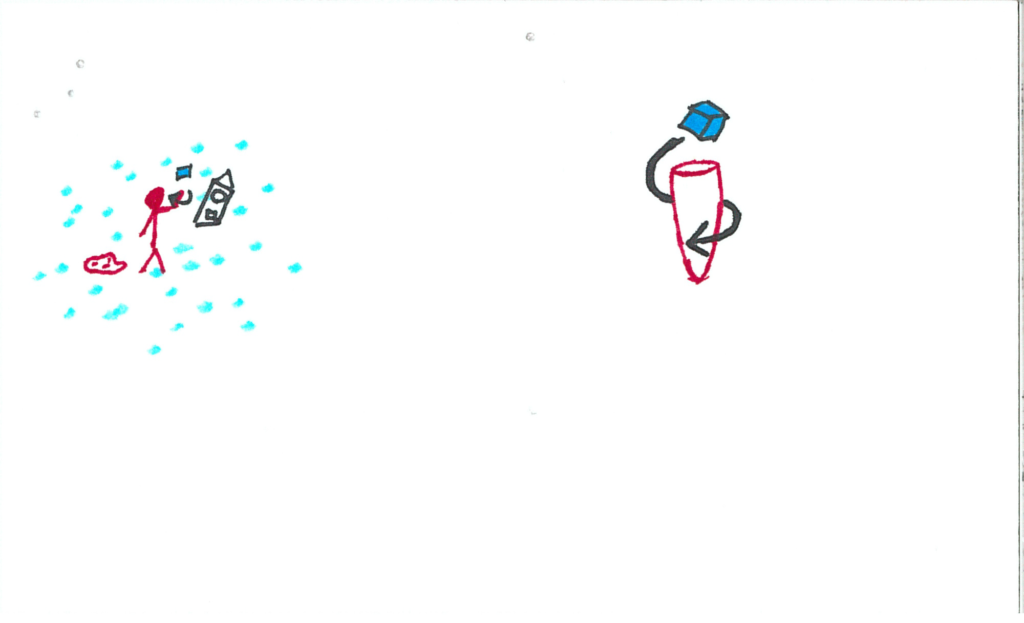

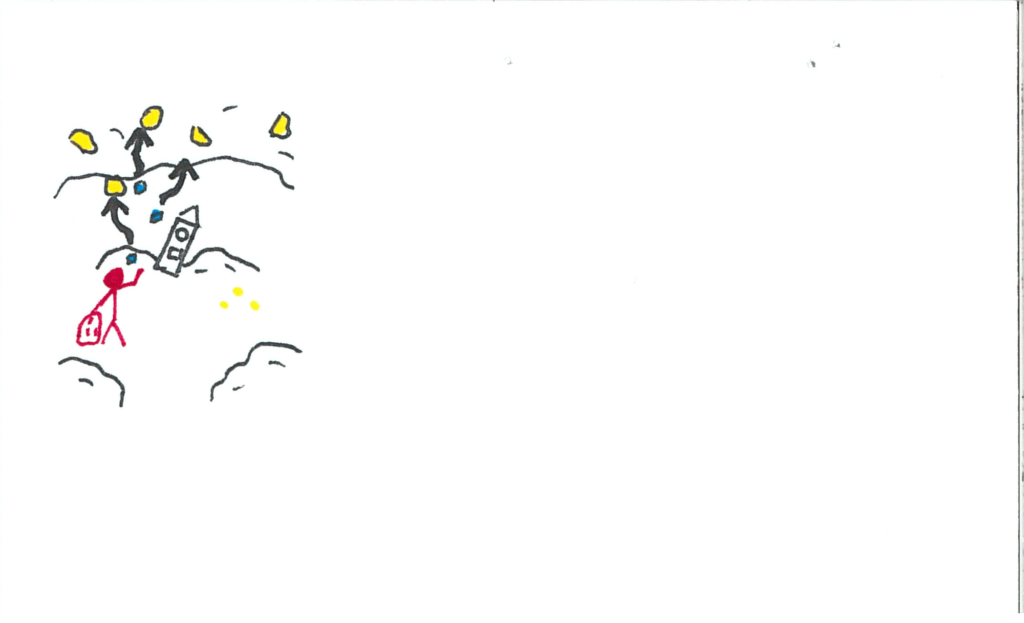

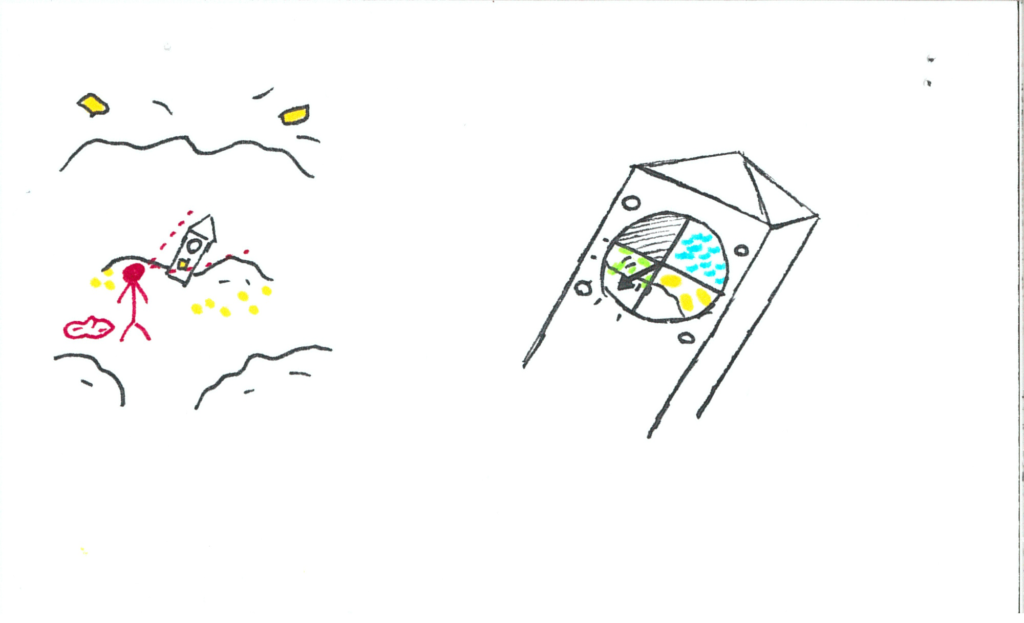

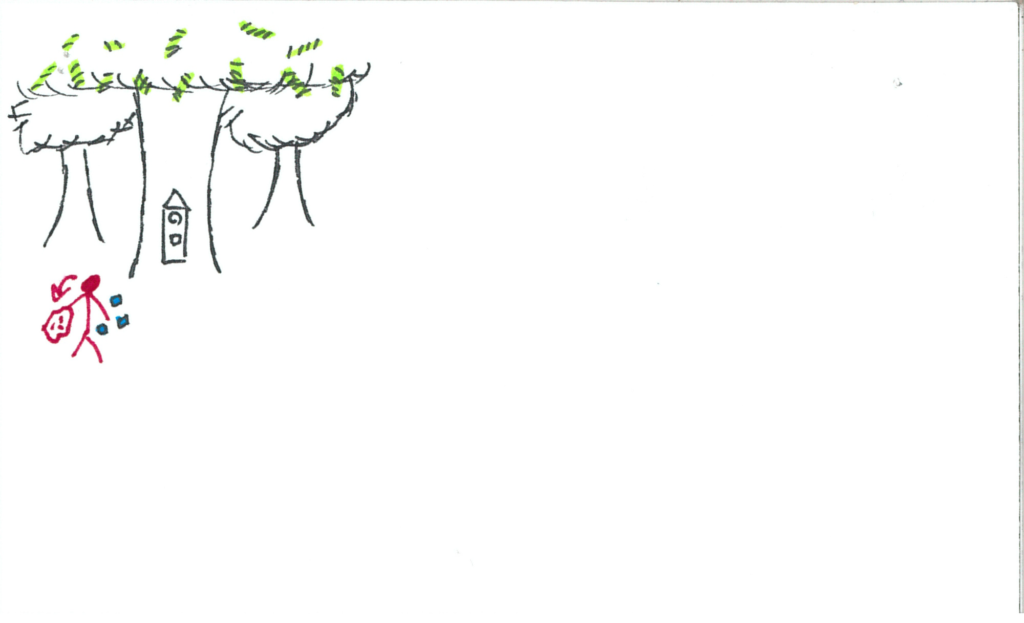

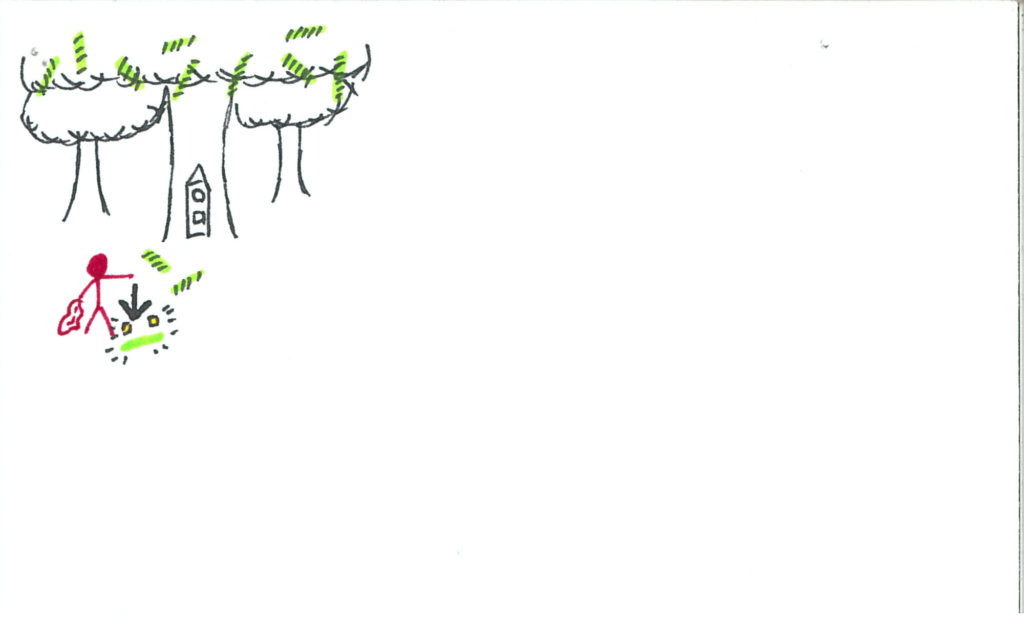

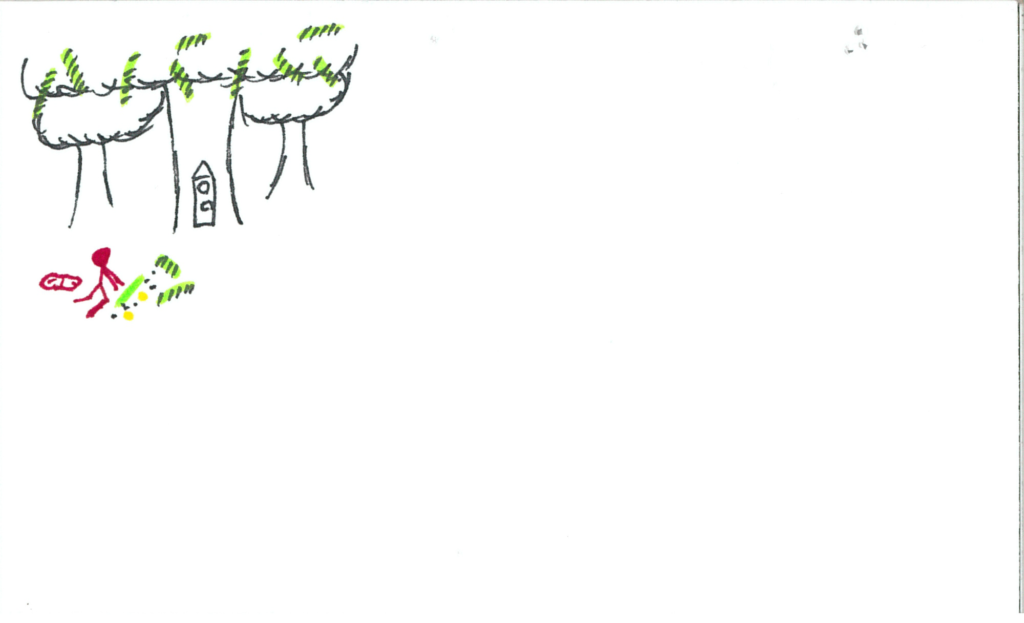

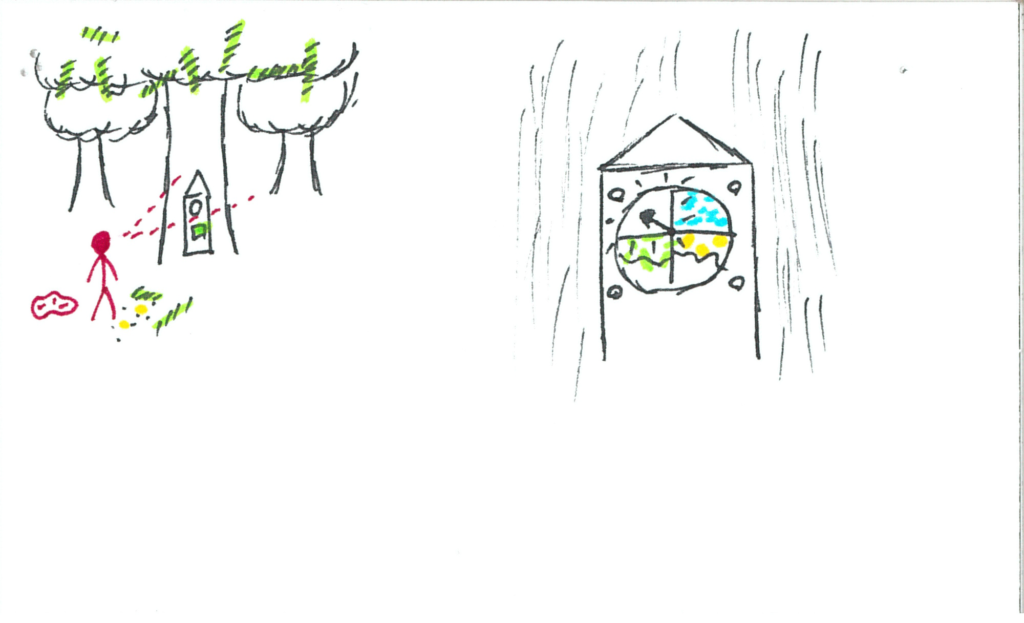

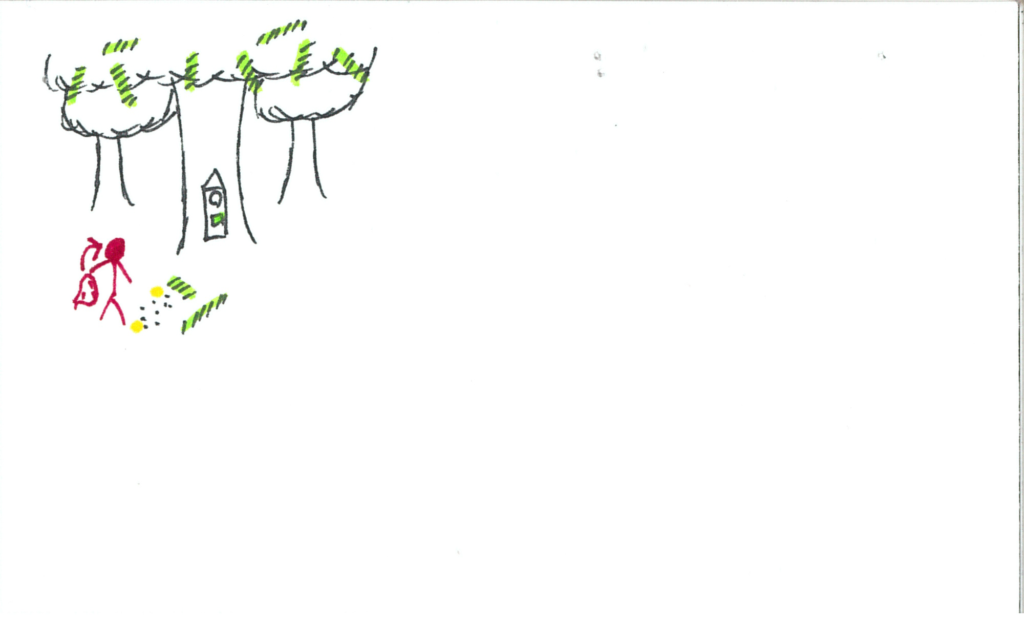

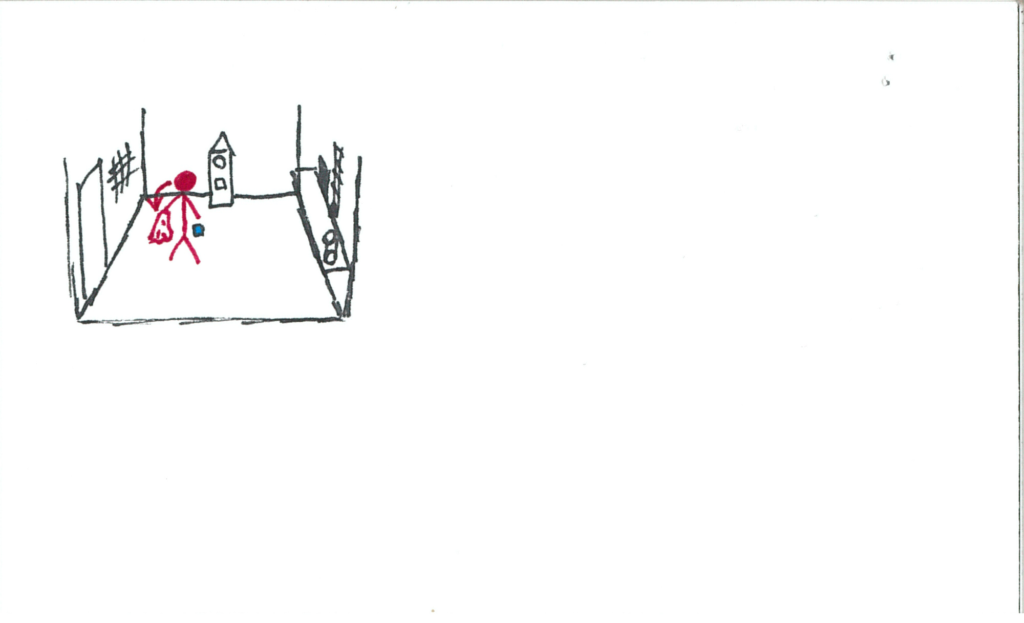

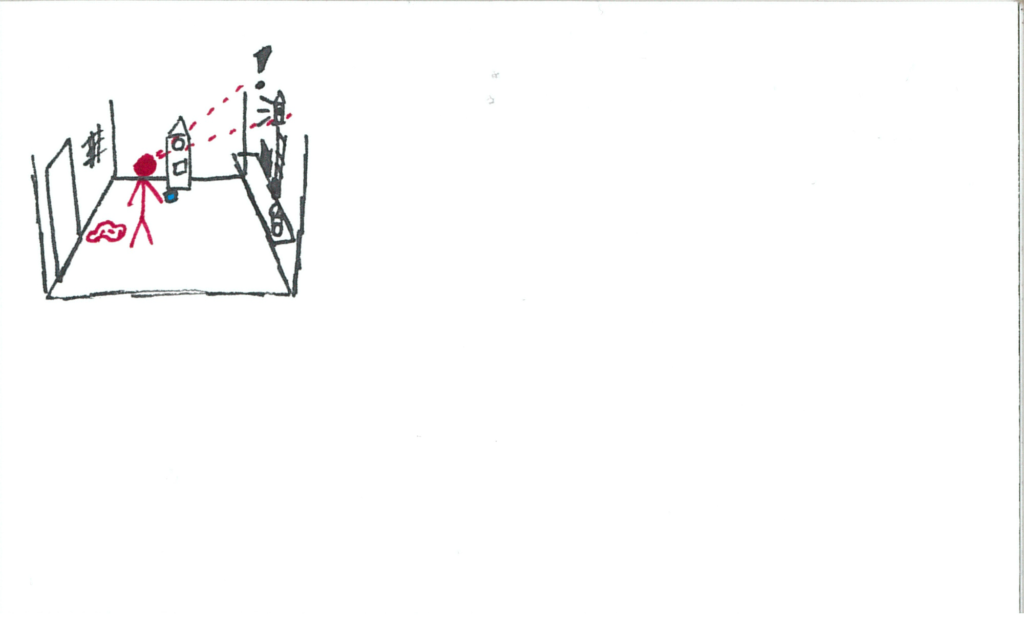

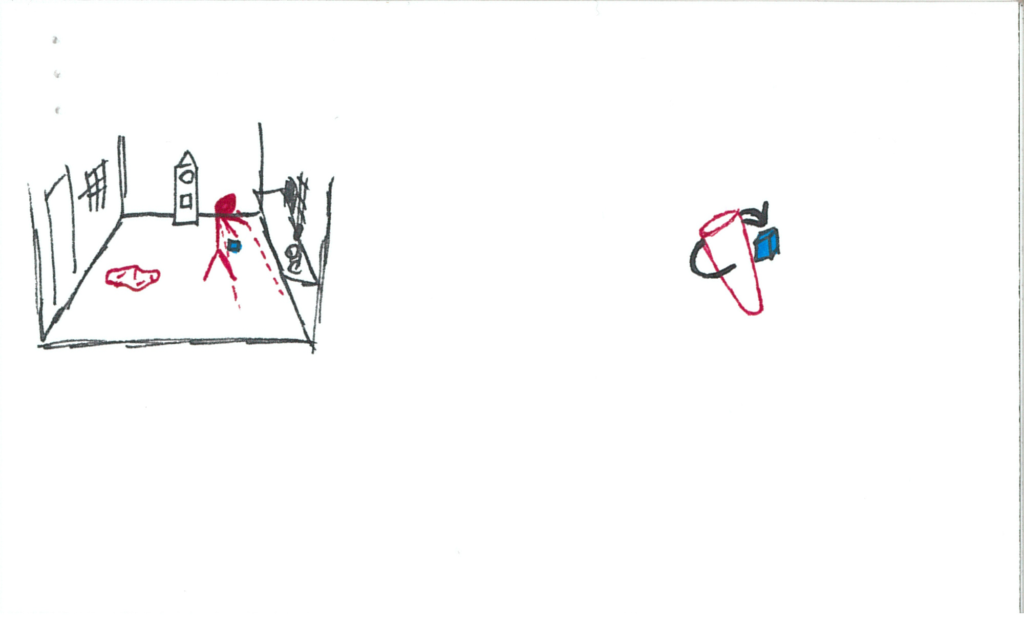

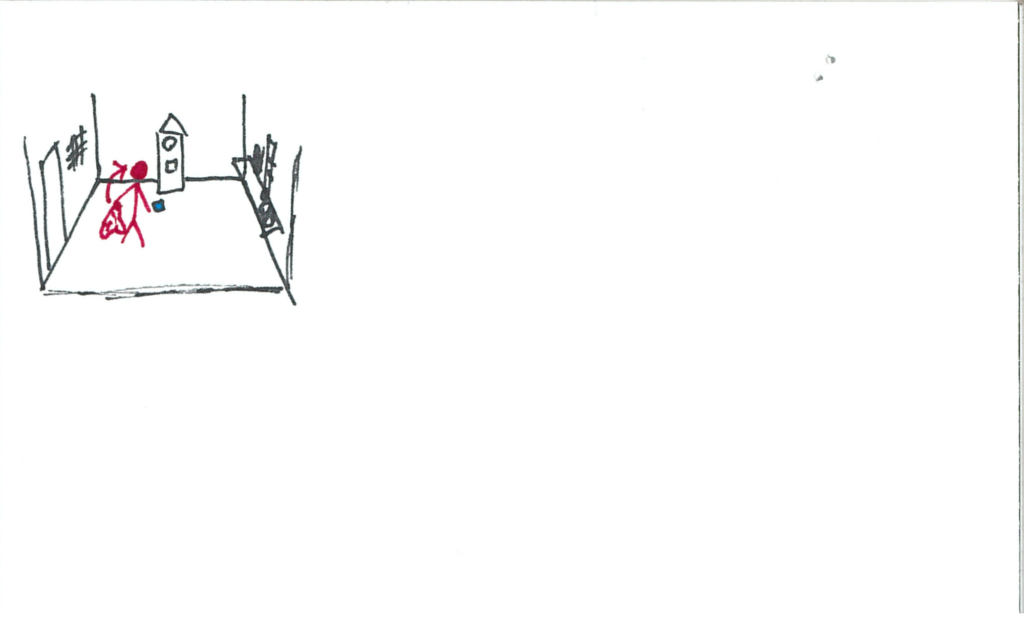

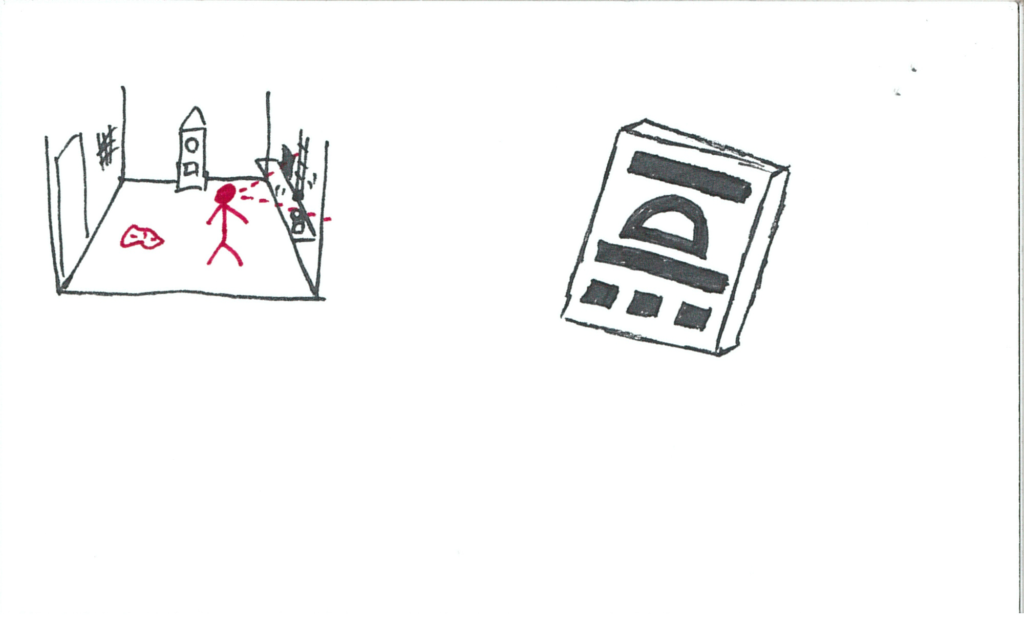

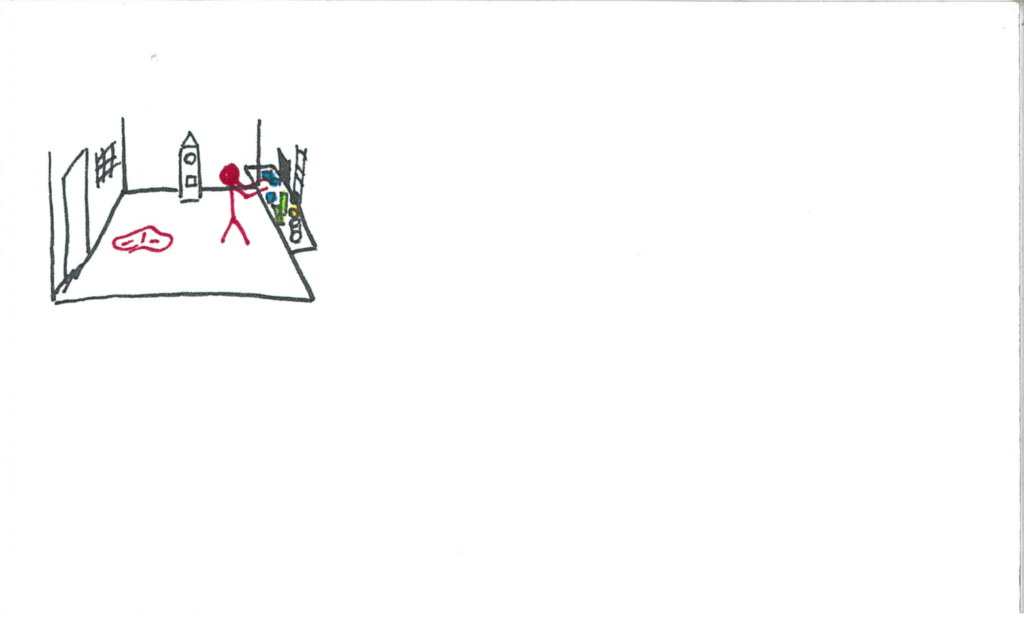

Taking inspiration from Vincent McCurley’s “Storyboarding in Virtual Reality”, I’ve created a format for storyboarding VR of my own. Below is an example:

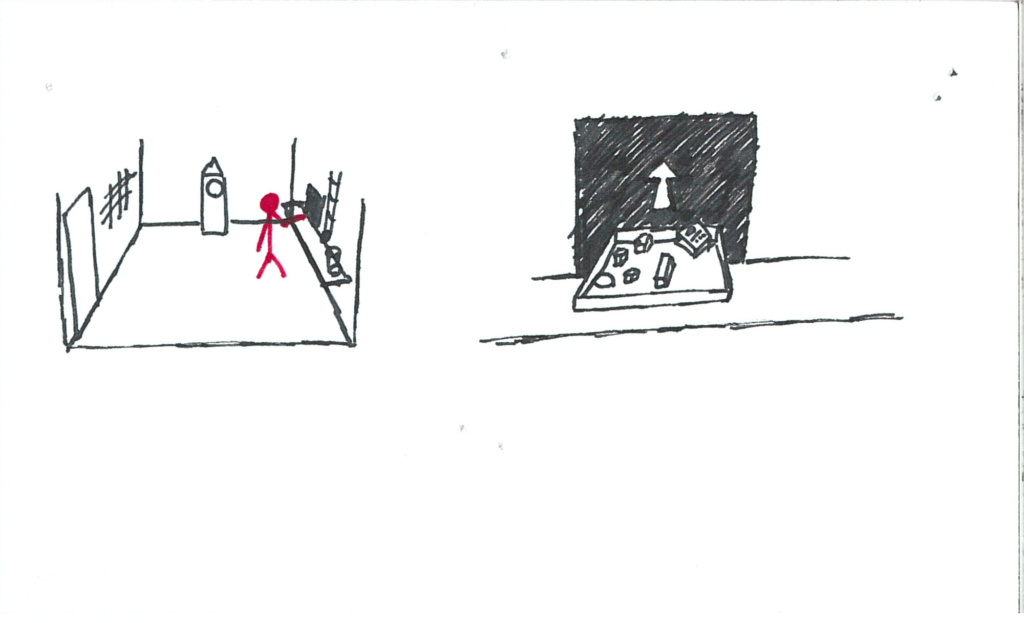

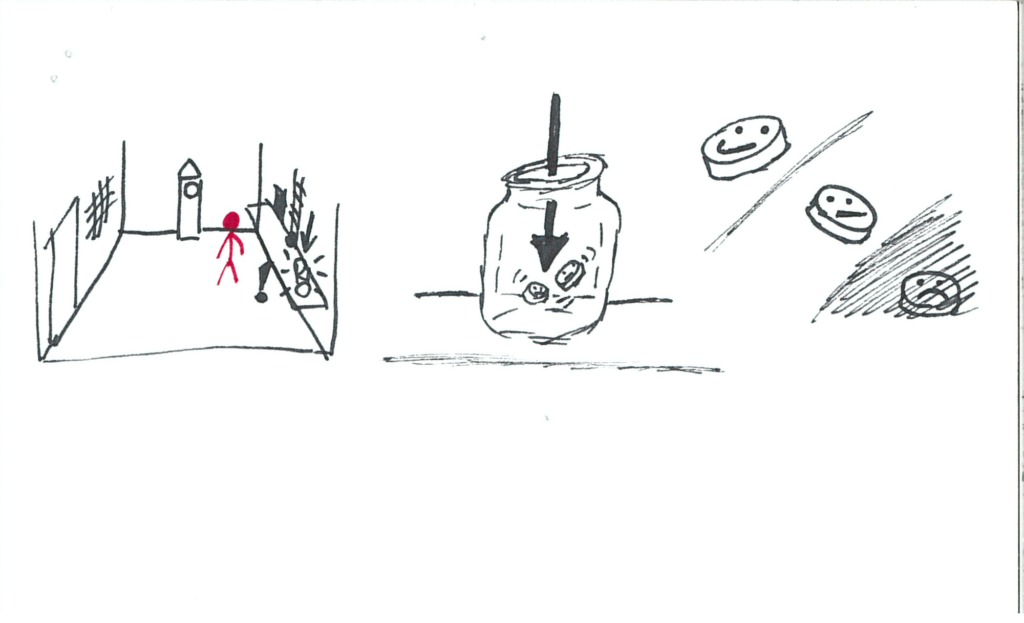

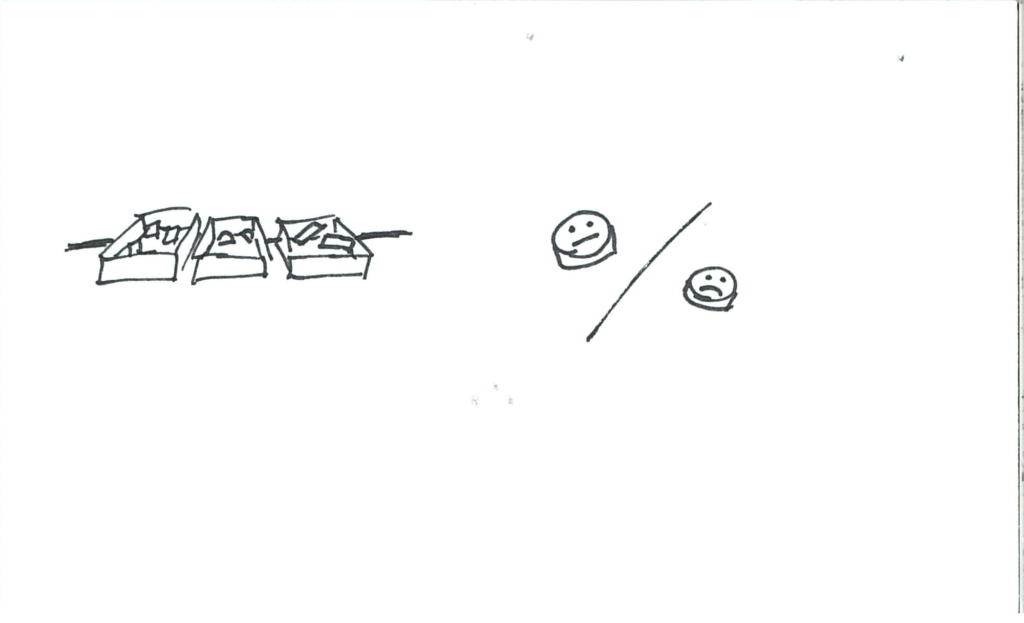

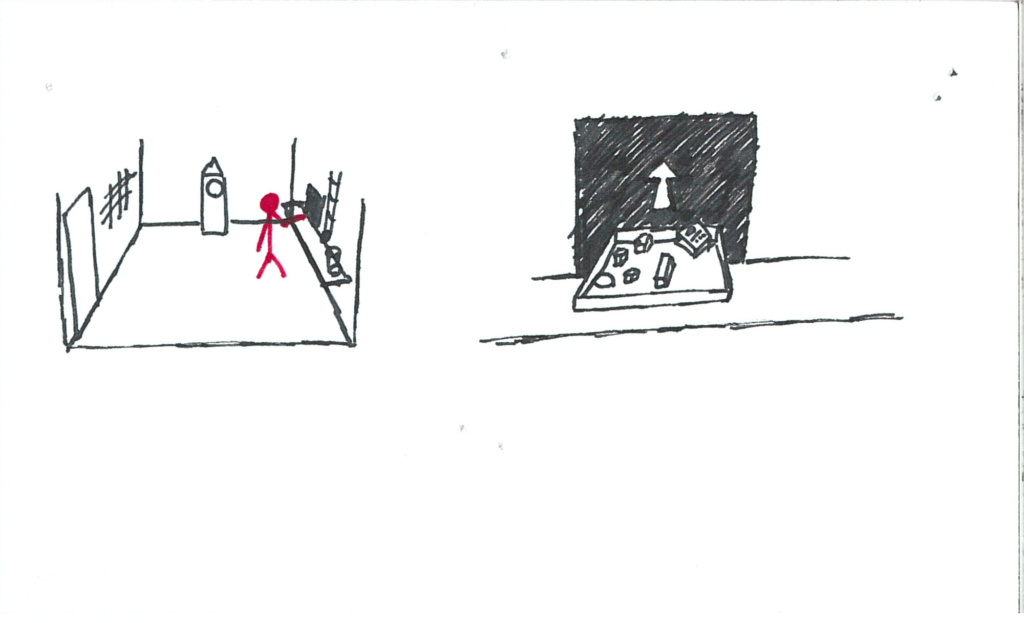

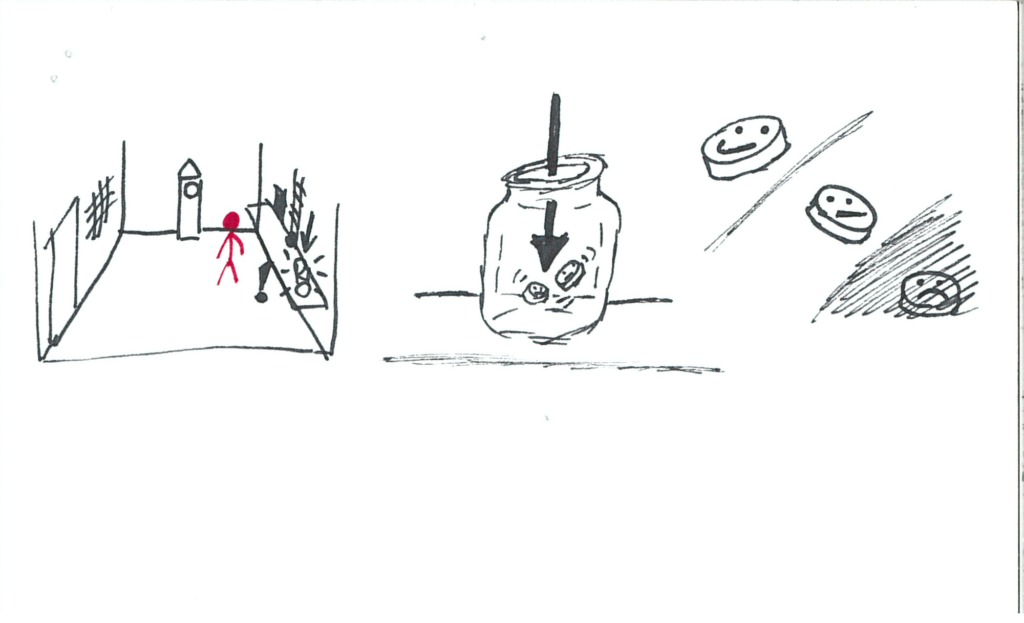

The left side of the card is usually going to be a stage-view of the user (red) in their environment. The right side will often be a frameless point-of-view perspective sketch in the event that there’s something the user needs to see/do to further the story. Other things worth noting are that interaction cues are drawn in red, often with dotted red lines denoting a field of view. This could represent an expectation of where the user will look, give reference to a perspective sketch, or mark a view-triggered event. There are gonna be exceptions to these rules, but overall, understanding this format should make the story easy to follow.
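For the curious, here’s roughly how I picture a view-triggered event working under the hood. This is only a minimal sketch and not code from the project: the function name `is_in_view`, the tuple-based vectors, and the 30° half-angle are all assumptions I made up for illustration. The idea is to check whether a target sits inside a cone around the direction the user’s head is facing, which is what those dotted red lines on the cards stand for.

```python
import math


def is_in_view(head_pos, head_forward, target_pos, half_angle_deg=30.0):
    """Return True if target_pos lies inside a cone around head_forward.

    All positions/directions are (x, y, z) tuples. The cone half-angle
    is a hypothetical tuning value, not something from the storyboard.
    """
    # Vector from the head to the target.
    to_target = tuple(t - h for t, h in zip(target_pos, head_pos))
    dist = math.sqrt(sum(c * c for c in to_target))
    fwd_len = math.sqrt(sum(c * c for c in head_forward))
    if dist == 0.0 or fwd_len == 0.0:
        return False  # degenerate input: no meaningful direction

    # Cosine of the angle between "where I'm facing" and "where it is".
    cos_angle = sum(a * b for a, b in zip(to_target, head_forward)) / (dist * fwd_len)

    # Inside the cone when the angle is at most the half-angle.
    return cos_angle >= math.cos(math.radians(half_angle_deg))


# Looking straight down +z at something 5 units ahead.
print(is_in_view((0, 0, 0), (0, 0, 1), (0, 0, 5)))
# Same head pose, but the target is off to the side.
print(is_in_view((0, 0, 0), (0, 0, 1), (5, 0, 0)))
```

A wider half-angle makes an event easier to trigger by accident, and a narrower one means the user really has to look at the thing, so that number would need tuning per event.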

And so, Somniat begins:

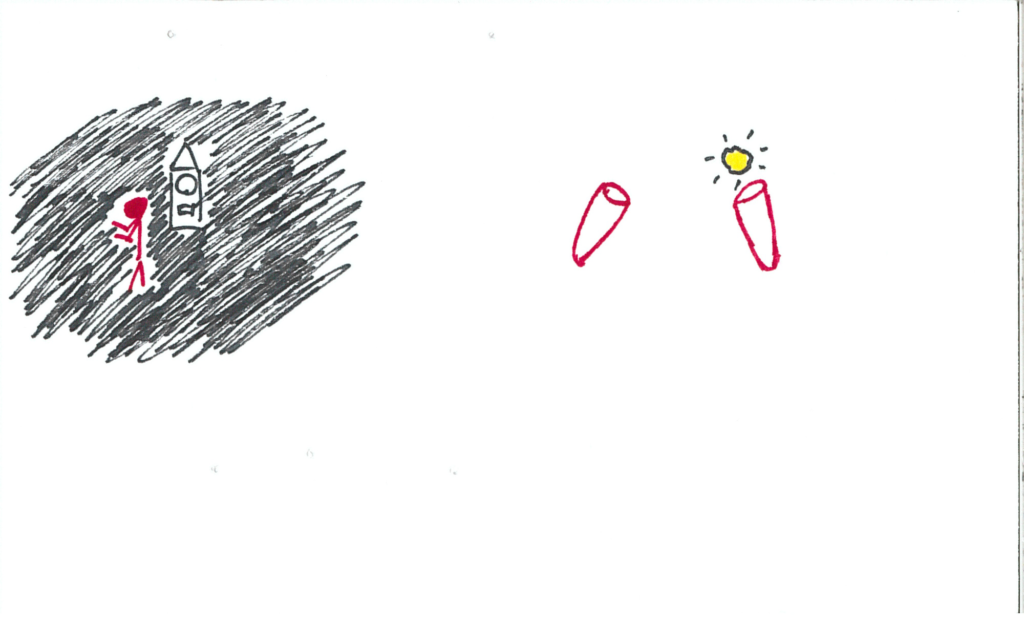

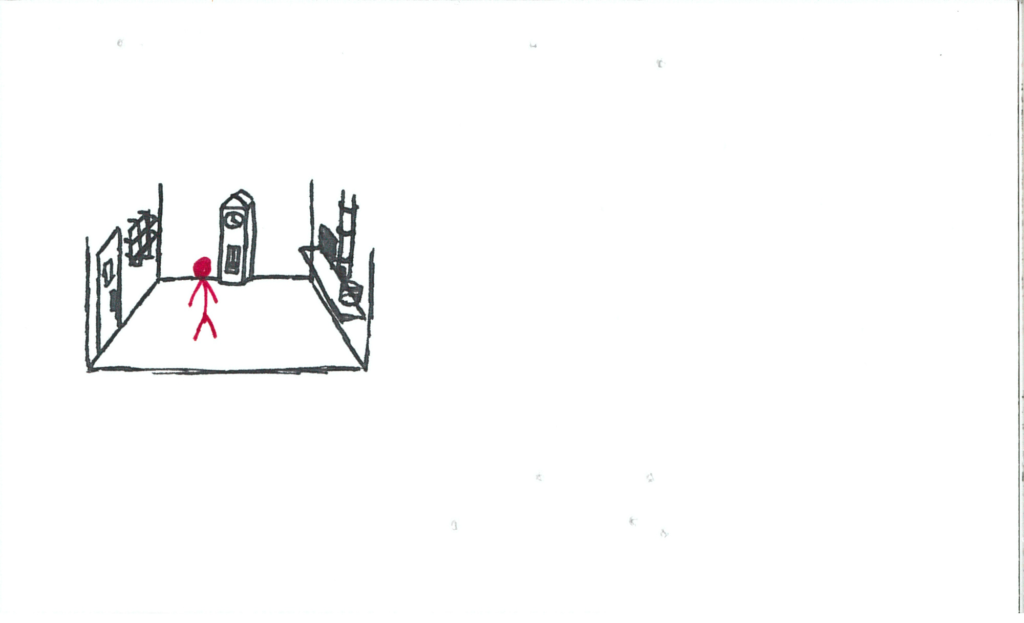

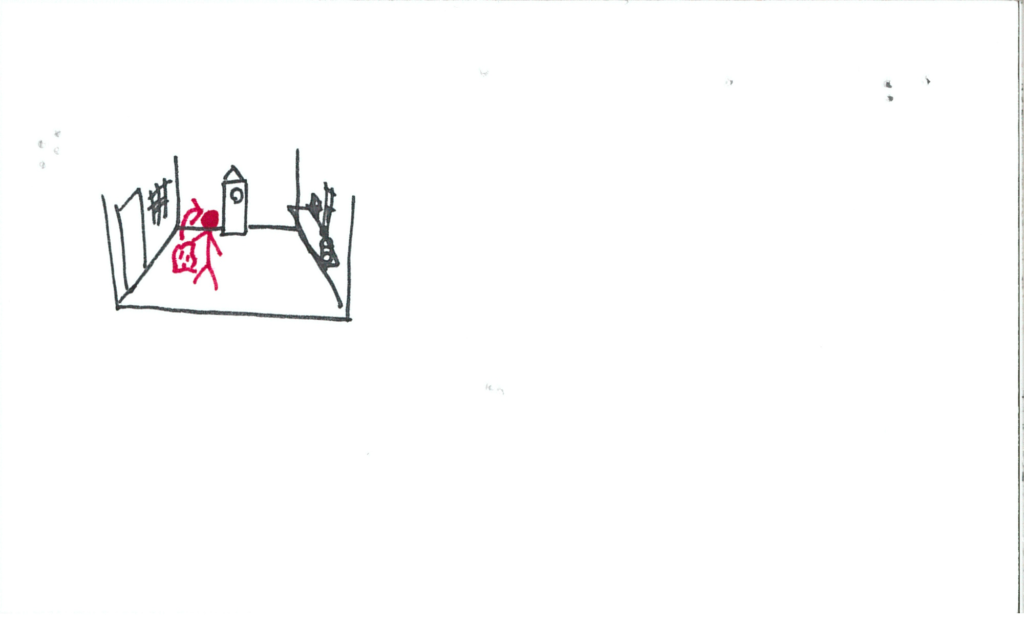

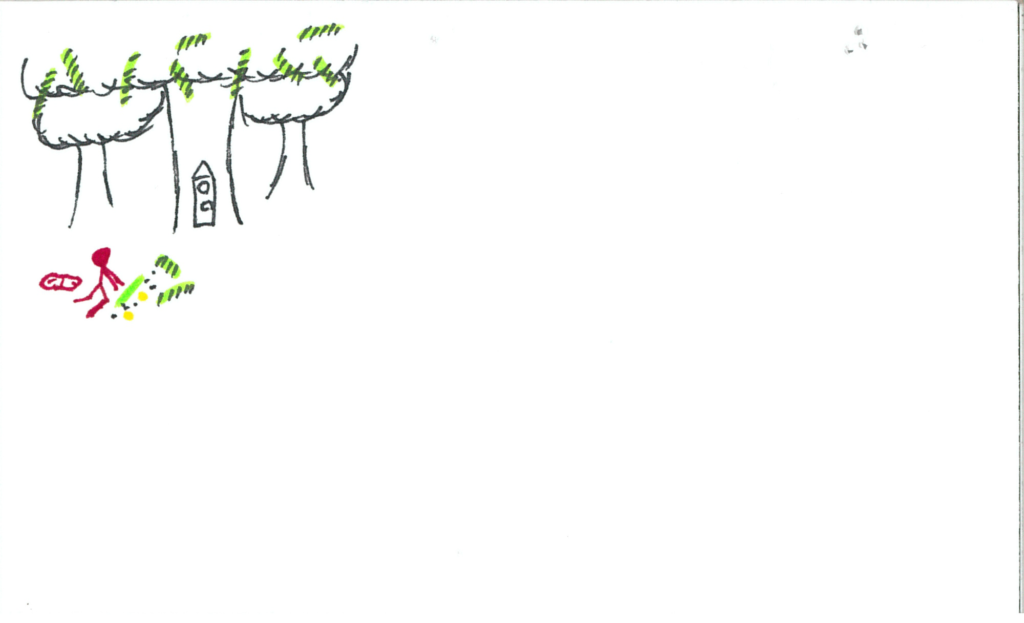

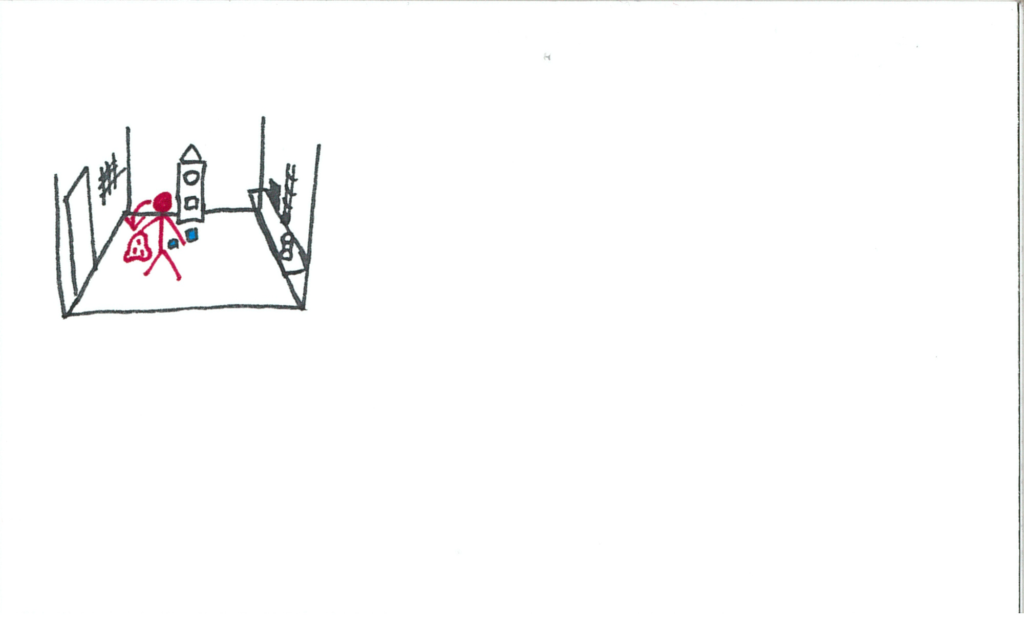

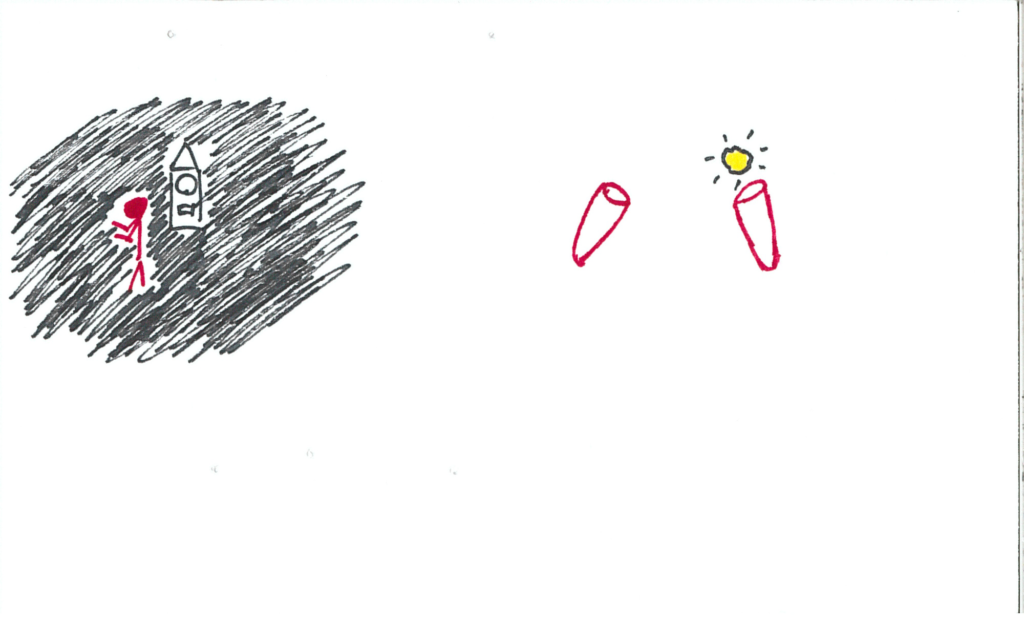

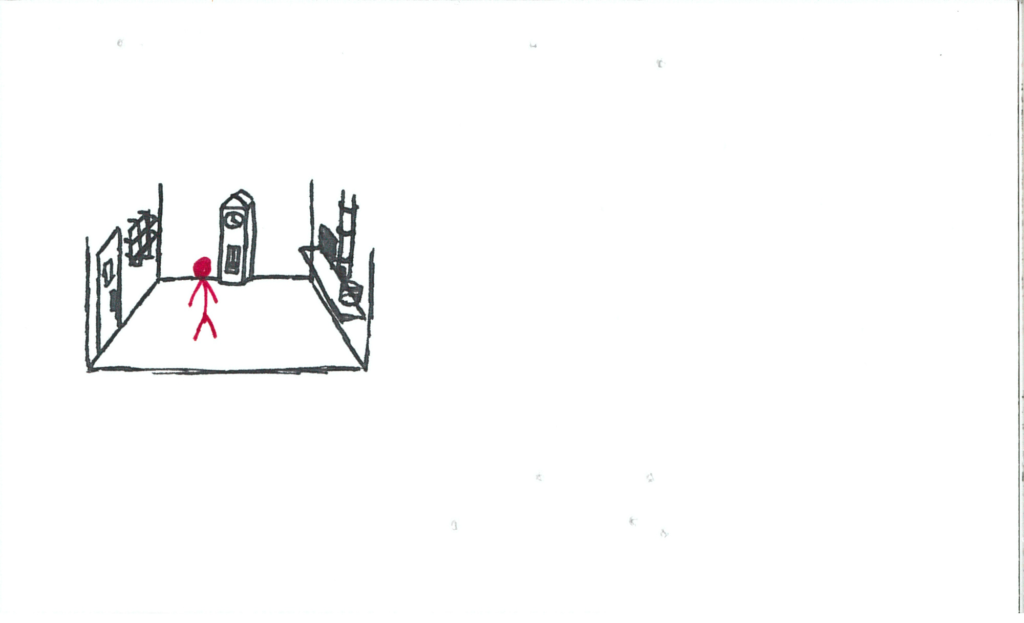

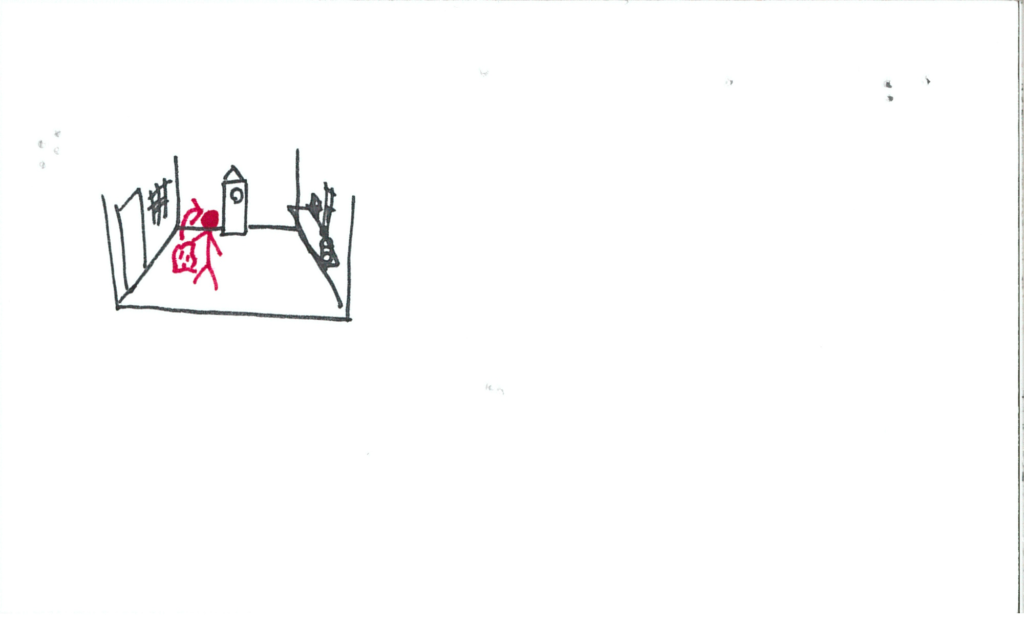

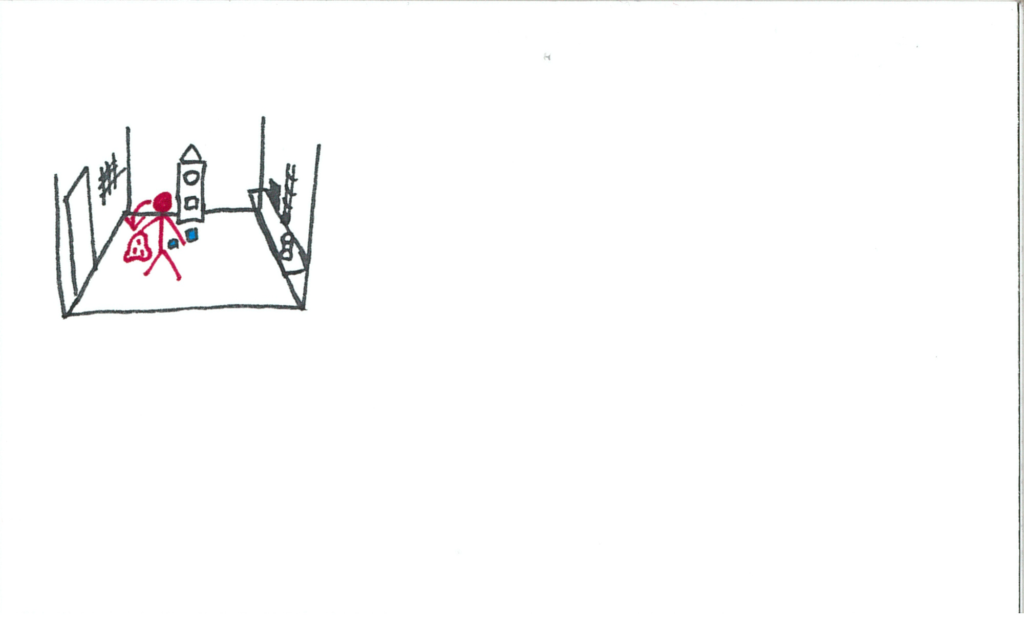

The user starts the experience in a dark void. This is the “menu screen,” if you will. Front and centre is a grandfather clock.

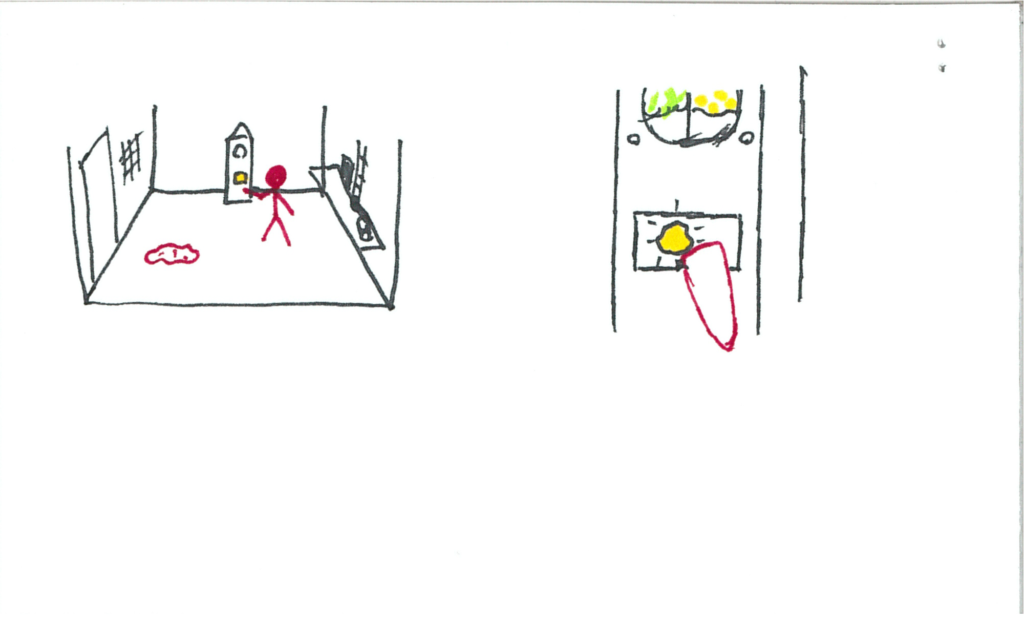

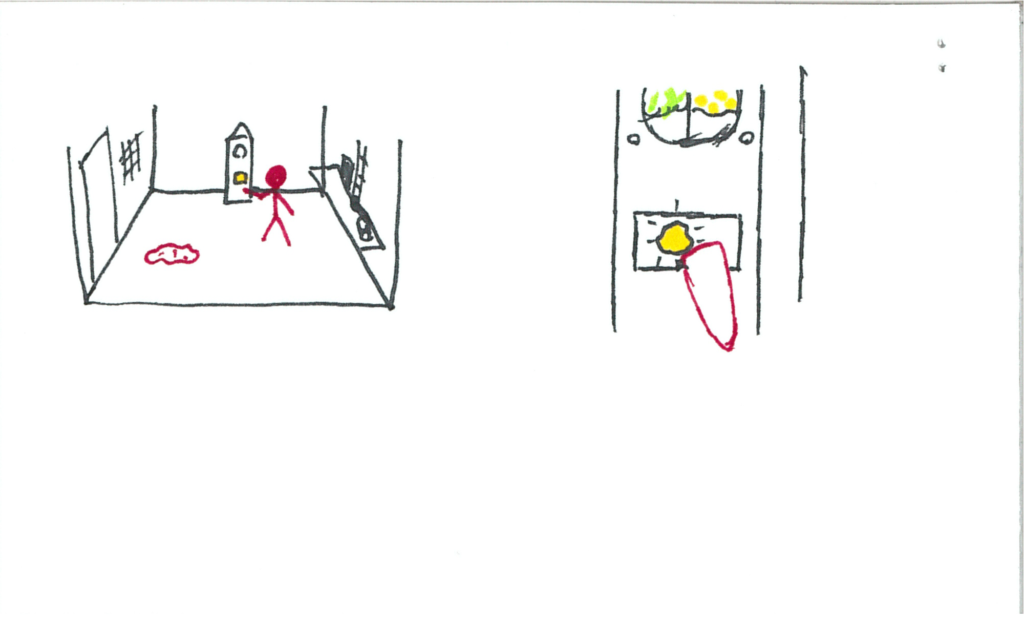

Looking at their hands/controllers, the user finds a glowing yellow orb in one of them. The clock has a slot with a dim yellow light in it.

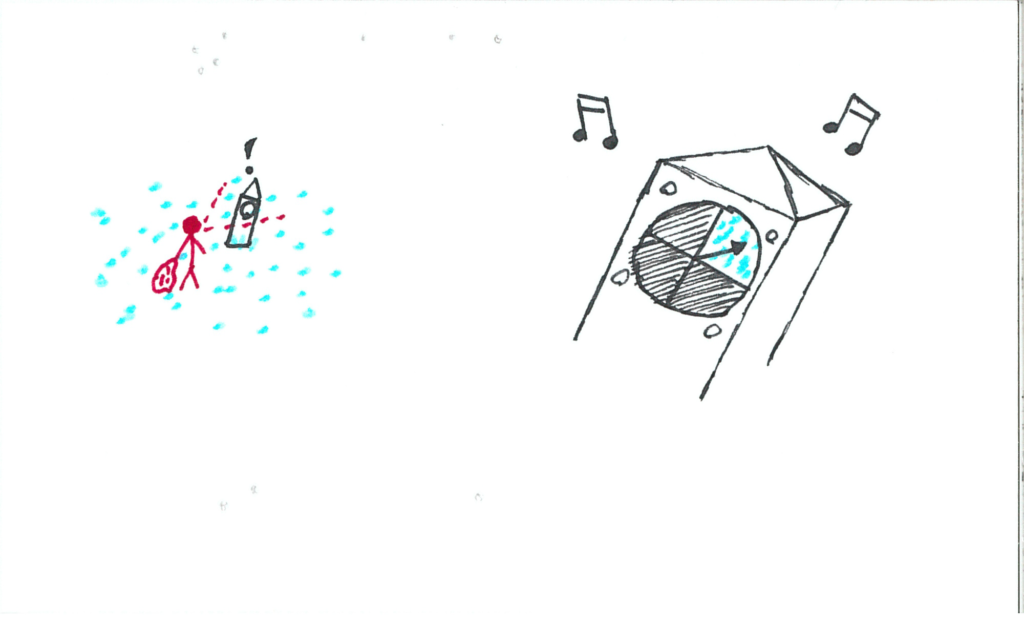

The user inserts the orb into the slot. Music begins to play and the clock slowly lights up.

Introductory credits appear around the user in the void as the clock lights up.

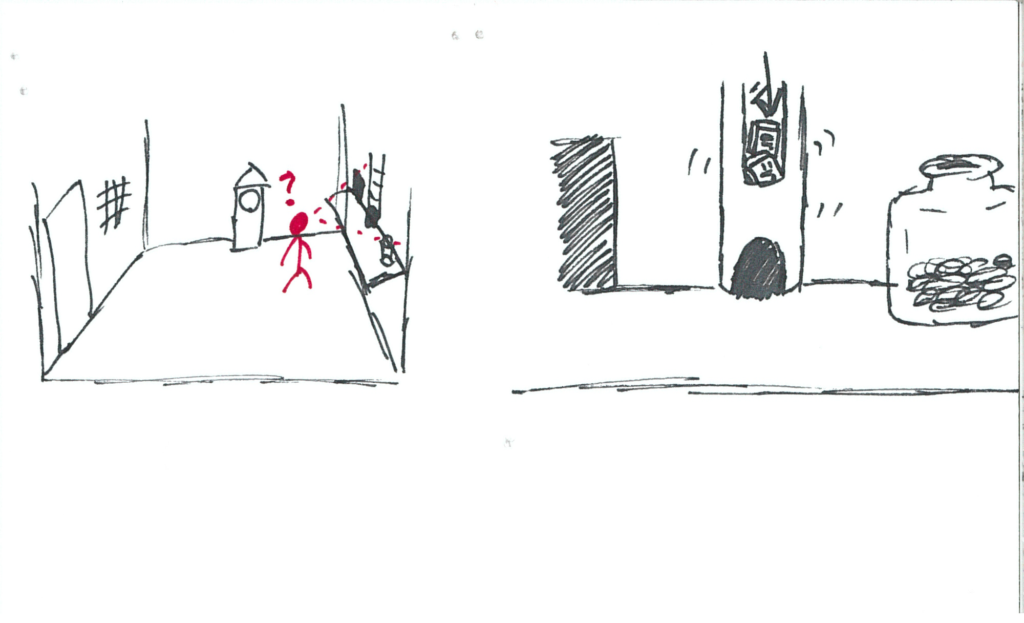

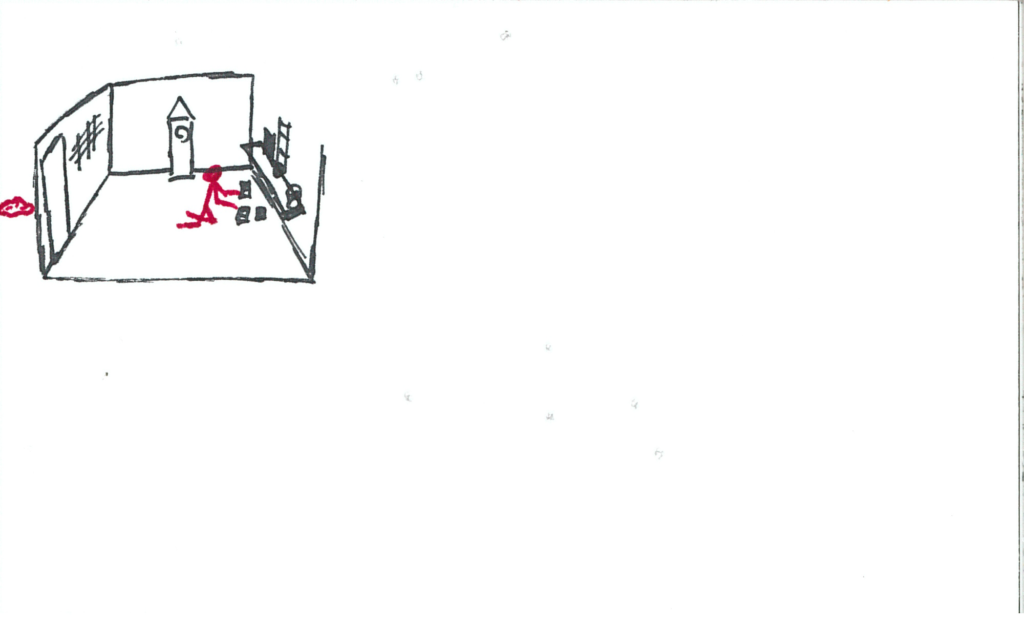

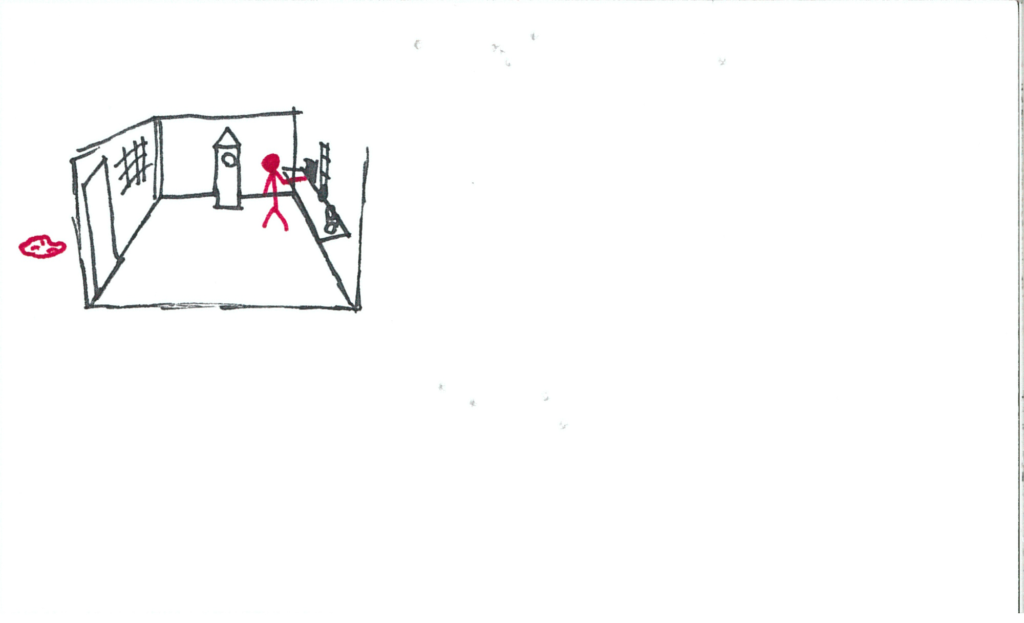

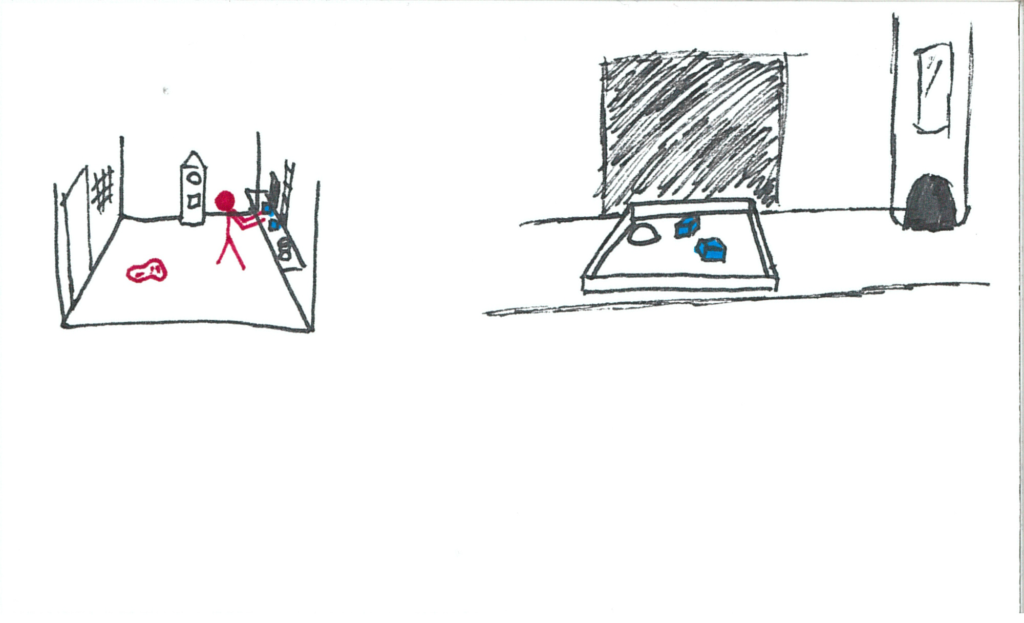

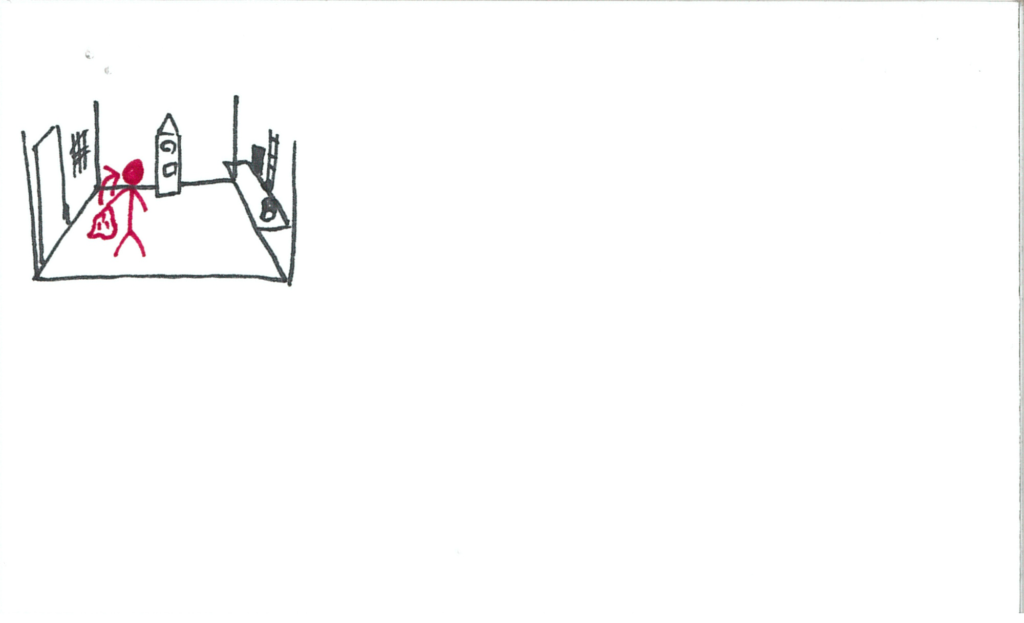

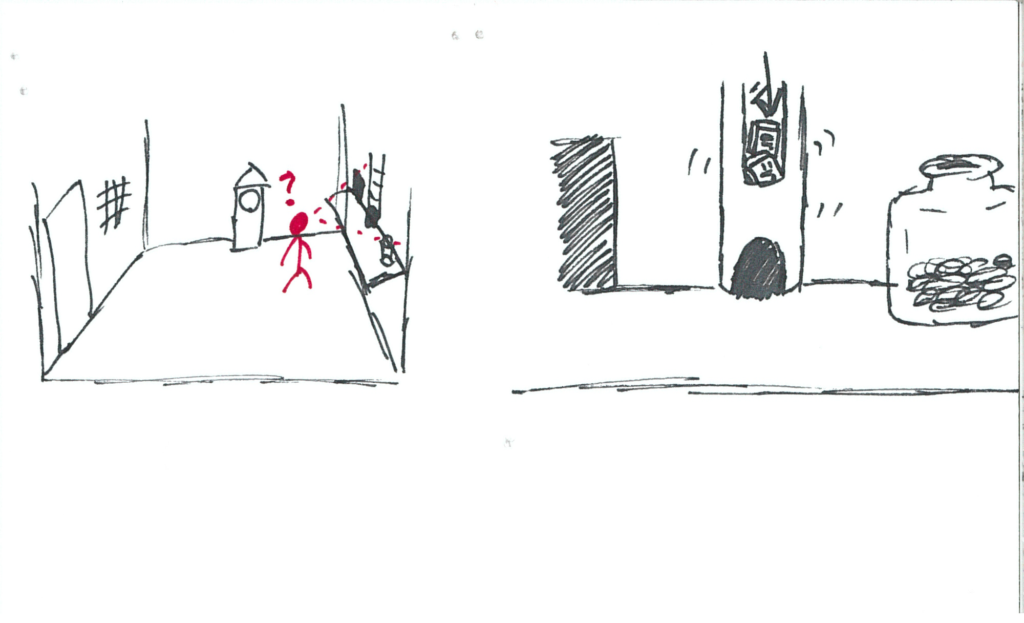

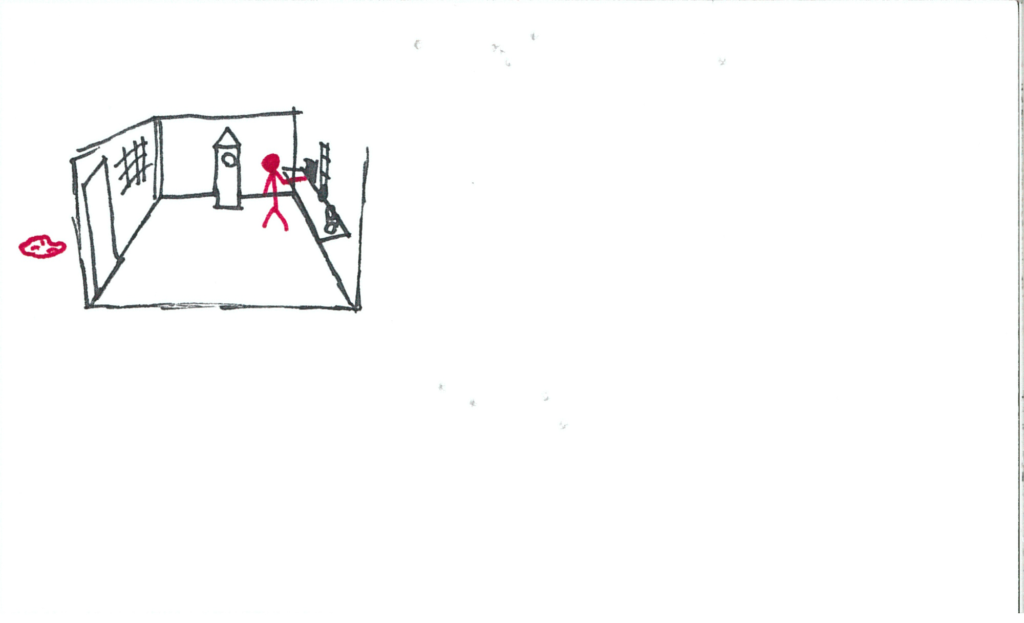

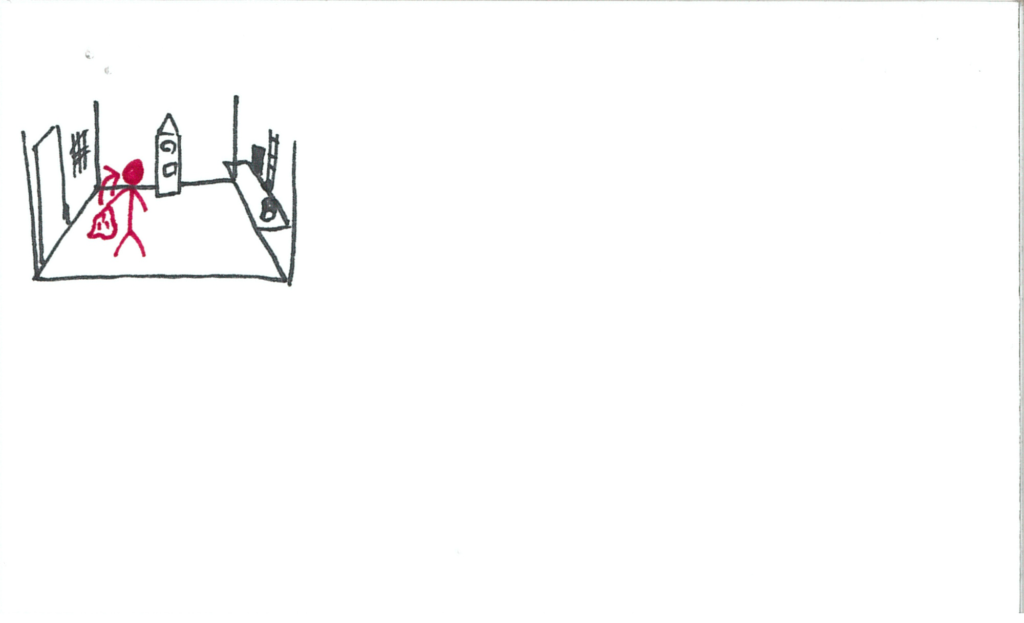

The music uncomfortably cuts and the user finds themselves in a small industrial room made of muted and cold colors. The grandfather clock is still to the front, with a workstation to the right and a door and barred window to the left.

The clock chimes. Its hand ticks from “nighttime” to “work”.

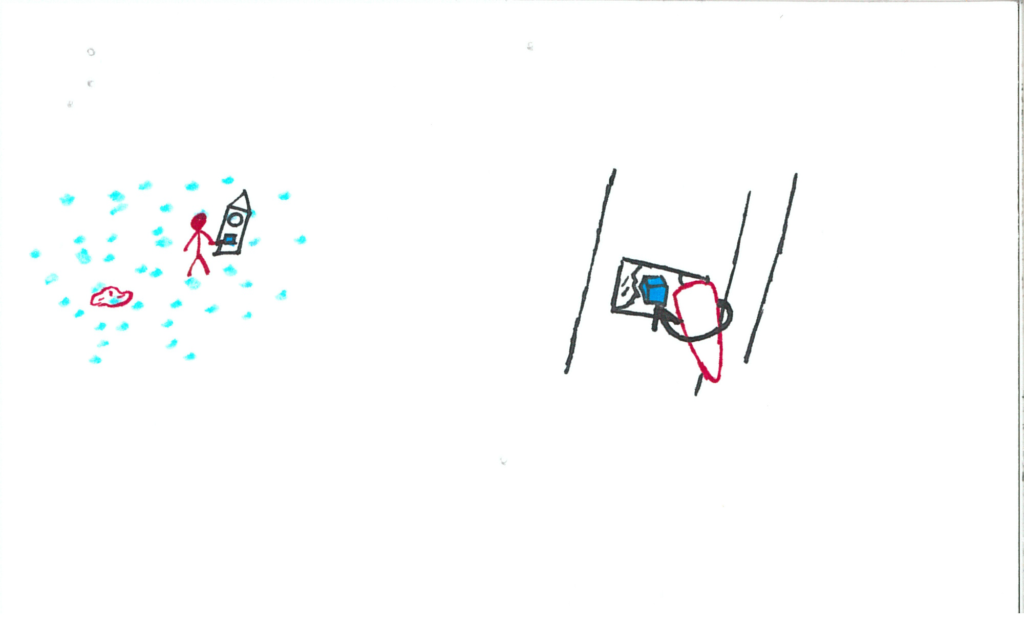

A whistle above the workstation sounds. A wooden block/tag falls down the pipe.

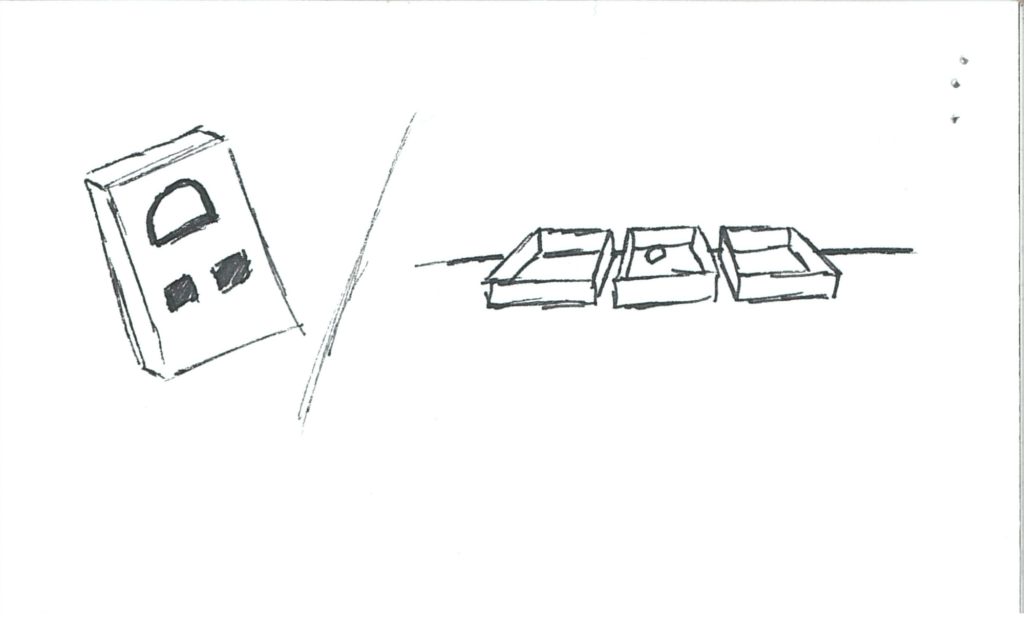

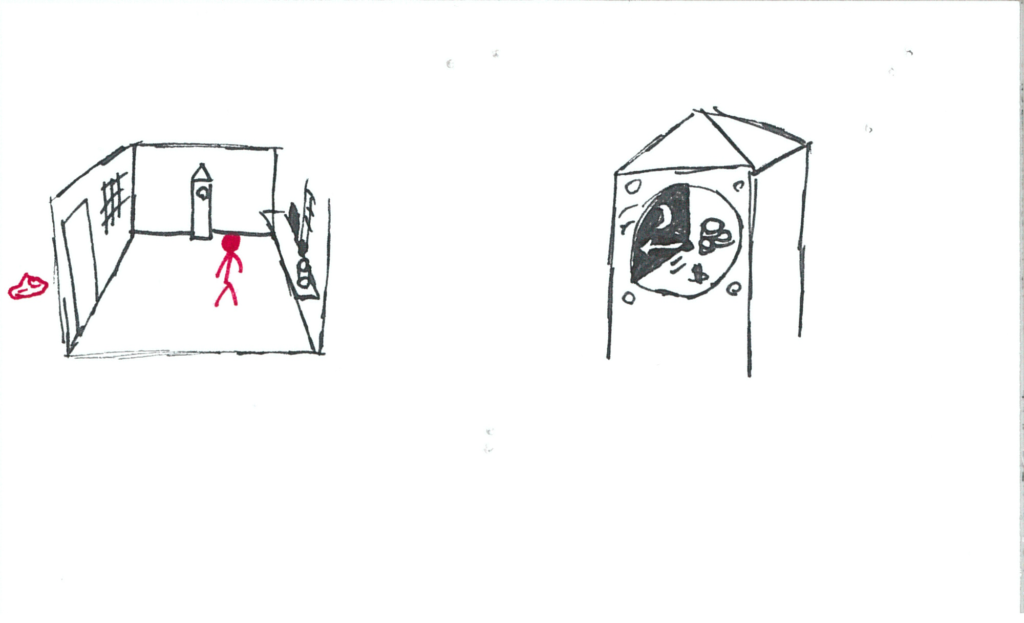

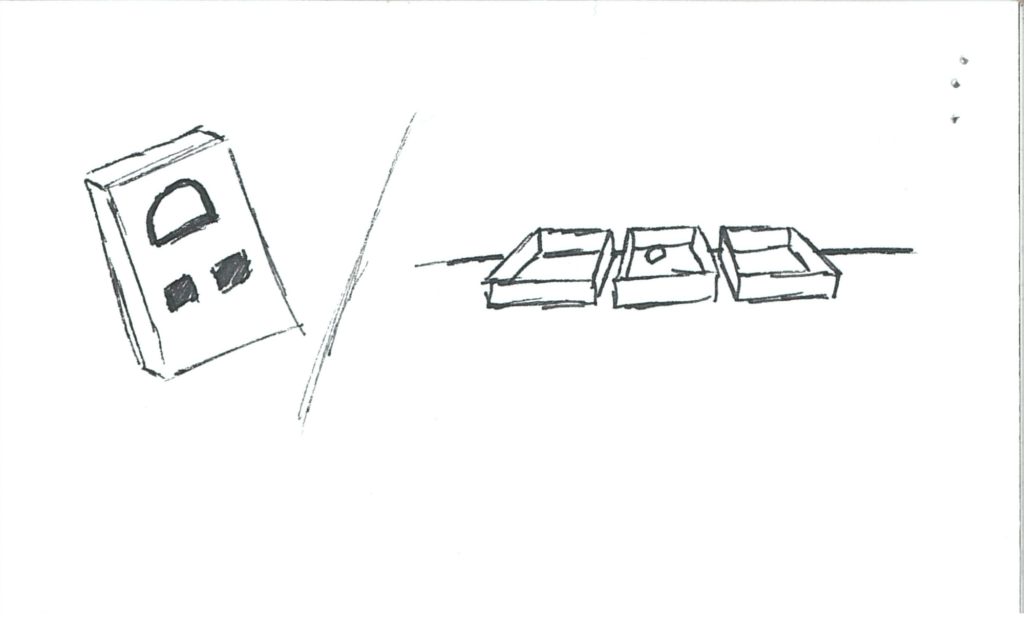

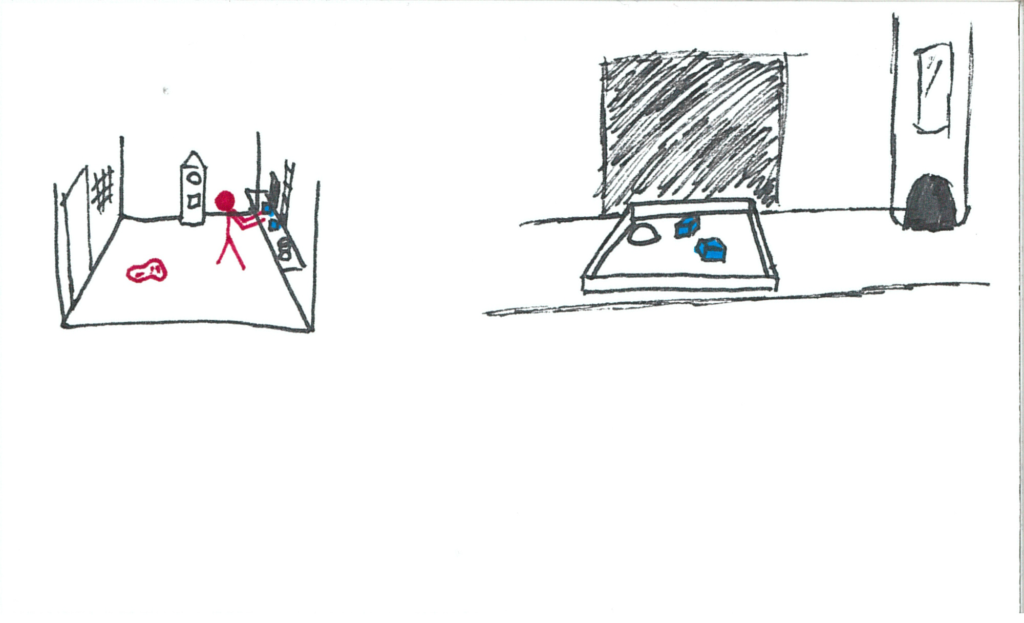

The tag has basic geometric shapes on it (square, semicircle, or line). Below the main workstation shelf is a second shelf with 3 bins on it. Inside these bins are basic geometric solids (cubes, hemispheres, rods).

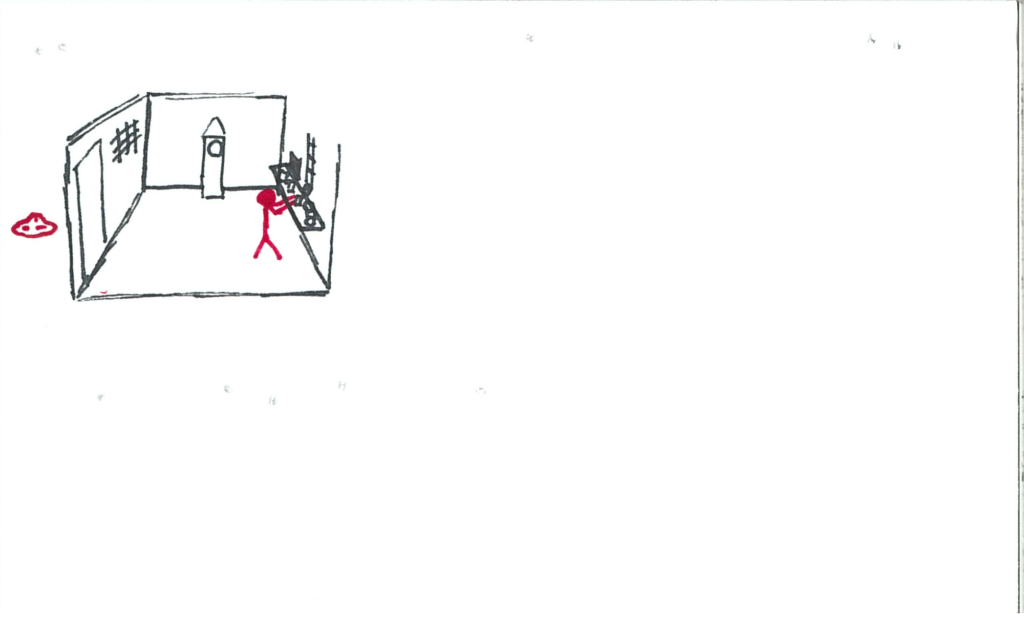

The user places the geometric objects requested by the tag onto a tray that’s travelling on a conveyor. It leaves the room.

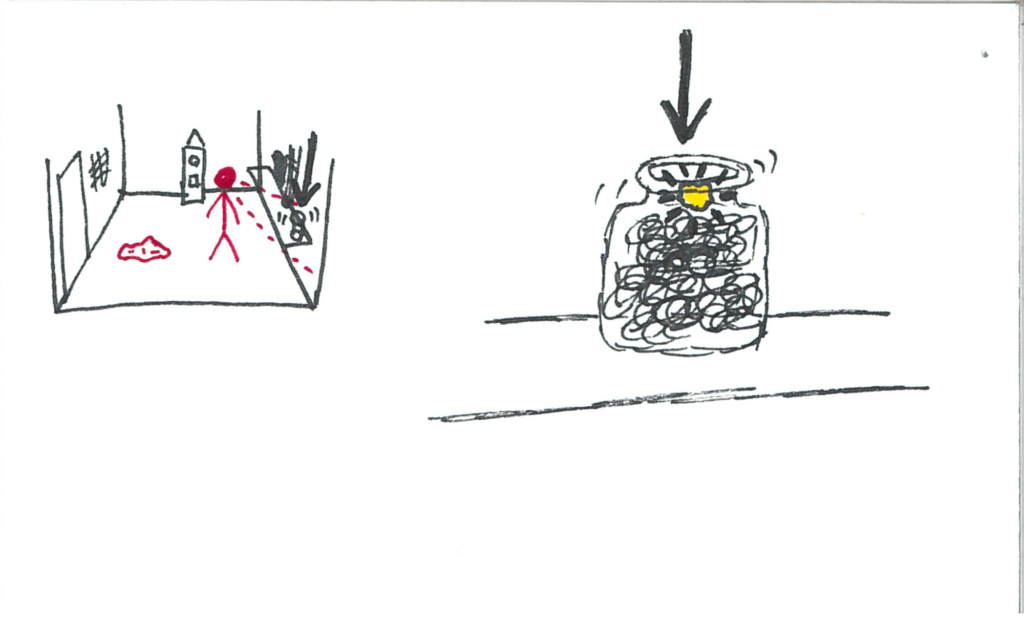

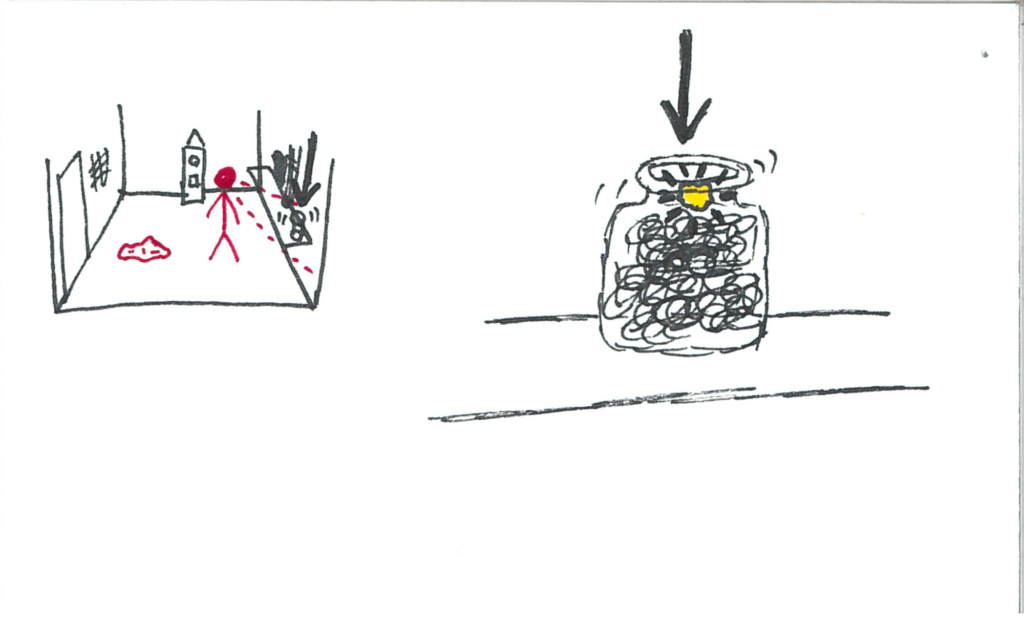

Money falls down into a jar on the edge of the workstation shelf. The coins have emotions drawn on them.

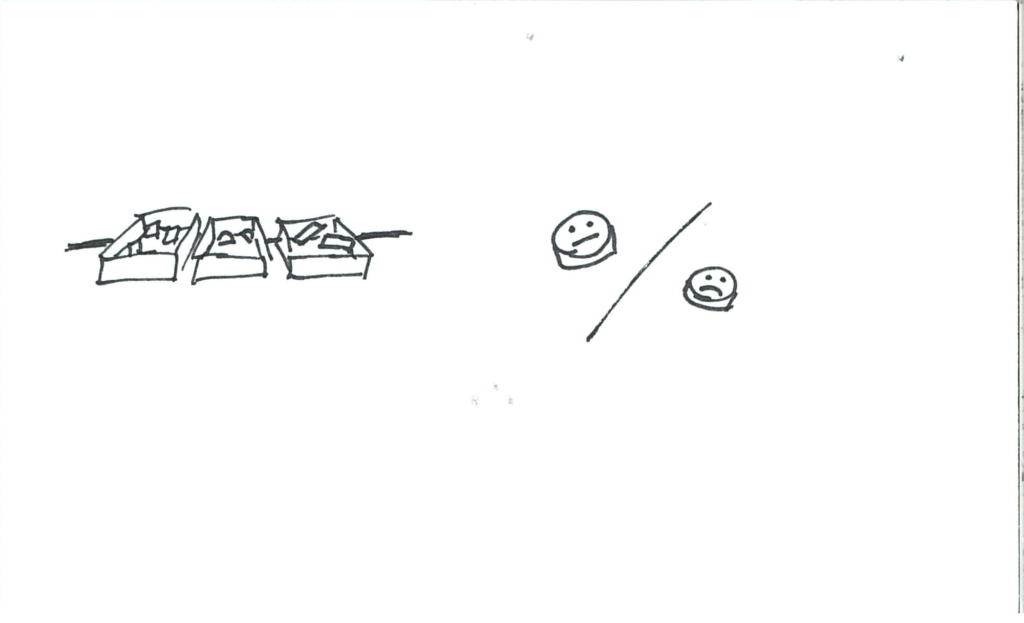

The user continues fulfilling orders, but supplies start running low. Customers are giving less satisfactory emotions.

There are nearly no resources left and the user is forced to send out incomplete orders. Customers are very dissatisfied.
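To make the order mechanic a bit more concrete, here’s a small sketch of how a tray could be scored against its tag and turned into the emotion on the coin. None of this is from the actual build: the names `score_order` and `coin_emotion`, the three emotion labels, and the thresholds are all placeholder assumptions.

```python
from collections import Counter


def score_order(requested, delivered):
    """Return the fraction (0.0 to 1.0) of requested pieces found on the tray.

    Both arguments are lists of shape names such as "cube", "hemisphere",
    or "rod". Extra pieces on the tray are simply ignored in this sketch.
    """
    want = Counter(requested)
    have = Counter(delivered)
    total = sum(want.values())
    if total == 0:
        return 1.0  # an empty tag is trivially fulfilled
    matched = sum(min(count, have[shape]) for shape, count in want.items())
    return matched / total


def coin_emotion(score):
    """Map a score to the face drawn on the coin (hypothetical thresholds)."""
    if score >= 1.0:
        return "happy"
    if score >= 0.5:
        return "neutral"
    return "unhappy"


tag = ["cube", "cube", "rod", "hemisphere"]
tray = ["cube"]  # supplies have run low
print(coin_emotion(score_order(tag, tray)))
```

As the bins empty out, the same tags naturally start producing worse coins, which is the slide from satisfied to dissatisfied customers described above.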

The whistle blows as usual, however no tag arrives.

More tags fall down the pipe but do not come out.

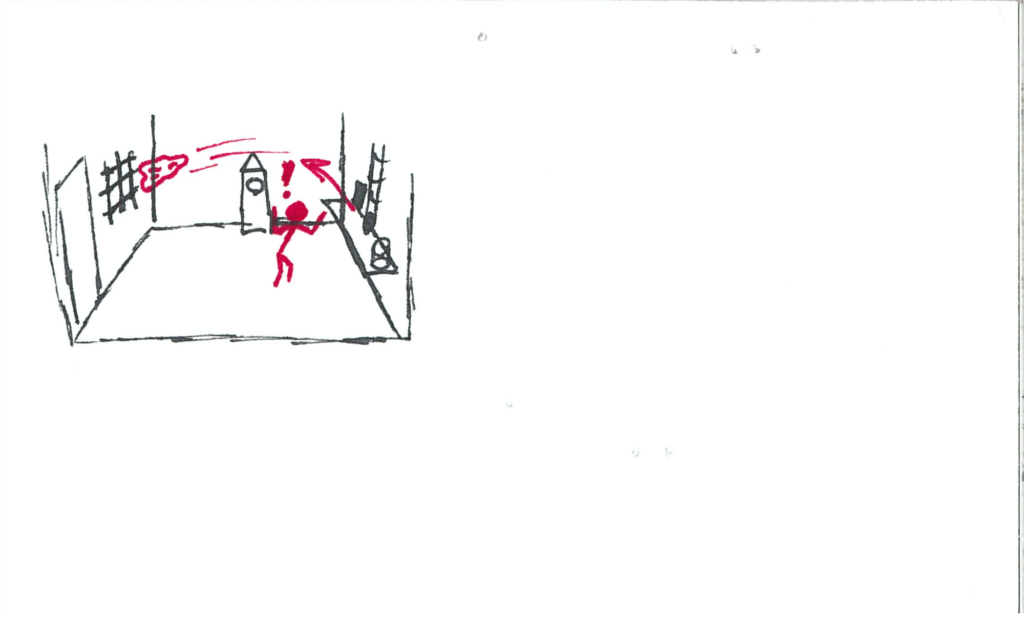

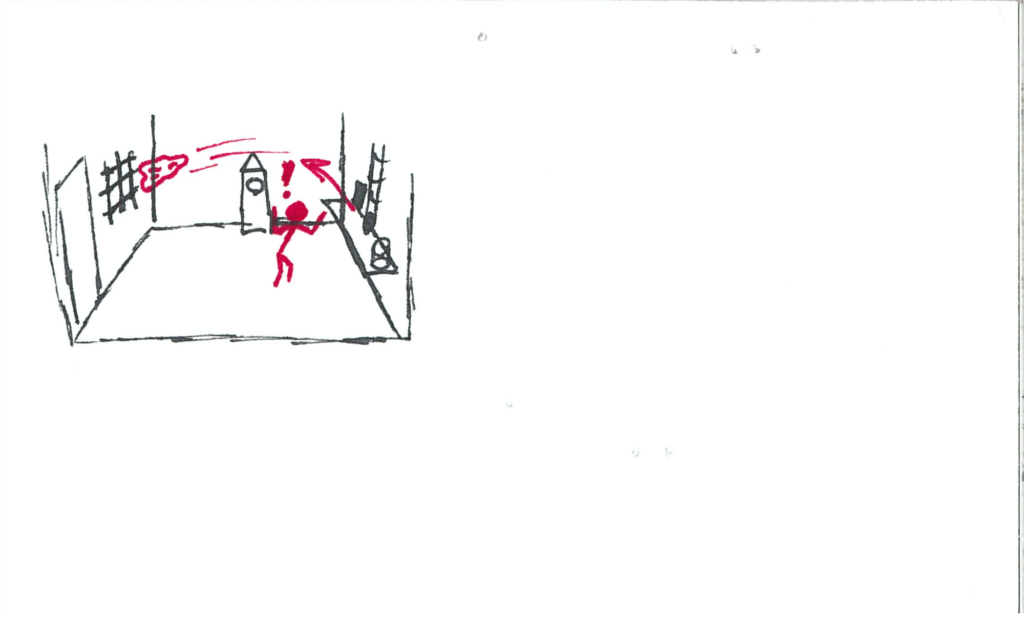

A vibrant red blanket shoots out of the pipe and is launched across the room!

It gets propelled through the grated window, out of the user’s reach.

All the work orders that were backed up come spilling out, and many of their trays are almost through the conveyor belt.

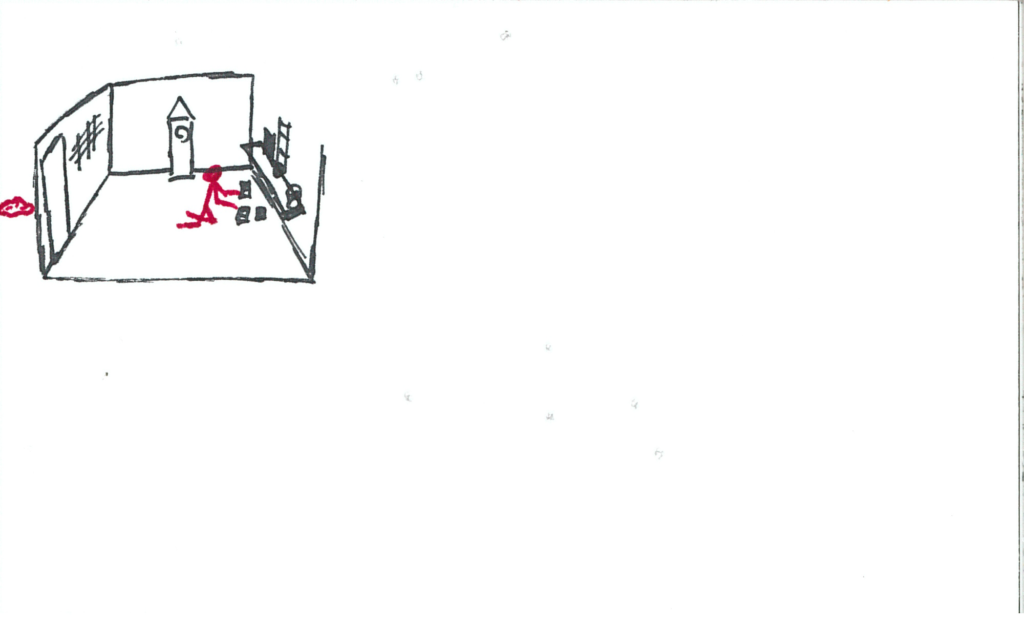

The user scrambles to fulfill all the orders, but they have virtually no resources.

Everyone’s really unhappy with the user’s work.

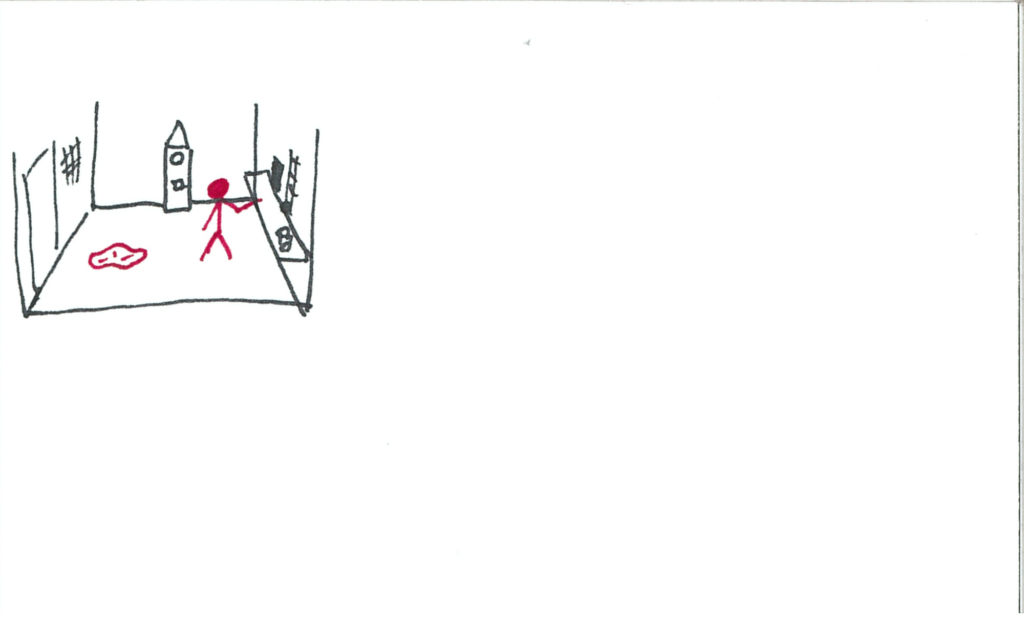

No further work orders come after that onslaught. The clock has ticked over back to “nighttime”.

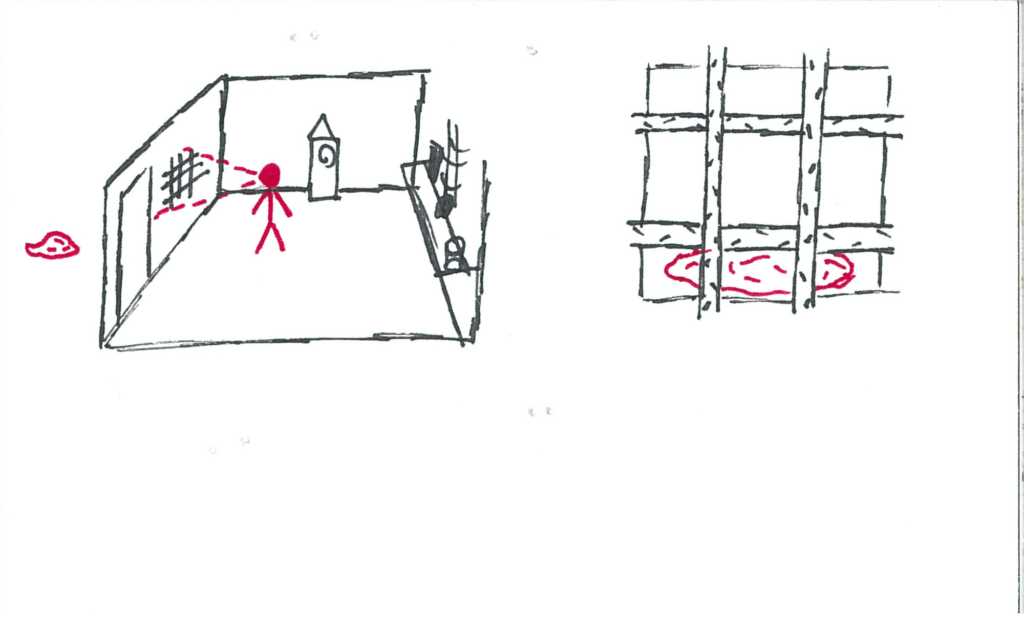

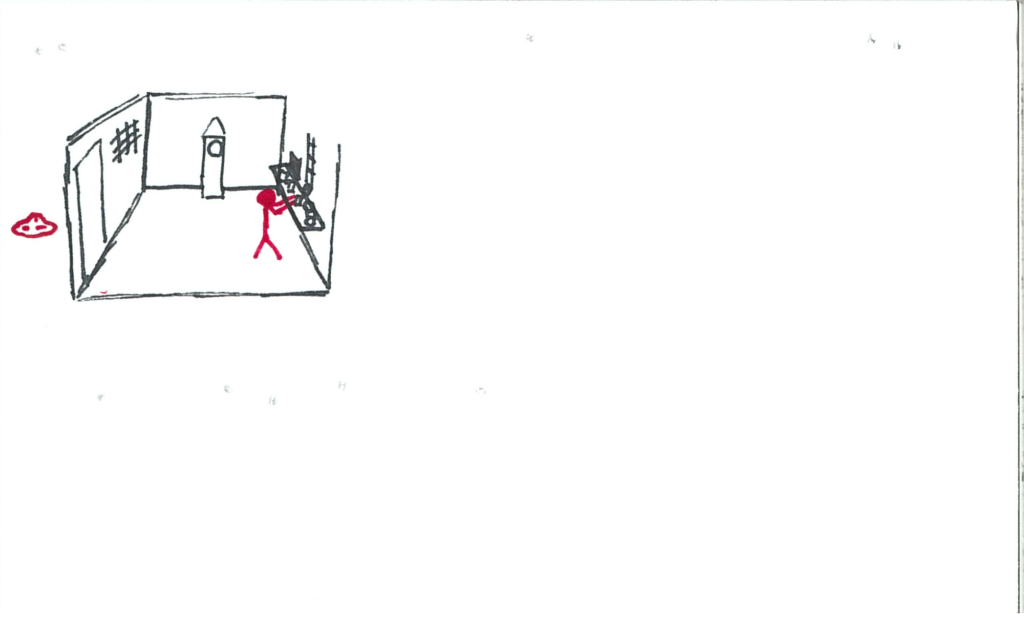

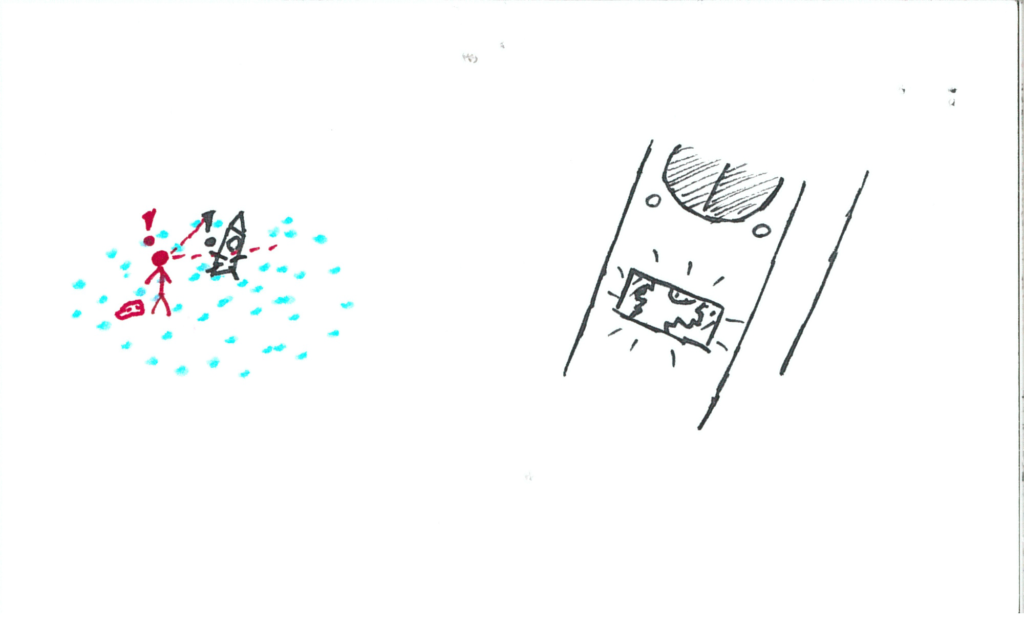

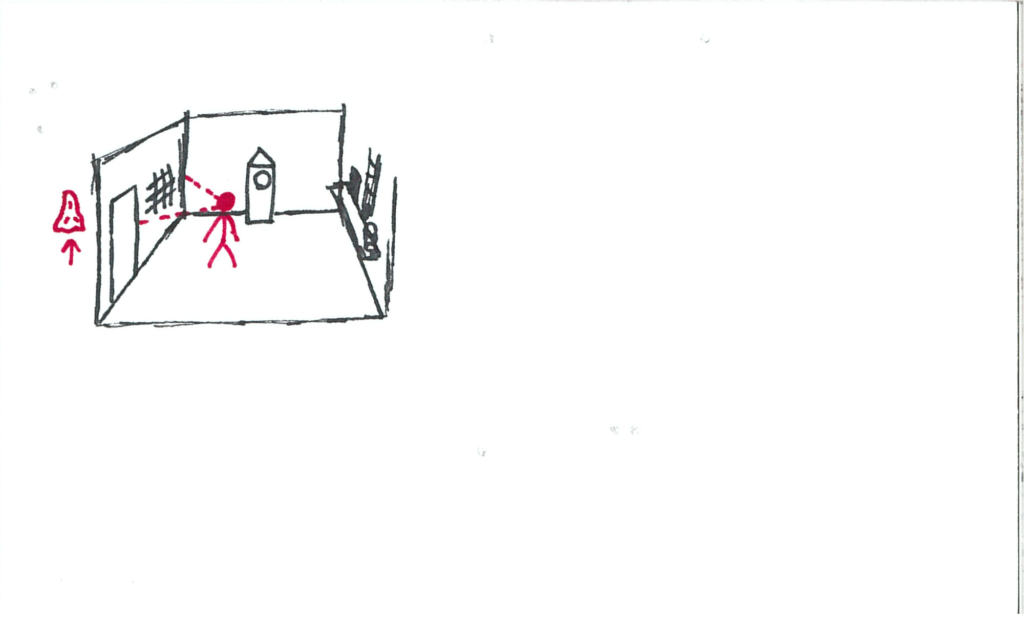

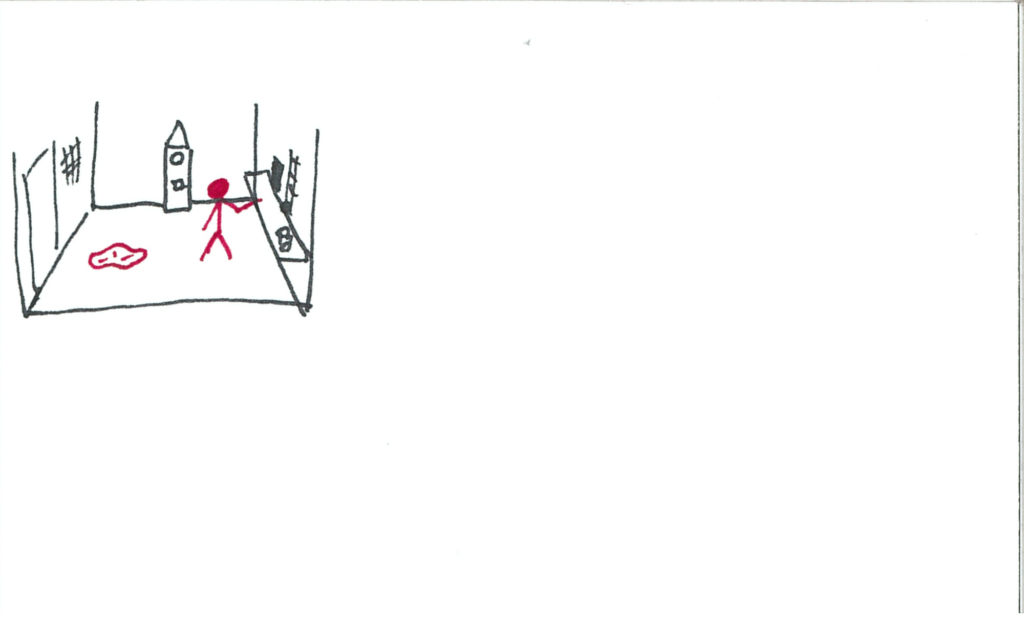

When the user looks at the blanket, it is picked up by an invisible force!

Once the blanket is lowered, a person is revealed to now be holding it! This person makes eye contact with the user and walks the blanket over to the window, offering it to the user.

Once the user takes the blanket, it falls limp in their hands and the unknown person is nowhere to be seen!

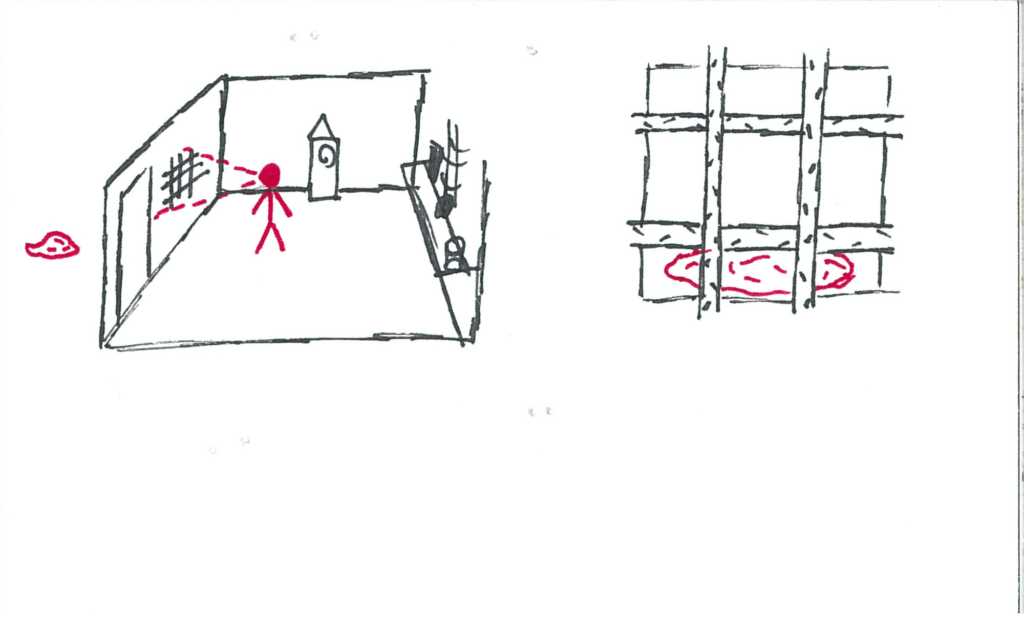

The blanket has some curious visual behaviours to it, encouraging the user to look at it up close.

Eventually the user will fill their entire field of view with the blanket upon investigating (this is drawn as pulling the blanket over the user’s head).

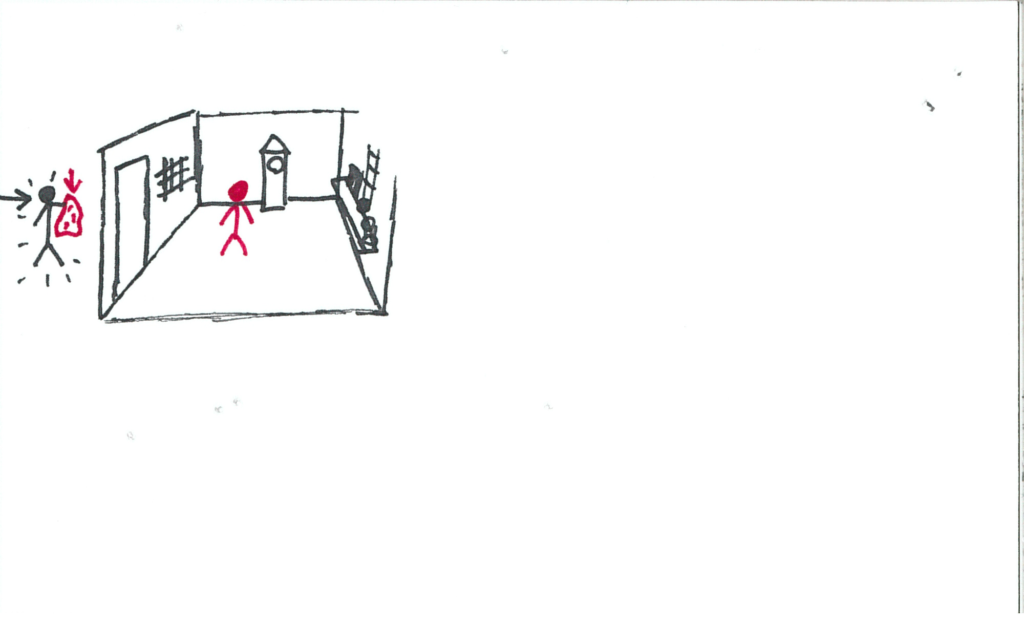

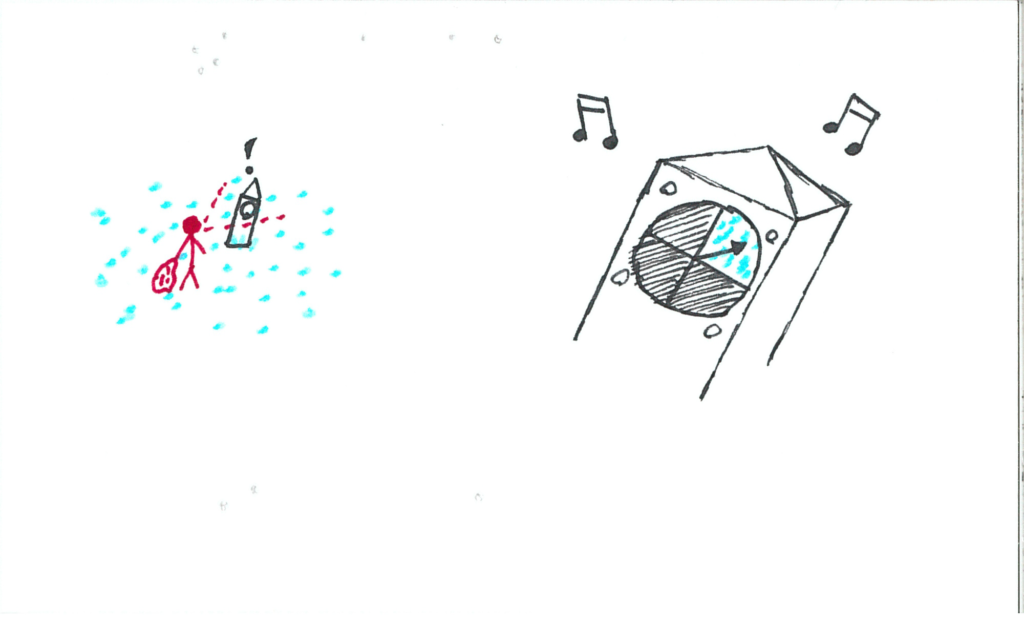

Upon removing/lowering the blanket, the user will find themselves in a magical world! The same tune from the opening credits will begin to play. This world will be in an expansive void with floating luminescent particles. The grandfather clock is still front and centre.

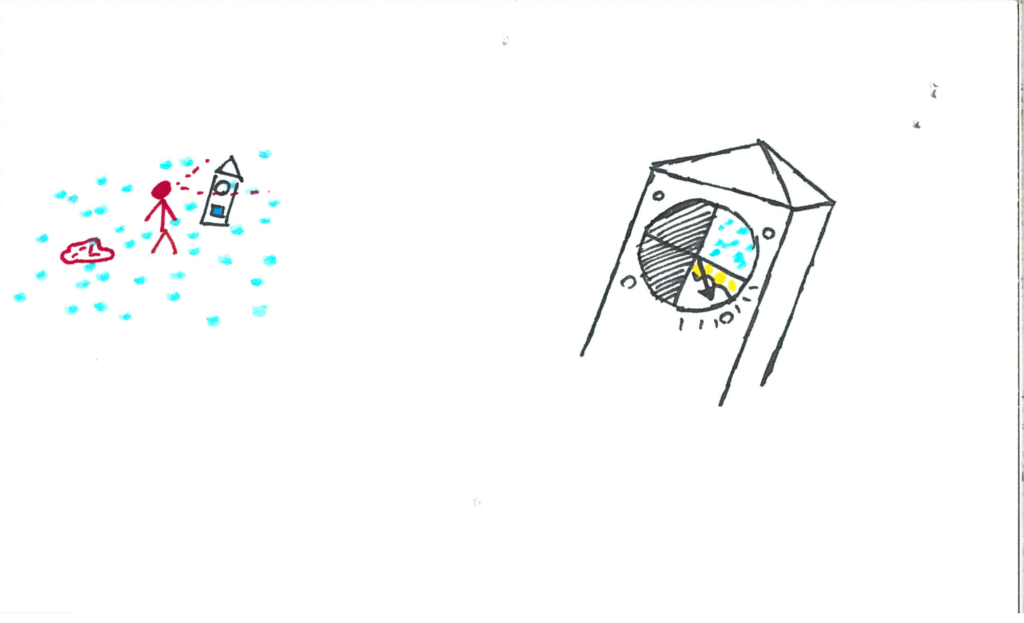

The clock chimes again. The face is different now. The hand is pointed to a drawing of this world, and there are three other portions which are unlit.

The energy source to the clock bursts. It is now dimly lit blue.

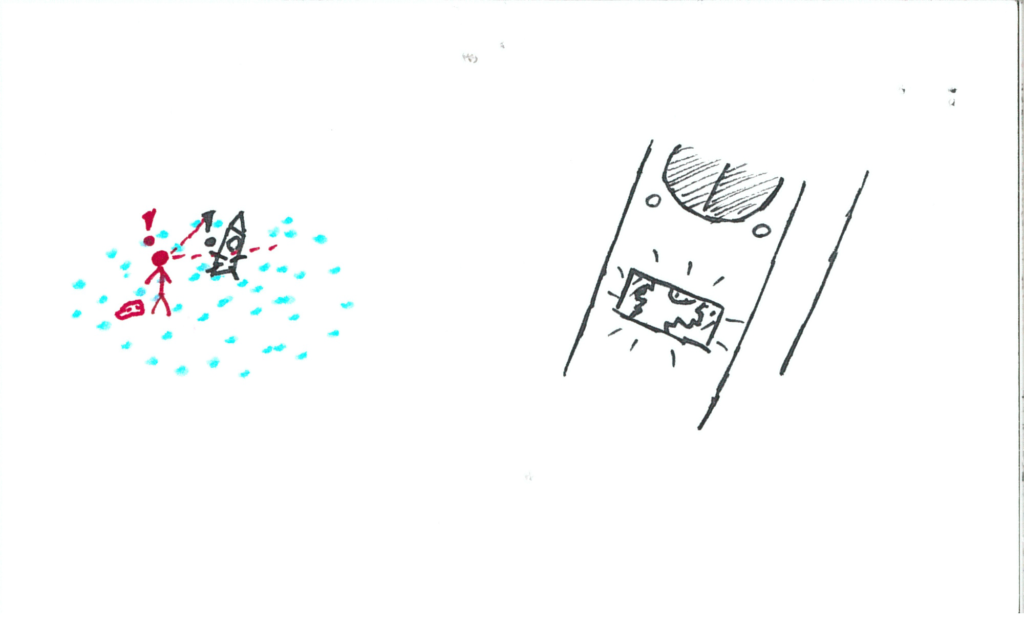

Eventually the user will interact with a particle. The particle will start revolving around their controller.

The user will insert the particle into the clock’s slot.

The music picks up. A new section of the clock face illuminates! The user will press the button next to that face (also illuminated), and the hand will tick to that location.

The user will block their view again with the blanket.

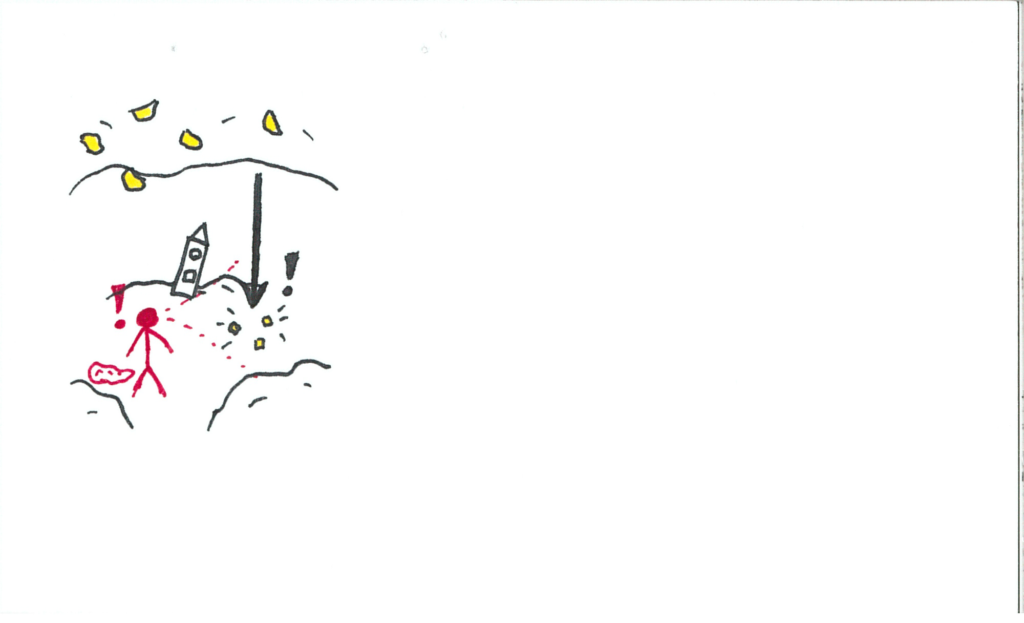

Upon removing/lowering the blanket, the user will find themselves in a cave. There are luminescent crystals in the ceiling, and the clock’s slot is dimly lit their colour. A particle came with the user when changing worlds, and floats up to the ceiling. Clock is still front and centre.

The particle bumps a crystal.

The crystal falls and shatters on the ground.

The user will eventually identify they need a way to safely acquire a crystal to insert into the clock, and so they will return to the particle void.

The user will grab a few particles and return to the cave.

The user will catch one of the crystals as it falls, preventing it from shattering. Note: The crystals will replenish. I draw them as finite sources, but for the sake of gameplay, they will respawn between visits.

The user inserts the crystal into the clock. Music picks up again.

A new section of the face illuminates, along with its button. The user hits the button and the hand ticks over.
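Since the clock loop repeats in every world, it can be thought of as a tiny state machine. Here’s a rough sketch of that idea, assuming names I invented for illustration (`Clock`, `UNLOCKS`, `insert`, `press`, `use_blanket`, and the world labels); the real thing may end up structured quite differently. Each world accepts one item in the clock’s slot, that item lights up the next face, the button moves the hand, and the blanket takes the user wherever the hand points.

```python
# Hypothetical rules: world -> (item its slot accepts, face that item lights up).
UNLOCKS = {
    "void": ("particle", "cave"),
    "cave": ("crystal", "forest"),
    "forest": ("stick", "workroom"),
}


class Clock:
    def __init__(self, start="void"):
        self.location = start  # world the user is standing in
        self.hand = start      # face the clock hand points to
        self.lit = {start}     # faces that are illuminated

    def insert(self, item):
        """Put an item in the slot; light the next face if it is the right one."""
        expected, unlocked = UNLOCKS.get(self.location, (None, None))
        if item != expected:
            return False
        self.lit.add(unlocked)
        return True

    def press(self, face):
        """Press the button next to a face; the hand only moves if it is lit."""
        if face not in self.lit:
            return False
        self.hand = face
        return True

    def use_blanket(self):
        """Covering and uncovering the view moves the user to the hand's world."""
        self.location = self.hand
        return self.location


clock = Clock()
clock.insert("particle")    # lights the cave face
clock.press("cave")         # hand ticks over
print(clock.use_blanket())  # user lowers the blanket in the new world
```

Keeping the hand and the user’s location separate is what lets the user set a destination first and only travel once the blanket covers their view.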

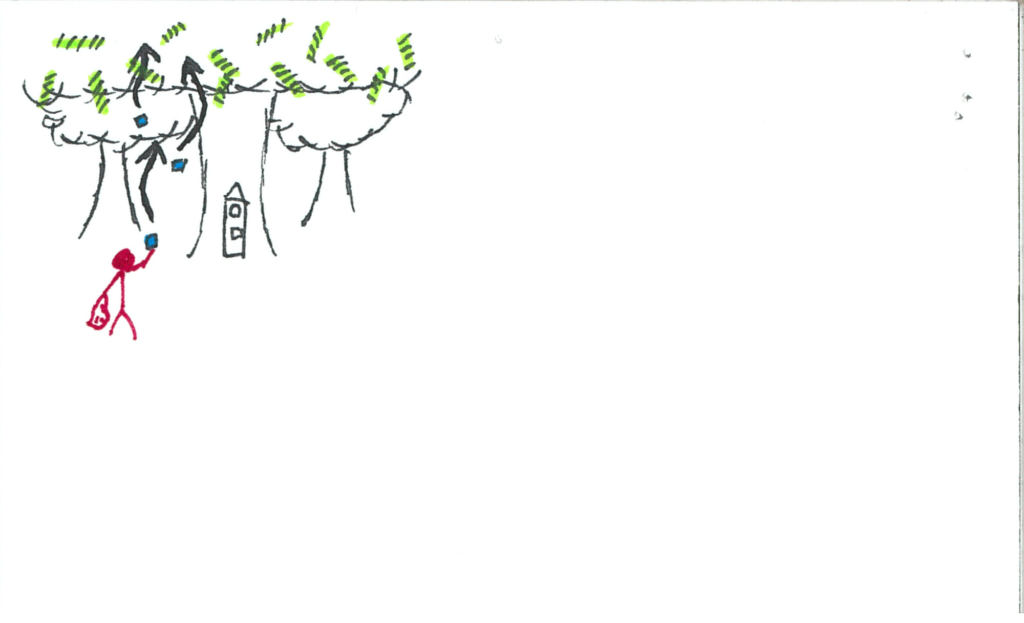

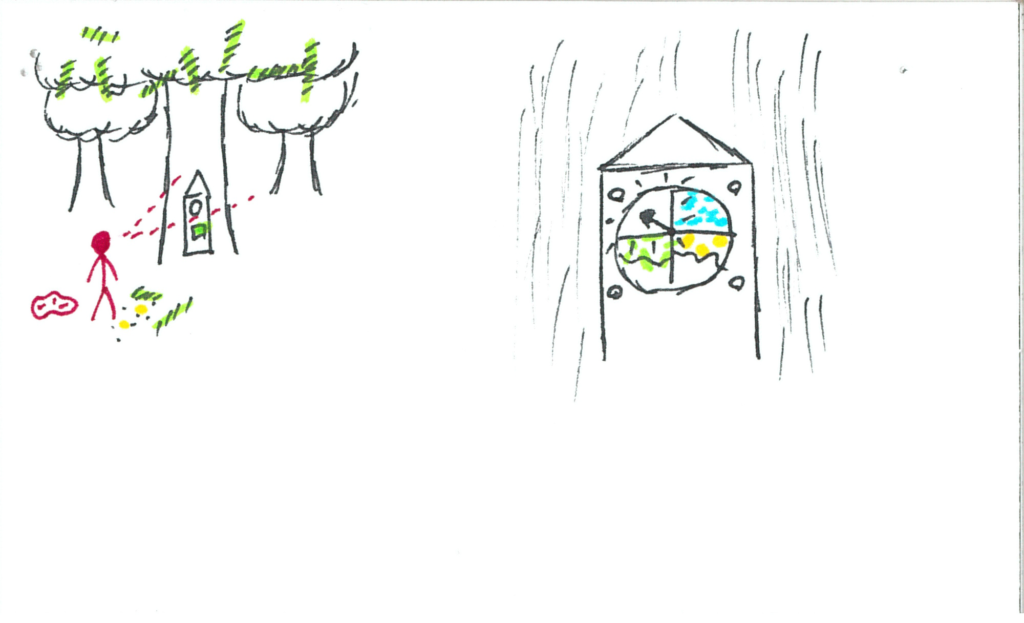

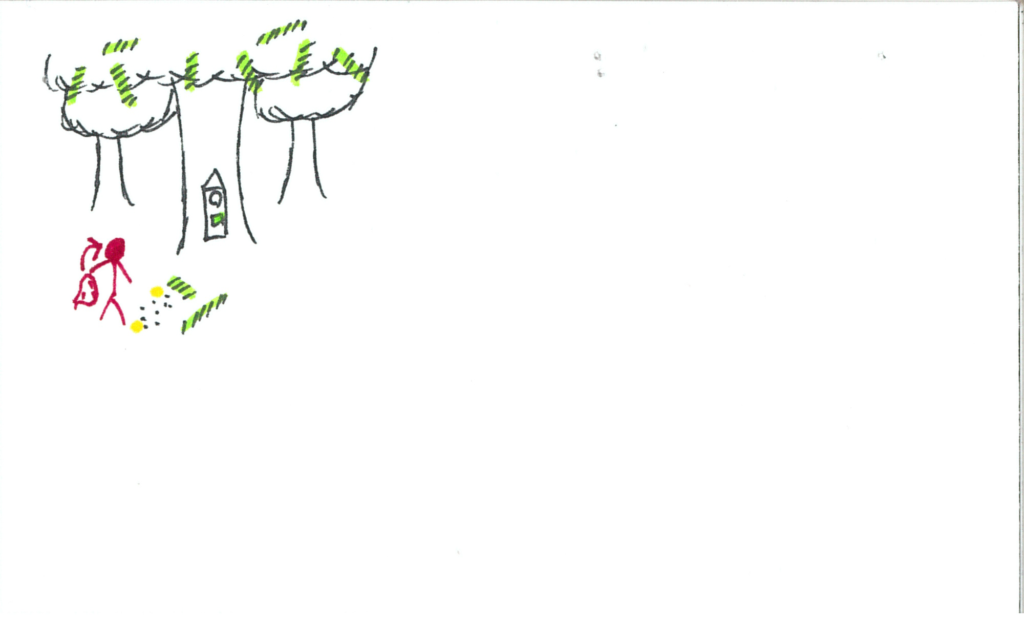

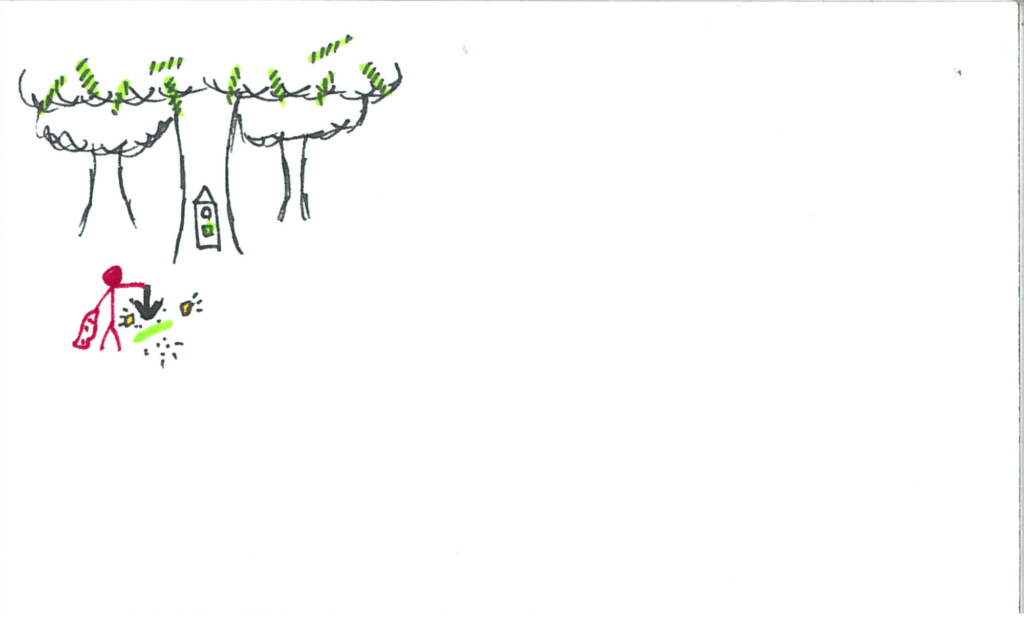

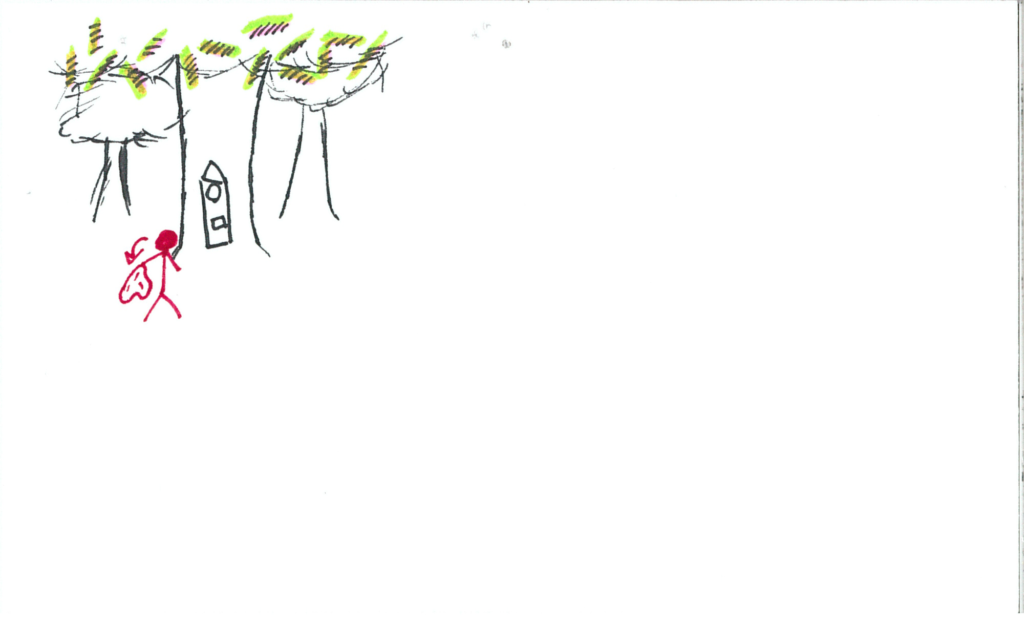

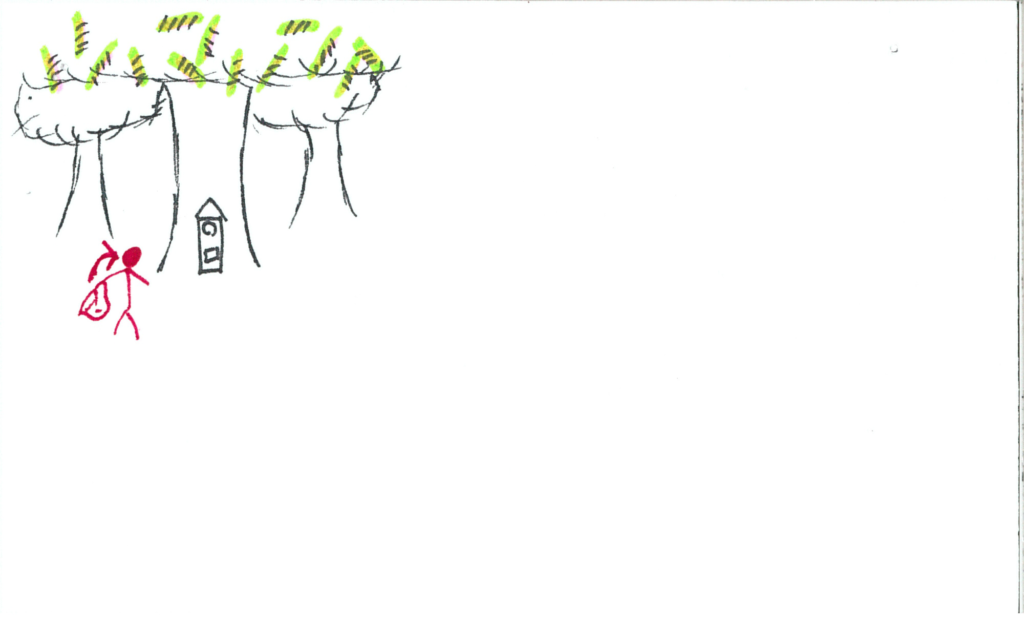

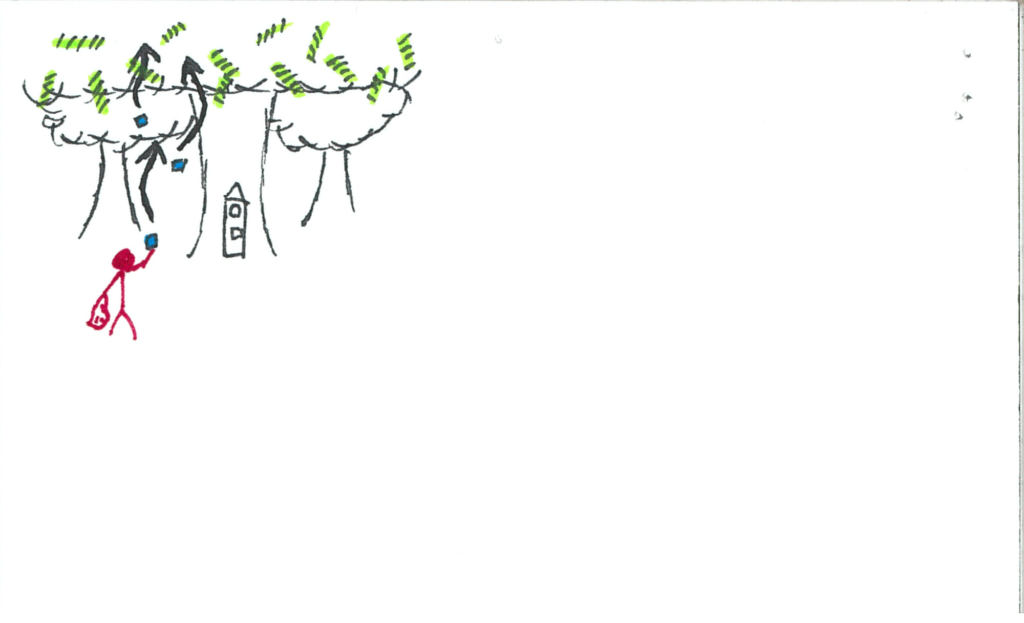

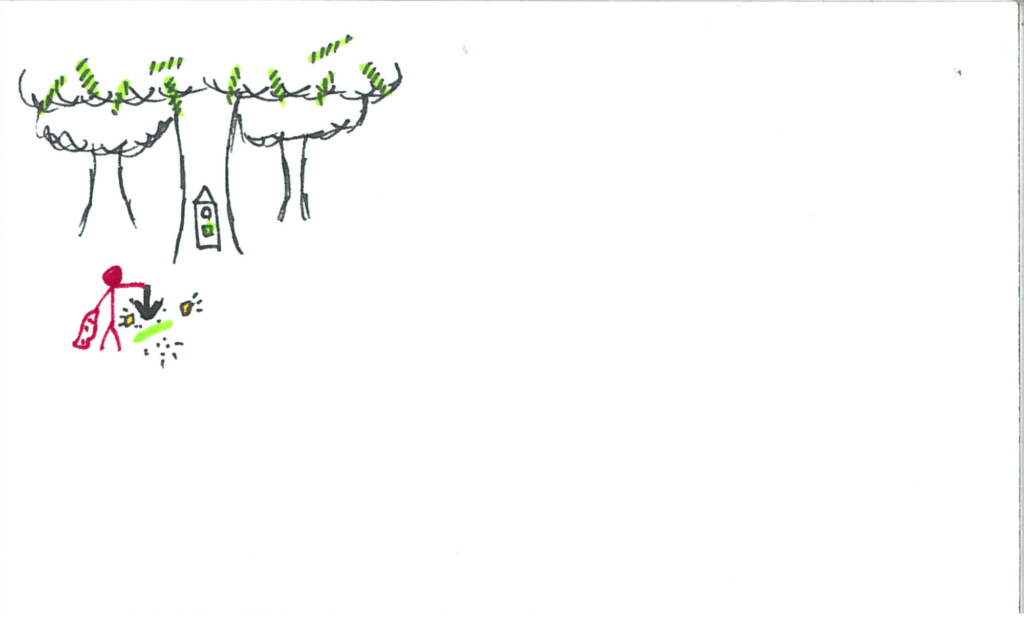

The user now finds themselves in a massive forest. The clock is dimly lit green. There are luminescent green objects in the trees. The grandfather clock is front and centre as usual, this time inside a tree trunk.

The user heads back to the particle void.

The user releases the particles into the trees.

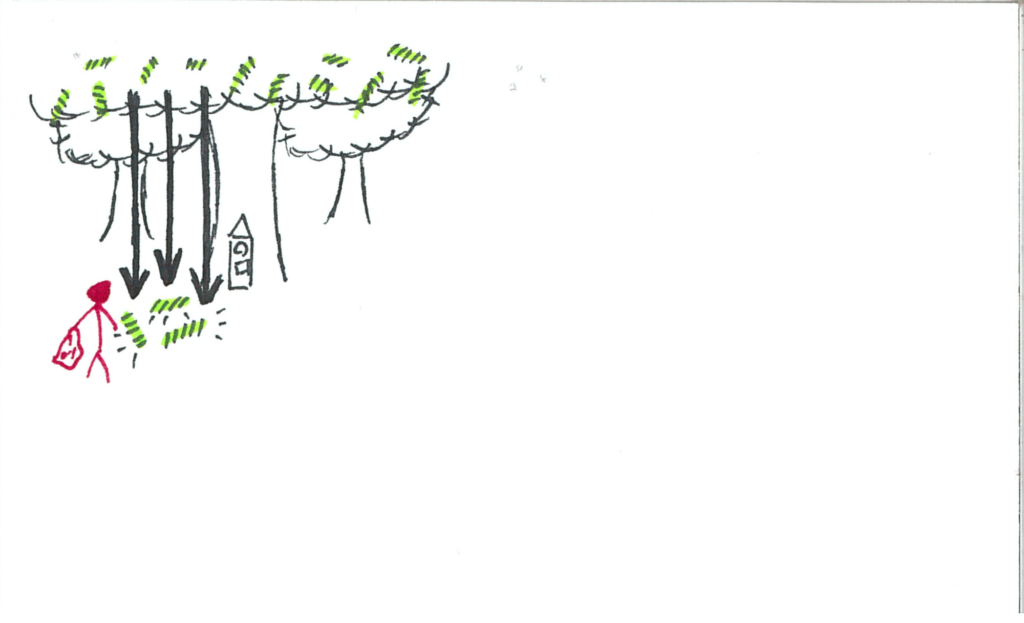

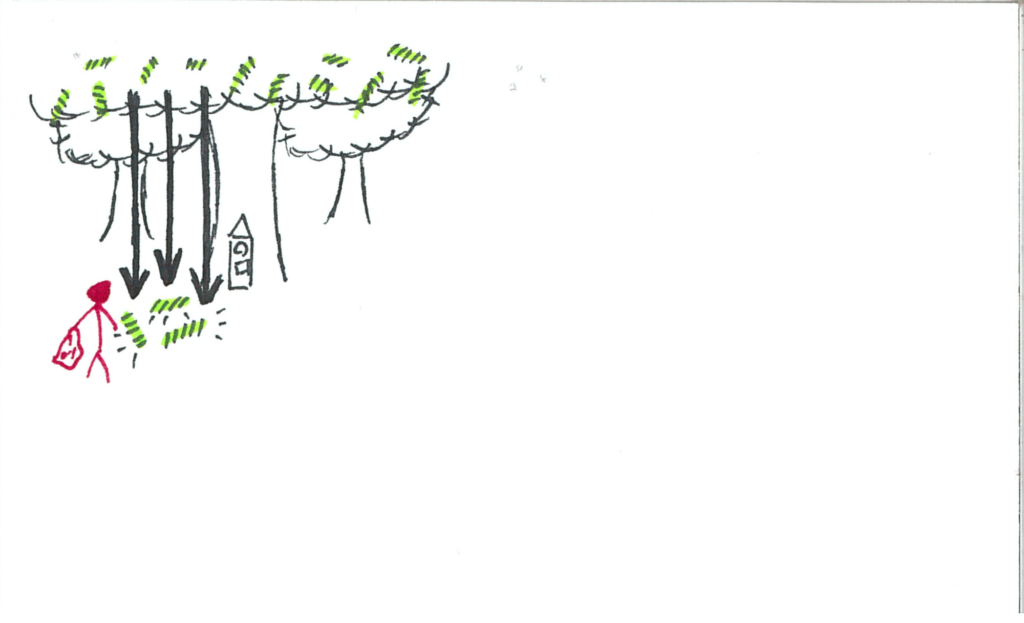

The luminescent sticks fall to the ground, but are encased in an outer shell.

The user now needs a way to break open the shells. The user goes to get a crystal.

The user drops the crystal onto a shell. The crystal and the shell shatter, revealing the luminescent stick.

The user grabs the stick.

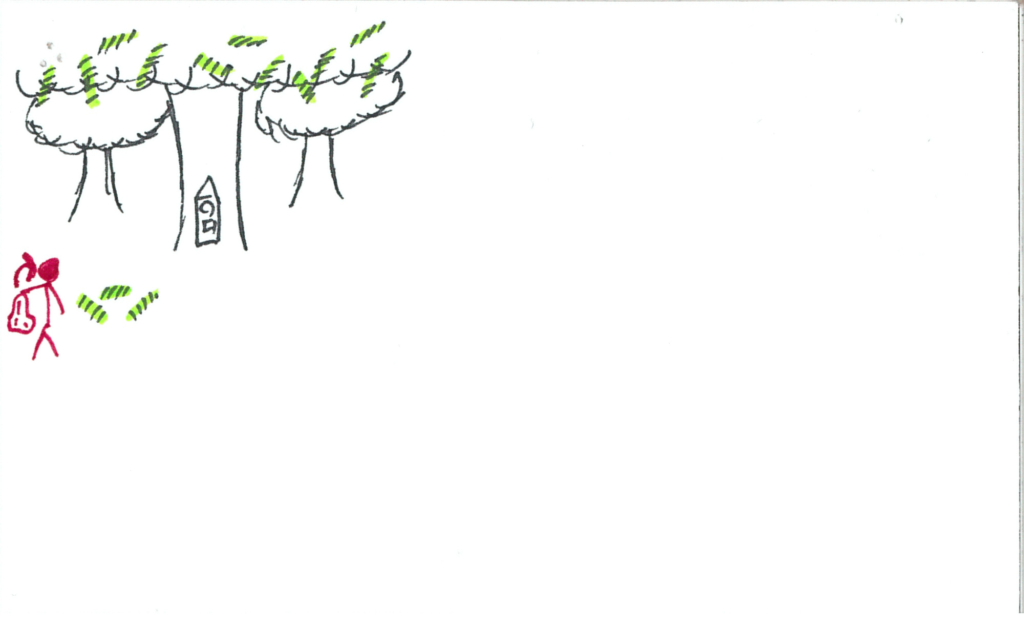

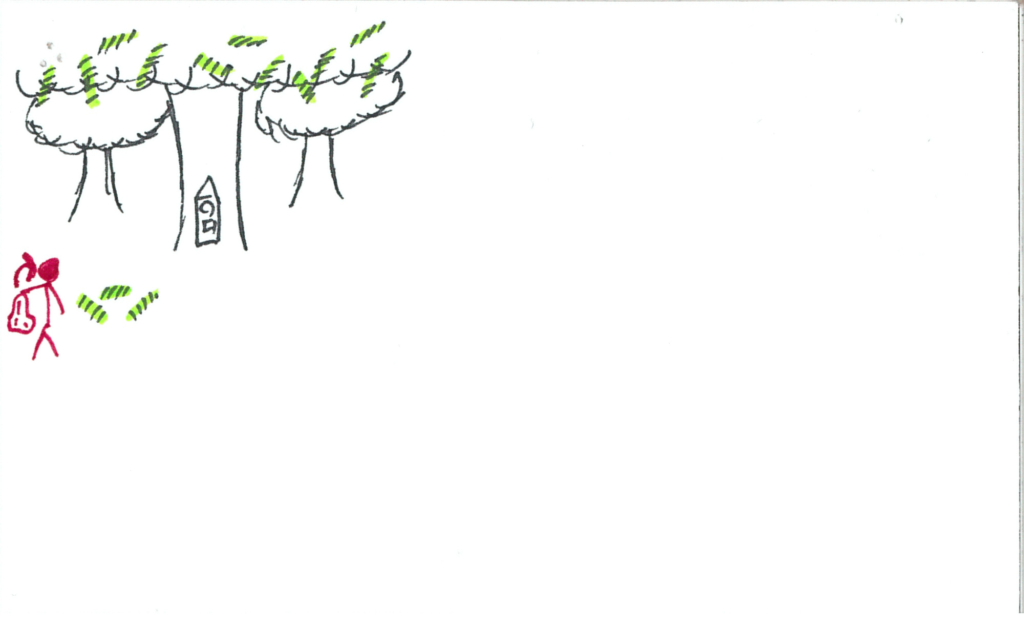

The user inserts the stick into the clock. Music picks up once more.

The clock illuminates its final face, a bright white light. The user hits the button and the hand ticks over.

The user goes to this mystery world.

Upon removing/lowering the blanket, the music abruptly and uncomfortably stops. The user is back in the workroom.

The work whistle blows.

A tag comes down the pipe.

It requests resources that the user certainly does not have: specifically, cubes.

However, upon looking at their controller, they have a blue particle still revolving around it! Note: these particles are cubes.

The user goes back to the particle void to collect more particle cubes.

The user fulfills the order with the magical cubes.

The customer is happier than any customer before!

Another order arrives. It’s a doozy! The order requests all of the resources.

The user knows what to do! They go to each realm to gather the necessary resources.

The user assembles the complete order with all their magical items.

The same yellow orb from the opening falls into the money jar.

The user grabs the orb. The clock is dimly glowing yellow.

The user inserts the orb into the clock.

The music pleasantly resumes, as the room fades out to black, leaving the user and the clock together in darkness.

Roll credits!

So! That is Somniat :) I’d love to hear what you all think of this idea. Shouldn’t be too long before it’s a full experience and we can give it a go!

As I finish developing this narrative, I’ll continue sharing my experience here. If you have any questions or feedback (I love feedback!), let me know in the comments or by email at blog@talariavr.com. I should be getting back into hardware topics soon :)